Why We Will Need Millions of LLM Developers: Launching Towards AI’s New One-Stop Conversion Course

From Beginner to Advanced LLM Developer!

Ahead of the weekend, we want to update you all with a one-off edition of our Tuesday newsletter. At Towards AI, we have been focusing on our core strength: LLM Developer expertise. This is a very new field, given these models and techniques have only been available for 2–3 years. With our technical AI publication and network of 2,000 past writers, we had strong access to the early developers, teachers, and hackers in this LLM Builder community.

We have hired great LLM Developers from our network to help us build great tutorials, write our Building LLMs for Production book, and keep you all updated with weekly developments. This talent has also helped develop many internal LLM pipelines and tools, and we have also partnered as consultants with several companies to build their first LLM products.

However, because it is a new discipline, we think the LLM Developer role is still poorly understood. In this deep dive, we explain how the LLM Developer role differs from Software Development and Machine Learning, and why the importance of non-technical skills to building great LLM products is often underappreciated. We also explain why we think technical development on top of foundation LLMs is here to stay and will always provide better results for specific tasks in specific industries than out-of-the-box foundation models and no-code customization.

Of course, this is also an opportunity to launch our new, extremely in-depth, hands-on, 85+ lesson practical LLM Developer course! We brought together everything we learned from building over the past two years to teach the full stack of LLM skills. It is a one-stop conversion course for software developers, machine learning engineers, data scientists, aspiring founders, or AI/Computer Science students, junior or senior alike. We think many millions of LLM developers will be needed to build reliable customized products on top of foundation LLMs and achieve mass GenAI adoption in companies. We want to help you or your friends and colleagues lead this new field (so please share!).

From Beginner to Advanced LLM Developer

Why should you learn to become an LLM Developer? Large language models (LLMs) and generative AI are not a novelty — they are a true breakthrough that will grow to impact much of the economy. However, achieving this impact requires developing customized LLM pipelines for different tasks, and this will require many millions of LLM Developers. LLM Developer is a distinct new role, different from both Software Developer and Machine Learning Engineer, and requires learning a new set of skills and intuitions.

In our new course, we will teach what we strongly believe is the core tech stack of the future: LLMs, Prompt Engineering, Data Curation, Retrieval-Augmented Generation (RAG), Fine-Tuning, Agents, and Tool Use. These are all techniques to build on top of LLMs and customize them to specific applications. They boil down to ensuring the LLMs have clear and effective instructions (Prompting), can find and use the most relevant data for the task (Data Collection, Curation, and RAG), are adapted towards specific tasks (Fine-tuning), and are connected together in more complex pipelines with access to external tools (Agents). We also teach some of the new non-technical and entrepreneurial skills that are key to this role, such as how to integrate industry expertise and make the right product development decisions and economic trade-offs. We will keep this course continuously updated and aim to make it the gold standard practical LLM Developer certification. Taught via 85 written lessons, code notebooks, videos, and instructor interaction — this is the perfect one-stop conversion course for Software Developers, Machine Learning Engineers, Data Scientists, aspiring founders, or Computer Science Students to join the LLM revolution.

LLMs are already starting to add real value across many industries, from consumer apps to software development, science, finance, and healthcare. Some of these models cost large tech companies close to $1bn to create, yet many “foundation” LLMs are available for you to use at no upfront cost via API or even for free download. These are extremely valuable assets you can now utilize to get a huge head start in building a new tool or product. The development time and cost can be drastically reduced when building on top of these assets. There is a reason why large tech companies are now spending an annualized rate of over $100 billion on GPUs for training AI models!

We think a large proportion of non-physical human tasks can be assisted to various degrees in the near term with this tech stack, which provides a huge opportunity for individuals, startups, or incumbent companies to build new internal tools and external products. However, a large amount of work has to be delivered to access the potential benefits of LLMs and build reliable products on top of these models. This work is not performed by machine learning engineers or software developers; it is performed by LLM developers by combining the elements of both with a new, unique skill set. There are no true “Expert” LLM Developers out there, as these models, capabilities, and techniques have only existed for 2–3 years.

From Beginner to Advanced LLM Developer is a conversion course for Software Developers, Machine Learning Engineers, or Data Scientists to transition into an LLM Developer role or at least to add this skillset to their repertoire. The core principles and tools of LLM Development can be learned quickly. However, becoming a truly great LLM developer requires cultivating a surprisingly broad range of unfamiliar new skills and intuitions. This includes more full-stack code, product development, communication, entrepreneurial and economic strategy skills, and bringing in more industry expertise from your target market. We teach the LLM Developer technical skillset together with practical tips and new non-technical considerations that are key to the role. The course shares code and walks through the full process for building, iterating, and deploying a complex LLM project, the AI Tutor chatbot accompanying the course. To complete the course and receive certification, you will build and submit your own working LLM RAG project. This is the perfect opportunity to take an early lead in developing the LLM developer skillset and go on to become one of the first experts in this key new field!

Towards AI has been teaching AI for over 5 years now, and over 500,000 people have learned from our tutorials and articles. You may have also read our best-selling LLM book “Building LLMs for Production” or taken our three-part GenAI:360 course series, which we released last year in partnership with Intel and Activeloop and which reached over 40,000 students. This new course, however, is at another level. Built by over 15 LLM developers on our team over the past year, it offers much more depth and practical experience than our prior tutorials, courses, and books. In fact, with 85 lessons, we think this is the most comprehensive LLM developer course out there. You will also have access to our instructors in the dedicated course channel within our 60,000-strong Learn AI Together Discord Community. The course will also be regularly updated with new lessons as new techniques and tools are released.

Sign Up Today for From Beginner to Advanced LLM Developer

The first part of the course includes 60 code-heavy lessons covering the full development process of a single advanced LLM project, all the way from model selection, dataset collection, and curation to RAG, advanced RAG, fine-tuning, and deploying the model for real-world use. Part 2 of the course introduces many more tools and skills that can be added to the LLM developer’s toolkit. Part 3 of our course will help you decide which LLM project to build yourself, give examples of successful LLM products, and cover factors such as product defensibility, suitability to different industries and workflows, and the broader economic impact of Generative AI. The fourth and final part of the course is your practical project submission, which, together with earning your certification, could function as the perfect portfolio project for your CV or the Proof of Concept for a new tool at your company.

There are many options for tools, frameworks, and models to use during LLM development; we aim to keep this course impartial and discuss many alternative options for each. While we naturally demonstrate different code projects with specific models and tools, many of these can easily be switched for your own projects. During this course, you will learn how to use LLM model families such as OpenAI’s GPT-4o, Google DeepMind’s Gemini, Anthropic’s Claude 3.5, and Meta’s Llama 3.1. While most lessons make use of the LlamaIndex LLM framework, you will also be introduced to LangChain and many other useful LLM developer tools and platforms.

Over 3 million people have taken early steps experimenting with LLM APIs using platforms such as OpenAI, Anthropic, Together.ai, Google Gemini, Nvidia, and Hugging Face. This course takes you much further and walks you through overcoming LLMs’ limitations, developing LLM products ready for production, and becoming a great full-stack LLM developer! We hope taking this course will allow you to take part in the LLM revolution and not be left behind by it. It may enable you to create a revenue-generating side project, the seed of an ambitious startup, or a personal tool for your admin or hobbies. It could help you land one of the 10,000+ live AI jobs requiring parts of this skill set listed on our AI jobs board. Alternatively, it may allow you to bring these new skills into your current job and lead a new LLM project or team at your company.

It is important to reiterate that this course is heavily focused on learning via practical application, and we keep the theory to the minimum needed to build strong LLM products. We cut to only teaching theory specifically useful for adapting LLMs and not that needed for training machine learning models. Therefore, some of the broader theories behind ML and LLMs are only touched on at a high level so that students can form an idea of the bigger picture and enable them to put the LLM skills we teach into context. Many of the key skills and topics we cover in this course are progressively taught in more and more detail throughout the 85 lessons as we introduce more complexity to our project examples. Many of the skills and technical terms touched on in this lesson will be explained in the early lessons of the course.

Why Do We Need To Develop Custom LLM Pipelines?

LLMs are already beginning to deliver significant efficiency savings and productivity boosts when assisting workflows for early adopters. For example, Klarna reported $40m savings from their customer assistant with a model that likely costs below $100k to run. The Amazon Q LLM coding assistant saved Amazon 4,500 developer years and delivered $260m in efficiency gains during a recent Java upgrade. Huge standalone LLM pipeline businesses are also being built, with Perplexity AI, a RAG- and fine-tuning-focused pipeline built on foundation LLMs, reportedly now raising at an $8bn valuation. Harvey AI, a legal industry-focused LLM pipeline, raised funding at a $1.5bn valuation this year (we include a deep dive on how both these products were built later in the course!). At an earlier stage, 75% of startups in Y Combinator’s August 2024 cohort focused on AI-related products.

Still, we are very, very early in the adoption cycle, and it inevitably takes a lot of time for enterprises to explore, test, adopt, or develop new technologies. In particular, LLM “hallucinations” and errors are key issues, and the reliability threshold is often the missing piece for the wide-scale enterprise rollout of LLM solutions. We will cover these AI model mistakes in detail in the early lessons of this course. Despite significant efforts by central AI labs and open-source developers to increase LLM capabilities and to adapt “foundation” LLMs to human requirements, off-the-shelf foundation models still have limitations and flaws that restrict their direct use in production. After factoring in the time spent getting ChatGPT or Claude working for your tasks and then diligently checking and correcting its answers, the productivity gains are quickly reduced.

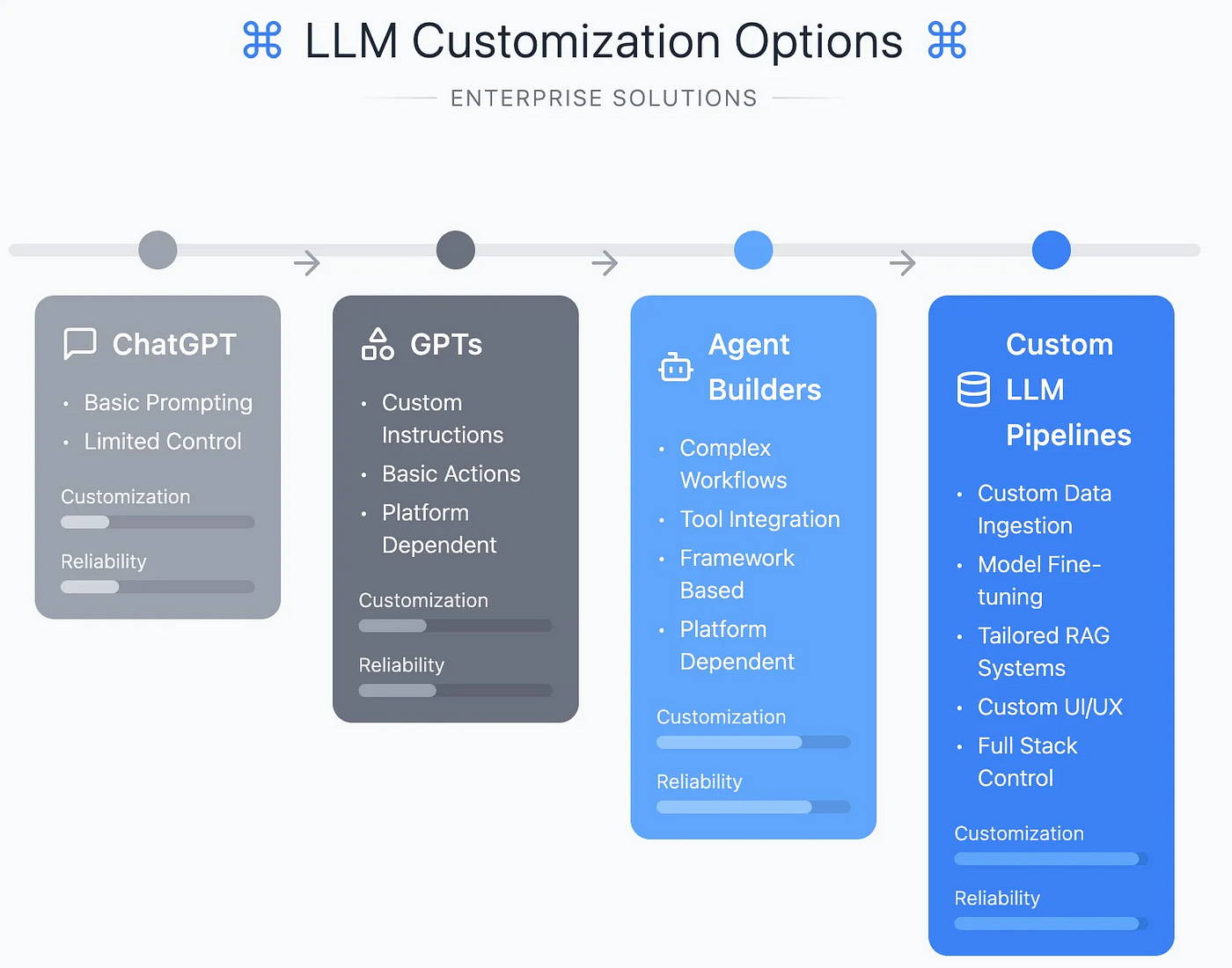

These problems can be partially solved by task customization and internal data access via no-code Agent/GPT builder platforms. We think agent builder platforms such as Microsoft Copilot Studio agents and Enterprise adoption of ChatGPT are likely to be a taster and stepping stone for companies towards exploring the huge benefits of LLMs. However, we still believe a lot more flexibility and hard work is needed to truly optimize an LLM for specific datasets, companies, and workflows and to get the best possible reliability, productivity gains, and user experience. This work can’t be fully achieved with no-code tools and without knowing how LLMs work. In our view, custom LLM pipelines are really needed in Enterprises. We expect a huge wave of enterprise development of in-house customized LLM tools together with an exploration of the best-in-class third-party LLM pipelines built for specific workflows and industries. This also creates huge opportunities for individual developers to take part in the development effort or to launch a new startup or side project. We can even envision a future where almost every team at most companies, technical or non-technical, has its own LLM Developer who helps to find and test external products or build the best customized LLM pipelines adapted for the team’s data and workflows.

Given the rapid pace of progress in LLM technology, strong competition at big tech companies for the state-of-the-art model, and the different strengths and weaknesses of each AI lab — we also think it is important not to build dependency on a single LLM provider. Therefore, LLM pipelines and customizations such as RAG, fine-tuning, and agent frameworks should be built using your own software or third-party frameworks, allowing easy switching of the foundation models used in your product.
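One way to keep the foundation model swappable is to have pipeline code depend on a small interface of your own rather than on a vendor SDK. The sketch below is only an illustration under assumptions: `ChatModel`, `EchoModel`, `BACKENDS`, and `answer` are hypothetical names, and `EchoModel` is a stand-in that echoes the prompt where a real backend would call a provider's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


class ChatModel(Protocol):
    """Minimal interface the rest of the pipeline depends on (hypothetical)."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend for local testing; a real one would wrap a provider SDK."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


# Registry mapping a configuration string to a backend factory.
# Swapping providers then means changing one string, not the pipeline code.
BACKENDS: Dict[str, Callable[[], ChatModel]] = {
    "provider_a": lambda: EchoModel("provider_a"),
    "provider_b": lambda: EchoModel("provider_b"),
}


def answer(question: str, backend: str) -> str:
    """Pipeline step that only sees the ChatModel interface."""
    model = BACKENDS[backend]()
    prompt = f"Answer concisely: {question}"
    return model.complete(prompt)


if __name__ == "__main__":
    print(answer("What is RAG?", "provider_a"))
    print(answer("What is RAG?", "provider_b"))
```

Frameworks such as LlamaIndex and LangChain offer their own model abstractions that serve the same purpose; the point here is only that prompts, retrieval, and evaluation code should not need to change when the underlying model does.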

Once you have decided to build your own custom LLM pipeline, the initial decision is which LLMs to use and whether to use a closed LLM via API or a more flexible platform where you have full access to open-source model weights. The next decisions are how to acquire and prepare a custom dataset for your pipeline and which techniques to use to improve and tailor the LLM’s performance to your industry and use case. In particular, this includes data collection, scraping, data cleaning and structuring, prompting, RAG, fine-tuning, tool use, agent pipelines, and customized user interface. Some people may also want to experiment with training their own LLMs from scratch; however, in our opinion, this will now rarely be practical or economical outside the leading AI labs and tech companies, so we don’t cover training in this course. Why struggle and spend to train a model yourself when you can instead quickly build upon one that costs a leading AI lab towards $1bn to train?

Customized LLM Pipelines and End User LLM Education Will Unlock Faster Adoption

While LLM “hallucinations” are still an issue, we already have many methods to address them. A key point often missed in the discussion is that humans themselves are not perfect either, and we already have experience making workflows robust to the mistakes of junior colleagues. The progress towards more reliable self-driving cars has popularly been referred to as “the march of the 9s”: iteratively solving digits of reliability for the system, for example, the journey from 90% to 99% and then 99.9% accuracy. It is relatively easy to get an impressive demo together, but it takes increasing amounts of work, iteration, and complexity to solve more and more edge cases, reduce failures, and get the system ready to deploy in the wild. We think LLM pipelines are also on their own “march of the 9s,” and the reliability of LLM applications has the potential to cross a key reliability threshold for assisting with many more tasks at enterprises in the near future. This exact threshold depends on the ease of fixing errors and the downside risk of missing them, and it varies significantly case by case. We expect progress due to:

More development work on advanced custom LLM pipelines, improving reliability and ease of use for specific tasks (via RAG, fine-tuning, agents, data preparation, prompting, etc.).

Better employee education (how to use and build with LLMs, where not to use them, where not to trust them, why not to fear them).

Better foundational LLMs (in particular with better reasoning capabilities).

Crucially, progress on any of these drivers individually could be enough to allow Generative AI to contribute huge economic value to new workflows. We think those using the technology now will be best prepared to create value as new models are released and new capabilities are unlocked.
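The “march of the 9s” arithmetic can be made concrete with a short calculation. This is a rough sketch, not a reliability model: it assumes each request (and each pipeline step) fails independently, and the function names are hypothetical.

```python
def failures_per_10k(accuracy: float) -> int:
    """Expected failed requests out of 10,000 at a given per-request accuracy."""
    return round(10_000 * (1 - accuracy))


def pipeline_reliability(step_accuracy: float, steps: int) -> float:
    """End-to-end success rate if every step must succeed (independence assumed)."""
    return step_accuracy ** steps


if __name__ == "__main__":
    for acc in (0.90, 0.99, 0.999):
        print(
            f"accuracy={acc}: "
            f"{failures_per_10k(acc)} failures per 10k requests, "
            f"5-step pipeline succeeds {pipeline_reliability(acc, 5):.1%} of the time"
        )
```

Each extra 9 cuts failures tenfold (1,000, then 100, then 10 per 10,000 requests). Under the independence assumption, a five-step agent pipeline at 90% per-step accuracy succeeds end to end only about 59% of the time, versus roughly 99.5% at 99.9% per step, which is why multi-step pipelines need much higher per-step reliability than a demo does.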

Often, we think the adoption of LLMs is also slowed down by user “skill” issues. People get disappointing results from any LLM pipeline when they don’t understand LLMs, don’t have the imagination for how they could use it, don’t understand how to communicate with it, and don’t understand how to work “with it” synergistically. For example, someone might have a clear image of what the perfect response looks like — but cannot clearly articulate this in language. Hence, they are not giving the model the instructions it needs, and they get poor results. LLM Developers can help in this process by building user education and ease of use into the product design.

We also think it is important to address the fears of your end users; many people are scared about AI taking their jobs and do not want to use tools that take on some of their responsibilities. It is important to make it clear these LLM tools are not here to replace workers but instead to empower them. However, rather than workers being replaced by AI tools, we think there is a very real risk of “AI hesitant” employees across many industries losing their jobs to people who do learn to use these tools effectively. As an early example, we recently watched IgniteTech’s CEO at the FT Live AI conference discuss how he had replaced 80% of his employees over 15 months because they were not demonstrating they were fully on board with his “AI First” transformation!

Competitive and Obsolescence Risks Come From NOT Using LLM Tools

Some people have questioned the business risk and defensibility of building on top of LLMs and dismissively write them off as “wrapper” businesses. We disagree and actually think building LLM pipelines is often now the safest and most rational choice for developing any new business or tool. Of course, there is far more to building a successful business or product than just the LLM pipeline code within it. For some products, the LLM pipeline will provide the bulk of the functionality while for others, it will be a much smaller part and combine with more traditional software or different types of machine learning models.

Building LLM pipelines can help you deliver anything from personal side projects and small businesses to huge $100m+ enterprise cost savings and even unicorn startup scale new products (such as Perplexity). If you choose not to make use of these ~$1bn ready-built foundation LLM assets in your product because you fear competitors copying your LLM pipeline — these competitors can use these models to compete with your non-LLM enhanced product anyway! We often see the “sunk cost” fallacy at play here — CEOs may not be willing to accept their existing assets or expertise can be replaced by foundation LLMs and consequently make the poor economic and product decision to not make use of them.

Many LLM projects can also provide value, time, or cost savings to individuals or teams, even if they are not ultimately aiming to be defensible external revenue-generating products. However, huge long-term businesses can be built on top of LLMs, too. Defensibility is possible and primarily comes down to choosing to tackle an industry niche or workflow where you 1) have a very specific internal workflow or product in mind where a customized LLM pipeline will get improved results, or 2) already have or plan to develop a data or industry expertise advantage and build this directly into your product.

New LLMs and new capabilities will continue to be released at a rapid pace, and these will integrate more and more features natively. These will unlock new capabilities, but today’s LLM developer stack and LLM pipelines are transferable and will also be essential for adapting next-generation models to specific data, companies, and industries. LLM-driven productivity can be extremely valuable, and we expect companies to pay for the best-in-class and most reliable LLM pipeline for their use case. This best-in-class customized product will create the most value for companies. Foundation model capabilities will get better, but we expect that your custom pipeline, custom dataset, tailored RAG pipeline, hand-picked fine-tuning and multi-shot task examples, custom evaluations, custom UI/UX, and thorough understanding of your use case and customers’ problems will always add incremental reliability and ease of use. The latest “ChatGPT” update or latest new foundation LLM may, at times, temporarily leapfrog the performance of your custom LLM pipeline, but you can immediately transfer your LLM pipeline on top of this latest LLM and regain the lead on your niche tasks.

Those using the models of today are best placed to take advantage of the models of the future! We focus on teaching the core principles of building production-ready products with LLMs, which will keep this course relevant as models change. We also think the new non-technical entrepreneurial and communication skills you need to learn as an LLM Developer are harder to automate than many other areas of software.

How Do LLM Developers Differ From Software Developers and ML Engineers?

Building on top of an existing foundation model with innate capabilities saves huge amounts of development time and lines of code relative to developing traditional software apps. It also saves huge amounts of data engineering expertise, machine learning, infrastructure experience, and model training costs relative to training your own machine learning models from scratch. As discussed, we believe that to maximize the reliability and productivity gains of your final product, LLM developers are essential and need to build customized and reliable apps on top of foundation LLMs. However, all of this requires the creation and teaching of new skills and new roles, such as LLM developers and prompt engineers. And great LLM developers are in very short supply!

Software Developers primarily operate within “Software 1.0”, focusing on coding for explicit, rule-based instructions that drive applications. These roles are generally specialized by software language and skills. Many developers have years of experience in their field and have developed strong general problem-solving abilities and an intuition for how to organize and scale code and use the most appropriate tools and libraries.

Machine Learning Engineers are focused on training and deploying machine learning models (or “Software 2.0”). Software 2.0 “code” is abstract and stored in the weights of a neural network where the models essentially program themselves by training on data. The engineer’s job is to supply the training data, the training objective, and the neural network architecture. In practice, this role still requires many Software 1.0 and data engineering skills to develop the full system. Roles can be specialized, but expertise lies in training models, processing data, or deploying models in scalable production settings. They prepare training data, manage model infrastructure, and optimize resources to train performant models while addressing issues such as overfitting and underfitting.

Prompt Engineers focus on interacting with LLMs using natural language (or “Software 3.0”). The role involves refining language models’ output through strategic prompting, requiring a high-level intuition for LLMs’ strengths and failure modes. They provide data to the models and optimize prompting techniques and performance without requiring code knowledge. Prompt Engineering is most often a new skill rather than a full role — and people will need to develop this skill alongside their existing roles across much of the economy to remain competitive with their colleagues. The precise prompting techniques will change, but at the core, this skill is simply developing an intuition for how to use, instruct, and interact with LLMs with natural language to get the most productive outputs and benefit from the technology.

LLM Developers bridge Software 1.0, 2.0, and 3.0 and are uniquely positioned to customize large language models with domain-specific data and instructions. They select the best LLMs for a specific task, create and curate custom data sets, engineer prompts, integrate advanced RAG techniques, and fine-tune LLMs to maximize reliability and capability. This role requires understanding LLM fundamentals and techniques, evaluation methods, and economic trade-offs to assess an LLM’s suitability to target workflows. It also requires understanding the end user and end-use case. You are bringing in more human industry expertise into the software with LLM pipelines — and to really get the benefits of customization, you need to better understand the nuances of the problem you are solving. While the role uses Software 1.0 and 2.0, it generally requires less foundational machine learning and computer science theory and expertise.

We think both Software Developers and Machine Learning Engineers can quickly learn the core principles of LLM Development and begin the transition into this new role. This is particularly easy if you are already familiar with Python. However, becoming a truly great LLM developer requires cultivating a surprisingly broad range of unfamiliar new skills, including some entrepreneurial skills. It benefits from developing an intuition for LLM strengths and weaknesses, learning how to improve and evaluate an LLM pipeline iteratively, predicting likely data or LLM failure modes, and balancing performance, cost, and latency trade-offs. LLM pipelines can also be developed more easily in a “full stack” process, including Python, even for the front end, which may require new skills for developers who were previously specialized. The rapid pace of LLM progress also requires more agility in keeping up to date with new models and techniques.

The LLM Developer role also brings in many more non-technical skills, such as considering the business strategy and economics of your solution, which can be heavily entwined with your technical and product choices. Understanding your end user, nuances in the industry data, and problems you are solving, as well as integrating human expertise from this industry niche into your model, are also key new skills. Finally — LLM tools themselves can at times be thought of as “unreliable interns” or junior colleagues — and using these effectively benefits from “people” management skills. This can include breaking problems down into more easily explainable and solvable components and then providing clear and foolproof instructions to achieve them. This, too, may be a new skill to learn if you haven’t previously managed a team. Many of these new skills and intuitions must be learned from experience. We aim to teach some of these skills, thought processes, and tips in this course — while your practical project allows you to develop and demonstrate your own experience.

As CEO of an AI education and consultancy company and advisor to several AI startups, I have overseen the creation of many LLM pipelines to assist with internal workflows, as well as to deploy to help our users. These products include our AI Tutor, our AI Jobs Board (currently showing 40,000+ live AI jobs), and lesson writing tools. Our LLM-enhanced jobs data pipeline continuously searches for jobs that meet our AI criteria and regularly removes expired jobs. We make it much easier to search for and filter by specific AI skills (including via a chat interface), and we also allow you to set email alerts for jobs with specific skills or from specific companies. This rich dataset of skills now desired by companies also informed our choice of AI skills to teach students! We have also built LLM pipelines for external companies and startups as part of our LLM enterprise training and consultancy business line. In this course, we will teach you to replicate some of the things we have built and how to think about which LLM pipeline workflows or products make the most sense for you to build yourself.

Course Prerequisites

It is now very easy to use and, to some extent, even build rudimentary apps with large language models with no programming skills, no technical knowledge of AI, and limited mathematical ability. Even as a developer, you generally only need a basic grasp of Python to start building generative AI apps, and no understanding of the inner workings of AI, neural networks, and LLMs. This is in part because the “Foundation” LLM you use already has a huge amount of capability and does much of the work for you, making the remaining coding much less intensive than with traditional software. It is also helped by new LLM-powered coding assistants such as GitHub Copilot and Cursor, as well as the ability to question LLMs such as ChatGPT for coding ideas and explanations.

Nevertheless, no-code LLM development still has many limitations, and building your own advanced production-ready apps and products using these models still requires a significant development effort. Hence, this course requires knowledge of Python or at least high confidence in software development and a willingness to learn Python in parallel. Python is known for its simple and intuitive syntax that resembles the English language, so it is generally quick to pick up for a confident developer (particularly if using LLM tools to help explain and teach as you go); however, Python basics are not taught as part of this course.

💡 Python knowledge is a prerequisite.

We are currently finishing work on another course, Python Primer for Generative AI: From Coding Novice to Building with LLMs. This new course is available for pre-order and will be the perfect foundation for non-developers to prepare to take From Beginner to Advanced LLM Developer. In the meantime, if you have no knowledge of computer science or Python, please first spend some time learning about Python and computer science from external resources before taking this course.

💡 Knowledge of AI or mathematics is NOT a prerequisite.

We think understanding the key workings of LLMs and how they are made gives better intuition for their strengths and weaknesses, more understanding of where extra features need to be built into LLMs (either with code, data in context, or expertise and instructions in prompts) and better ability to make the most of them. However, we think the full theoretical understanding of machine learning fundamentals and how to train LLMs is not necessary to create useful projects and products with these technologies. Therefore, knowledge of AI or mathematics is not a prerequisite.

💡 We will not get bogged down in theory, but we will teach you a first principles understanding of how LLMs work, which helps you build better apps and Generative AI workflows.

Course Syllabus

The LLM tech stack lends itself to “modular” product development, where you iteratively build a more complex, reliable, and feature-complete product by adding and replacing elements of a core LLM pipeline. We like to start by building a full-stack Python “Proof of Concept” (PoC), including front-end deployment using a Python framework. This will integrate the easiest “LLM quick wins” for your identified niche and workflow (again, you see why some elements of business strategy and economics are entwined with this role). After this, you can use an evaluation framework, testing, and feedback to iteratively improve parts of your LLM pipeline as you advance the project and add features toward a “Minimum Viable Product.” At some point in this journey, you will likely also build a more custom front end, pulling in expertise from front-end languages. This is the method we teach in this course, and it is suitable for building anything from personal hobbies and side projects to startup MVPs, as well as enterprise proofs of concept for both internal workflows and external products.
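To make the “add and replace elements, then evaluate” loop concrete, here is a minimal sketch in plain Python. Everything in it is a hypothetical stand-in rather than course code: the two retrievers, the `echo_generator` (which simply returns the retrieved text instead of calling an LLM), and the keyword-match metric are illustrative assumptions only.

```python
from typing import Callable, List, Tuple

# Hypothetical two-document corpus used only for this illustration.
DOCS = [
    "RAG retrieves relevant chunks before generation.",
    "Quantization reduces model size.",
]

def make_pipeline(retriever: Callable[[str], List[str]],
                  generator: Callable[[str, List[str]], str]) -> Callable[[str], str]:
    """Compose swappable parts into one question -> answer function."""
    def answer(question: str) -> str:
        return generator(question, retriever(question))
    return answer

def evaluate(pipeline: Callable[[str], str], cases: List[Tuple[str, str]]) -> float:
    """Fraction of cases whose answer contains the expected keyword."""
    hits = sum(1 for q, expected in cases if expected.lower() in pipeline(q).lower())
    return hits / len(cases)

def naive_retriever(question: str) -> List[str]:
    # Baseline: always return the first document, ignoring the question.
    return DOCS[:1]

def keyword_retriever(question: str) -> List[str]:
    # Replacement part: return documents sharing at least one word with the question.
    words = set(question.lower().replace("?", "").split())
    matches = [d for d in DOCS if words & set(d.lower().rstrip(".").split())]
    return matches or DOCS[:1]

def echo_generator(question: str, context: List[str]) -> str:
    # Stand-in for an LLM call: just return the retrieved context.
    return " ".join(context)

CASES = [
    ("What does RAG do?", "chunks"),
    ("What does quantization do?", "model size"),
]

baseline_score = evaluate(make_pipeline(naive_retriever, echo_generator), CASES)
improved_score = evaluate(make_pipeline(keyword_retriever, echo_generator), CASES)
print(baseline_score, improved_score)
```

The point of the design is that the evaluation cases stay fixed while one component at a time is swapped, so any change in score can be attributed to that component.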

Course Part 1

In part 1 of the course (60 lessons), we teach core LLM and RAG techniques through the development of an AI Tutor, a chatbot designed to answer AI-related questions. This part of the course covers a wide range of foundational topics, starting with data collection, scraping, parsing, and filtering and then progressing to constructing a basic RAG pipeline. We also introduce many advanced RAG techniques to optimize data retrieval and enhance the model’s contextual accuracy. You’ll learn to work with vector databases, build RAG applications, and leverage tools like LlamaIndex for more efficient data handling. We also teach fine-tuning for both LLMs and embedding models. A significant portion of the course focuses on ensuring that only the most relevant data (or “chunks”) is retrieved and processed, using techniques like query augmentation, metadata filtering, re-ranking, and advanced retrieval methods. We also explore more advanced options such as using ReAct Chat for multi-turn conversation, implementing native audio interaction capabilities, and making use of long context windows with context caching for more in-depth research and more complex user conversations about a dataset. We conclude this part by showing how to deploy and share a proof of concept using an API, Gradio, and Hugging Face Spaces, enabling you to build a complete, functional chatbot.
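As a rough illustration of the retrieval step in a basic RAG pipeline with metadata filtering, here is a minimal sketch using only the Python standard library. It is not the course's implementation: the toy `CHUNKS` corpus is made up, and `embed` is a bag-of-words stand-in for a real embedding model. In practice a vector database or a tool such as LlamaIndex would handle this step.

```python
import math
import re
from collections import Counter

# Toy corpus: each chunk carries text plus metadata (all values are made up).
CHUNKS = [
    {"text": "LlamaIndex builds a vector index over document chunks.", "source": "llamaindex"},
    {"text": "Re-ranking reorders retrieved chunks by relevance to the query.", "source": "blog"},
    {"text": "Gradio lets you share a chatbot demo from Python.", "source": "gradio"},
    {"text": "A vector index stores embeddings for similarity search.", "source": "llamaindex"},
]

def embed(text):
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, chunks, top_k=2, source=None):
    """Filter chunks by metadata, then rank the rest by similarity to the query."""
    candidates = [c for c in chunks if source is None or c["source"] == source]
    q = embed(query)
    ranked = sorted(candidates, key=lambda c: cosine(q, embed(c["text"])), reverse=True)
    return ranked[:top_k]

def build_prompt(query, retrieved):
    """Place the retrieved chunks in the context that would be sent to the LLM."""
    context = "\n".join(f"- {c['text']}" for c in retrieved)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

top = retrieve("How are embeddings stored for similarity search?", CHUNKS, top_k=1)
print(build_prompt("How are embeddings stored for similarity search?", top))
```

The metadata filter runs before scoring, so restricting the search with `source="gradio"` can only ever return chunks tagged with that source, however similar other chunks are to the query.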

Part 1 is taught using one-off Colab notebooks, written lessons, and videos, all designed to be hands-on. As you work through the material, you’ll be encouraged to “learn by building,” combining the techniques from these lessons into a final, working AI Tutor. The course emphasizes the experimental nature of building RAG pipelines and LLM applications, where trial and error are integral to the process. When building, you don’t already know the right answer: you try out techniques that might not work for your use case, and you hack together initial solutions that you might later decide are worth investing time to improve or replace. We don’t just explain the final model step by step; we also show the paths we took to get there. As such, not all the techniques we teach are integrated into our final model: some we experimented with but found did not lead to improvements, some are not worth the cost and latency trade-offs, and some are better suited to different use cases or datasets.

At the end of part 1 of the course, we hope you will have replicated a version of our AI tutor, working with the dataset we provide (a smaller subset of our own full AI Tutor dataset).

Course Part 2

In part 2 of the course (15 lessons), we will focus on teaching other LLM tools and AI models that we didn’t use but that you may want to integrate into your own LLM project. We want you to start thinking about what is possible with LLMs and consider a richer array of tools and capabilities than we taught in part 1 for our specific AI Tutor application. We cover topics from diffusion models to LLM agents to other useful APIs that can be used in LLM pipelines. We also cover more details of LLM deployment and optimization, such as pruning, distillation, and quantization. We will describe and give tips on using these tools and platforms and provide links to documentation, but we will not give full practical project examples for all lessons in this section.
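As a rough illustration of what quantization means among the optimization topics above, here is a minimal sketch of symmetric 8-bit quantization in plain Python. It is a simplified teaching example, not how any particular library implements it, and the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale factor; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in quantized]

weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, restored)
```

Each weight is stored as a small integer plus one shared scale factor, which is where the memory saving comes from. The cost is a rounding error of at most half the scale per weight.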

Course Part 3 (Optional)

In part 3 of the course (9 lessons), we provide extra thoughts and materials from our founder and CEO to help you think through the future of AI as an industry and exactly what app, use case, or niche you want to build for yourself or your company. He brings thoughts from 6 years of building, advising, and investing in machine learning startups and AI products, together with business and investment understanding from past experience as a lead analyst in Investment Research at J.P. Morgan.

We think one of the key barriers to LLM adoption is imagination and the willingness to adapt work processes to new LLM capabilities. It can be very hard to think through exactly how you can get value out of LLMs in your daily personal or professional life or what workflows and products are best suited to enhancing with an LLM pipeline. This is true both from a non-technical perspective, in working out how to prompt and use models, and from a technical perspective, in working out where to get started in building your own LLM pipelines. This optional section of the course aims to help you think through how LLM adoption will play out and what you could build for yourself or your company.

With such fast-moving technology, it is very difficult to know which tasks LLMs can assist with or automate both today and tomorrow. What will a successful LLM product look like? How do we choose between chatbots, copilots, autopilots, and full automation? Will LLM value be captured by foundation model companies, LLM startups, early corporate adopters of the technology, or end users? What will lead to a defensible LLM product? Which industries and tasks are well suited to benefit from these technologies already? We share examples of successful products people have built with LLMs and RAG and try to inspire you to think of your own original idea for building your own AI app, something that is meaningful to you, your hobbies, your work, or your desired career path.

Course Part 4

In the final part of the course, you will build and submit your own advanced LLM app.

This final project could just be your compilation of a custom version of our own AI tutor chatbot from our code in part 1 (but with a dataset that is expanded and filtered using the techniques we teach). However, we highly encourage you to think of a different and original application that matches your interests or industry experience. This could be an AI tutor for another industry, a more in-depth RAG research assistant, a customer support chatbot, or a pipeline to collect, clean, structure, and search unstructured data, among many other possibilities! We only ask that the project uses RAG within the tech stack and foundation model LLMs (which may or may not be fine-tuned). Your project will need to integrate a minimum number of advanced LLM techniques taught in our course. We teach you how to deploy your app via API or on Hugging Face Spaces, but you may also build a custom front end.

We will review your product and code at a high level to ensure it works and includes sufficient customization. Then, we will award you certification for completing the course and developing a working LLM product.

We hope this project will serve you as a portfolio project for landing a job in AI, as the Minimum Viable Product (MVP) for launching your own startup or side project, or as a Proof of Concept (PoC) for a custom LLM project at your company or for your clients. We will also reach out to the creators of the most interesting and novel project submissions to explore ways of helping them incubate their project or find work in the industry with us or one of our partners.

Learning Resources

To help you with your learning process, we are sharing our open-source AI Tutor chatbot to assist you when needed. It was created using the same tools we teach in this course: we built a RAG system that gives an LLM access to the latest documentation from all significant tools, such as LangChain and LlamaIndex, as well as material from our previous courses. If you have any questions or require help during your AI learning journey, whether as a beginner or an expert in the field, you can reach out to our community members and the ~15 writers of this course in the dedicated channel (space) for this course in our 60,000-strong Learn AI Together Discord Community.

Sign Up Today for From Beginner to Advanced LLM Developer at Towards AI Academy

https://academy.towardsai.net/courses/beginner-to-advanced-llm-dev

Spot on. The transition from Software 1.0 to LLM development isn't just about learning Python; it's about shifting from 'deterministic logic' to 'probabilistic flows.'

However, there's one more role we'll need millions of: AI Reliability Engineers. Most LLM pipelines today are still too fragile for production because they treat sessions as volatile. If we want mass GenAI adoption, we need to move from 'wrapper' thinking to 'durable runtime' thinking. An agent that can't survive a simple server restart or a long-running tool timeout is just a demo, not a product. Durable execution is the missing link in this new tech stack.
