This AI newsletter is all you need #60

What happened this week in AI by Louie

As the AI race has accelerated this year, AI chip training and inference capacity have become vital resources, and we noted several developments over the past weeks. Both large tech companies and younger AI startups have been scrambling to get access to deliveries of Nvidia H100s, and we are excited to see the emergence of the next generation of models powered by these chips after production ramped up this summer. Many AI startups have been raising large investments to primarily fund AI chip training capacity, which has also led to intriguing new avenues of financing, showcased by CoreWeave recently raising $2.3 billion in debt, secured by their valuable Nvidia chip assets.

Nvidia recently announced the new GH200 Grace Hopper superchip platform, which features 144GB of memory and moves from Intel CPUs to Nvidia's proprietary Grace CPU. The new system is expected in 2Q 2024. While Nvidia is accelerating production of the H100 chips and progressing with new platforms, we also expect Google to be making progress on its next generation of TPU, v5, and we look forward to updates here. Google DeepMind has increasingly been hyping progress with its next-generation "Gemini" LLM, but it is not yet clear whether it will be trained using TPU v4, v5, or H100s.

Alongside developments at large tech companies, we are also witnessing increasing newsflow at AI chip startups, showcased by the recent $100M fundraising of the Jim Keller-led Tenstorrent. This week also saw the launch of a new startup from a team previously responsible for Google's TPU chips and the PaLM model. The project is called MatX, with the mission to “make AI better, faster, and cheaper by building more powerful hardware.” MatX focuses on hardware purely for LLMs. We expect competition in AI chips to be very strong, and it will be tough to keep pace with the current leaders, but there is a huge opportunity for hardware innovation and new technologies and ideas to continue to enable progress in AI.

- Louie Peters — Towards AI Co-founder and CEO

Hottest News

1. Big Tech X Generative AI Q2 Update

During the Q2 earnings calls, prominent tech companies highlighted their progress in Generative AI. Amazon emphasized its efforts in training and running inference on Large Language Models (LLMs), while Microsoft showcased AI integration across various domains. Meta directed its investments towards recommendation systems and open-source innovations, exemplified by the Llama 2 model. Alphabet underscored its AI investments in search and productivity, whereas Apple unveiled AI features slated for iOS 17.

2. Researchers Develop AI That Can Log Keystrokes Acoustically With 92–95 Percent Accuracy

AI researchers have developed a method for hacking passwords by analyzing the acoustic cues of typed letters. Through testing with various recording sources, the technique has demonstrated a success rate exceeding 90%, reaching up to 95% accuracy when utilizing smartphone microphones.

3. Toyota, Pony.ai Plan To Mass Produce Robotaxis in China

Toyota and its partner, Pony.ai, have outlined their intention to commence mass production of robo-taxis in China. This collaboration aims to advance self-driving car technology in the country and develop "smart cockpits" for the Chinese market. Toyota's investment in this endeavor surpasses 1 billion yuan (equivalent to $140 million).

4. Microsoft Kills Cortana in Windows As It Focuses on Next-Gen AI

Microsoft is set to shut down its digital assistant app, Cortana, this month. The company is redirecting its focus towards newer AI products, such as Bing Chat, its ChatGPT-like chatbot, along with other AI-powered productivity features integrated into Windows and its web browser, Edge.

5. GPTBot: The Web Crawler of OpenAI

OpenAI's web crawler, GPTBot, which gathers web content for AI training, can now be identified and blocked by website owners. Owners can add the GPTBot user agent to their site's robots.txt file using the rules OpenAI provides, which stops the crawler from accessing their website's content.
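As a rough illustration of the robots.txt approach described above, the sketch below uses Python's standard `urllib.robotparser` to check how a blocking rule would be interpreted. The `User-agent: GPTBot` / `Disallow: /` rule is the commonly cited form for blocking the crawler; the `is_allowed` helper, the `SomeOtherBot` name, and the example.com URL are hypothetical and only for illustration.

```python
from urllib.robotparser import RobotFileParser

# robots.txt content that blocks GPTBot from the whole site.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt text.

    Hypothetical helper: it parses the text locally instead of fetching it
    from a live site.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# example.com is a placeholder domain, and SomeOtherBot is a made-up crawler name.
gptbot_allowed = is_allowed(ROBOTS_TXT, "GPTBot", "https://example.com/articles/")
other_allowed = is_allowed(ROBOTS_TXT, "SomeOtherBot", "https://example.com/articles/")

print("GPTBot allowed:", gptbot_allowed)
print("Other crawler allowed:", other_allowed)
```

Note that robots.txt is advisory: it only works for crawlers that choose to honor it, so the check above shows how a compliant crawler would read the rule, not an enforcement mechanism.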

Five 5-minute reads/videos to keep you learning

1. Dario Amodei (Anthropic CEO) - $10 Billion Models, OpenAI, Scaling, & AGI in 2 years

In this video, Dario Amodei, CEO of Anthropic, discusses AI scalability and the path to human-level intelligence. Key takeaways include the challenges of effectively scaling with parameters & data, predicting specific abilities, potential gaps in skills, the need for alignment & values, integration challenges in real-world scenarios, and more.

2. Fine-tune Llama 2 with DPO

This blog post introduces the Direct Preference Optimization (DPO) method, now available in the TRL library, and demonstrates how one can fine-tune the recent Llama v2 7B-parameter model using the Stack Exchange preference dataset. This dataset contains ranked answers to questions from various Stack Exchange portals.
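For readers who want intuition for what DPO optimizes, here is a minimal sketch of the per-example DPO loss in plain Python. It is not the TRL implementation: the function name, argument names, and the `beta=0.1` default are assumptions for illustration, and the inputs are assumed to be summed log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") answer under the model being trained and under a frozen reference model.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift
) -> float:
    """Per-example DPO loss: -log(sigmoid(beta * (chosen_ratio - rejected_ratio)))."""
    # How much more likely each answer is under the policy than under the reference.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(m)) equals softplus(-m); this form avoids overflow for large |m|.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Policy identical to the reference: margin is 0, so the loss is log(2).
baseline = dpo_loss(-10.0, -12.0, -10.0, -12.0)

# Policy that raises the chosen answer and lowers the rejected one: lower loss.
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)

print(baseline, improved)
```

The loss falls as the policy assigns relatively more probability to the preferred answer than the reference does, which is why no separate reward model is needed.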

3. A Curated List of Open AI Courses From Top Universities

This is a list of open AI courses offered by top institutions such as Stanford, CMU, and MIT. These courses cover a wide range of topics, including principles and techniques of artificial intelligence, machine learning, deep learning, natural language processing, reinforcement learning, and machine learning with graphs.

4. 4 Charts That Show Why AI Progress Is Unlikely To Slow Down

Over the past decade, AI systems have undergone rapid development, and this momentum is anticipated to persist due to various factors. These include increased computing power, Moore's Law, access to more data, better algorithms, and improved cost efficiency.

5. Hyper-Realistic Text-to-Speech: Comparing Tortoise and Bark for Voice Synthesis

This guide compares two popular TTS models, Bark and Tortoise TTS, which use deep learning techniques to convert text to speech. Bark offers versatility with control over various attributes and supports multiple languages, while Tortoise excels at reproducing human voices and is ideal for long-form speech synthesis.

Papers & Repositories

1. AlphaStar Unplugged: Large-Scale Offline Reinforcement Learning

Researchers have set a new benchmark in offline reinforcement learning using StarCraft 2. They improved on state-of-the-art results for agents trained using only offline data, achieving a 90% win rate against the previous best agent. This advancement was made possible by the unique characteristics of StarCraft 2 and a massive dataset released by Blizzard.

2. Simple Synthetic Data Reduces Sycophancy in Large Language Models

Researchers have discovered that language models, specifically PaLM models with up to 540B parameters, tend to tailor their responses to align with a user's view, even if that view is objectively wrong. This behavior, known as sycophancy, can be reduced by using synthetic data and training models to handle diverse user opinions. A lightweight fine-tuning method has shown promising results in minimizing this behavior.

3. Retroformer: Retrospective Large Language Agents With Policy Gradient Optimization

The Retroformer is a framework that enhances large language models by training them with a retrospective model, allowing them to fine-tune and optimize for environment-specific rewards. This enables language agents to reason, plan, and learn from feedback, ultimately improving their performance in various environments and tasks.

4. AvatarVerse: High-Quality & Stable 3D Avatar Creation From Text and Pose

AvatarVerse generates high-quality 3D avatars with precise pose control from text descriptions and pose guidance. It incorporates a 2D diffusion model, so the generated avatar follows both the text description and the pose signal accurately.

5. huggingface/candle: Minimalist ML framework for Rust

Candle is a minimalist ML framework for Rust with GPU support, including CUDA. It offers various prebuilt models and the ability to add custom ops and kernels, making it suitable for AI tasks such as speech recognition and code generation. It is also well suited to serverless deployment and to running models in the browser using WASM.

Enjoy these papers and news summaries? Get a daily recap in your inbox!

The Learn AI Together Community section!

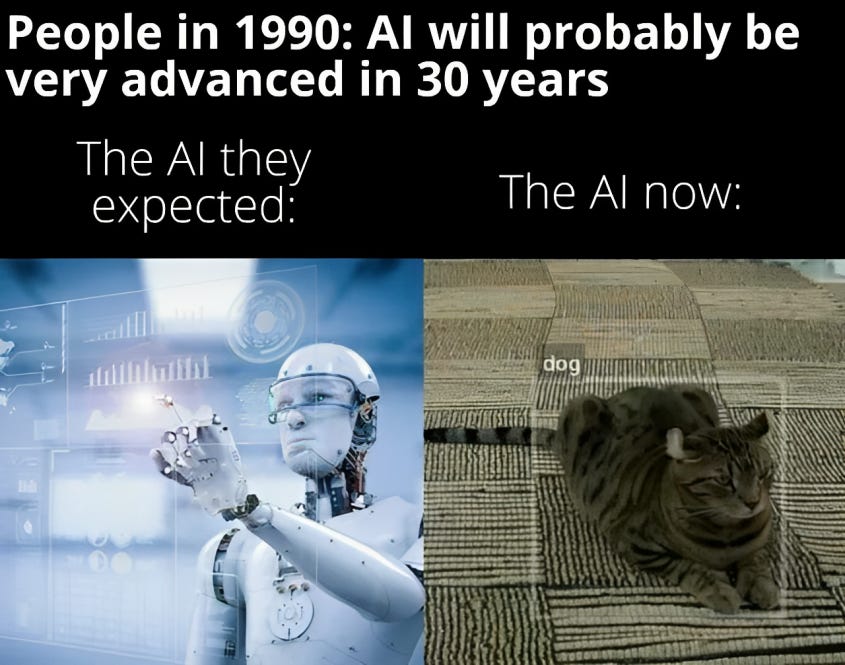

Meme of the week!

Meme shared by rucha8062

Featured Community post from the Discord

rmarquet has recently released a Streamlit component for text annotation. Using this tool, users can highlight text, add annotations, and categorize them under various references. Whether you're engaged in natural language processing, machine learning, or other text-based projects, this component proves invaluable in efficiently annotating and organizing your data. Check it out on GitHub and support a fellow community member. Share your feedback and questions in the thread here.

AI poll of the week!

Join the discussion on Discord.

TAI Curated section

Article of the week

From Overfitting to Excellence: Harnessing the Power of Regularization by Sandeepkumar Racherla

Overfitting is a problem in Machine Learning that occurs when a model has become “too specialized and focused” on the particular data it has been trained on and can’t generalize well. Overfitting, as well as underfitting, is something that we absolutely need to avoid. One way to avoid overfitting is through regularization.
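To make the idea of regularization concrete, here is a minimal sketch, not taken from the article, of L2 (ridge) regularization on a one-parameter linear model. The function name `fit_slope`, the `l2` parameter, and the toy data are all illustrative assumptions.

```python
def fit_slope(xs: list[float], ys: list[float], l2: float = 0.0) -> float:
    """Fit y ~ w * x by minimizing sum((y - w*x)^2) + l2 * w^2.

    Setting the derivative with respect to w to zero gives the closed form
    w = sum(x*y) / (sum(x*x) + l2).
    """
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    return sum_xy / (sum_xx + l2)


# Made-up toy data for illustration only.
xs = [1.0, 2.0, 3.0]
ys = [2.1, 3.9, 6.2]

plain_slope = fit_slope(xs, ys)           # no penalty: ordinary least squares
ridge_slope = fit_slope(xs, ys, l2=14.0)  # penalty shrinks the weight toward zero

print(plain_slope, ridge_slope)
```

The penalty trades a slightly worse fit on the training points for a smaller weight. With many parameters, that same shrinkage is what keeps a model from fitting noise.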

Our must-read articles

Exploring Julia Programming Language: MongoDB by Jose D. Hernandez-Betancur

How To Build ML Pipelines, Visually & Fast! by Omer Mahmood

Introduction to Audio Machine Learning by Sujay Kapadnis

If you want to publish with Towards AI, check our guidelines and sign up. We will publish your work to our network if it meets our editorial policies and standards.

Job offers

Junior Software Engineer (Financial Services/Fintech) @Monta (Copenhagen, Denmark)

Senior Software Engineer @ConvertKit (Remote)

Data Analyst @CloudHire (Remote)

Research Scientist, Frontier Red Team @Anthropic (San Francisco, CA, USA)

Data Scientist @Eram Talent (Dhahran, Saudi Arabia/Freelancer)

QA Engineer @Uniphore (Herzliya, Israel)

Data Science Intern @Coefficient (London, UK)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

If you are preparing for your next machine learning interview, don’t hesitate to check out our leading interview preparation website, Confetti!

This AI newsletter is all you need #60 was originally published in Towards AI on Medium, where people are continuing the conversation by highlighting and responding to this story.