TAI #177: Can AI Introspect? Anthropic’s New Study Offers a Glimmer of Self-Awareness

Also, OpenAI’s for-profit transition, a $700bn capex surge, and new open-weight models from China.

What happened this week in AI by Louie

The AI industry’s relentless pace of commercialization was again on full display this week. OpenAI has officially completed its corporate transition and paved the way for a future IPO, with the OpenAI Foundation now in control of a simplified, for-profit public benefit corporation. OpenAI also continued its deal-making, securing $250 billion of GPU rentals from Microsoft and $38 billion from Amazon. Simultaneously, Big Tech earnings calls signaled yet another escalation in AI capital expenditure, which is now expected to surpass $700 billion across the industry in 2026 (including an increasing amount via third-party data center developers). We also saw a flurry of ambitious new open-weight models from China, including MiniMax M2 and Kimi’s Linear LLM, as well as new custom coding models from Windsurf and Cursor that also look to utilize Chinese open-weight models.

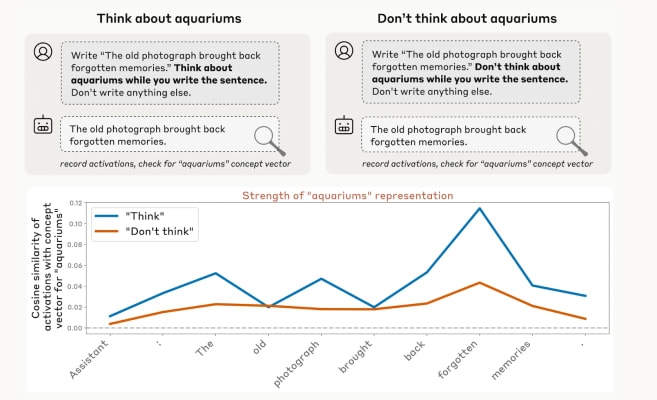

Amid this frenzy of investment and releases, we were particularly interested in a new study from Anthropic on AI introspection. We are big fans of the interpretability work at Anthropic and think other labs are underinvesting in this area. This latest research offers some of the first causal evidence that models like Claude can, to a limited extent, monitor their own internal states. The core of their experiment is a technique called “concept injection.” Researchers first identify the neural activity pattern, or vector, for a specific concept, such as “ALL CAPS.” They then inject this vector into the model’s activations while it’s processing an unrelated prompt and ask the model if it notices anything unusual.
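To make the mechanics concrete, here is a toy sketch of concept injection. It assumes the concept vector is estimated as the difference between average activations on prompts with and without the concept, which is a common steering-vector recipe and not necessarily Anthropic’s exact procedure. The function names and the three-dimensional “activations” are hypothetical and made up for illustration.

```python
from statistics import fmean

def mean_vector(rows):
    """Element-wise mean of equal-length activation vectors."""
    return [fmean(col) for col in zip(*rows)]

def concept_vector(with_concept, without_concept):
    """Difference of means: a simple estimate of a concept direction (assumed recipe)."""
    pos = mean_vector(with_concept)
    neg = mean_vector(without_concept)
    return [p - n for p, n in zip(pos, neg)]

def inject(activation, vector, strength):
    """Add the scaled concept vector to one activation vector."""
    return [a + strength * v for a, v in zip(activation, vector)]

# Toy 3-dimensional "activations" (made-up numbers, not real model data).
caps_prompts = [[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]]
plain_prompts = [[0.0, 0.0, 2.0], [0.0, 0.0, 2.0]]

all_caps = concept_vector(caps_prompts, plain_prompts)
# The strength value is the knob with a "sweet spot": too low goes unnoticed, too high distorts output.
steered = inject([0.5, 0.5, 0.5], all_caps, strength=2.0)
print(all_caps, steered)
```

In a real model, the injection would be applied to a chosen layer’s hidden state during the forward pass, and the model would then be asked whether it notices anything unusual.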

The results are surprising. In successful trials, the model reports detecting an “injected thought” related to “LOUD” or “SHOUTING” before its external behavior is obviously biased by the concept. This suggests the model is genuinely noticing an anomaly in its own internal processing. However, this capability is highly unreliable; even their best-performing model, Claude Opus 4.1, only demonstrated this kind of awareness in about 20% of trials. The effect also depends on a “sweet spot” for injection strength—too weak and the model doesn’t notice, too strong and it produces hallucinations. One of the more peculiar findings was that “helpful-only” variants of some models performed better at introspection than their production counterparts, suggesting that the fine-tuning process can either elicit or suppress this ability.

In another clever experiment, researchers tested if a model could check its own “intentions.” They forced a model to output an out-of-context word, like “bread,” and then asked if it was an accident. Normally, the model would apologize. But when they retroactively injected the “bread” concept into the model’s earlier activations—making it seem as if the model had been “thinking” about bread all along—the model changed its story, claiming it had meant to say “bread” and even confabulating a plausible reason. This suggests a process more complex than the model re-reading its output; it appears to be referring back to its own prior neural activity to judge its actions.

Why should you care?

While any study that verges on the subject of consciousness is bound to stir controversy, the practical implications of this work are what stand out. The fact that a model can, even unreliably, detect a foreign signal in its own thought process is a significant finding. It opens up the possibility of building systems where models can self-audit for anomalies, prompt injections, and intent/output mismatches. The next step is seeing whether a model can learn to recognize the internal state that precedes a hallucination or an off-policy answer. If so, it could flag uncertainty, switch to a cite-only mode, or even refuse to answer—a concrete step toward greater reliability.

This research also provides a mental model for how to work with these systems. A deeper understanding of what happens inside these black boxes has immense practical value for building with and using these models more effectively. LLMs aren’t simple input-output machines; they maintain internal representations and, to some extent, their own processing state. The study found that models could even exert a degree of control over these states when instructed. This suggests our instructions can shape both the model’s output and its internal “focus.” Introspection, for example, could be another promising avenue for an AI to identify when it is hallucinating, versus when it is reciting memorized knowledge, versus when it is sourcing information from its context.

For anyone building complex agents or workflows, that’s a crucial insight: we can design prompts, system policies, and agent loops that guide the model’s internal reasoning process, not just its final answer. The capability is nascent and fragile, but the fact that the most capable models performed best—and that post-training settings matter—suggests it may firm up over time. Treat introspection as a weak but useful signal to gate risky actions and debug failures. The ability to ask an AI what it’s thinking, and get a somewhat truthful answer, could become a practical tool for building safer and more transparent systems.
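As a rough illustration of using introspection as a weak signal in an agent loop, the sketch below treats a model’s self-reported anomaly as a reason to escalate, never as proof that a step is safe. The `Step` fields, the `gate` function, and the three outcomes are hypothetical names chosen for this example, not part of any existing framework.

```python
from dataclasses import dataclass

@dataclass
class Step:
    action: str
    risky: bool                  # e.g. deletes data, sends email, spends money
    self_reported_anomaly: bool  # model said something felt "off" (hypothetical signal)

def gate(step: Step) -> str:
    """Return 'run', 'review', or 'block' for a proposed agent step."""
    if step.self_reported_anomaly and step.risky:
        return "block"
    # A missing flag is not evidence of safety, so risky steps still get reviewed.
    if step.self_reported_anomaly or step.risky:
        return "review"
    return "run"

print(gate(Step("summarize file", risky=False, self_reported_anomaly=False)))
print(gate(Step("send payment", risky=True, self_reported_anomaly=True)))
```

Because detection rates in the study were low, the design only ever tightens controls when the flag is raised and never loosens them when it is absent.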

— Louie Peters — Towards AI Co-founder and CEO

We are super excited to share another guest post, this time in ‘The System Design Newsletter’ with Neo Kim, covering everything you need to know to understand how LLMs like ChatGPT actually work.

It is a plain-English field guide to the vocabulary and foundations of this field. You don’t need the math, the specific prompts, or the coding skills; you just need to know what these terms mean in practice so you can get better results, spot failure modes, and make sensible choices about models and settings.

Read the full article here:

Hottest News

1. AnySphere Released a New Version of Cursor and Its First Proprietary Model

AnySphere has released Cursor 2.0 alongside Composer, its first in-house “frontier” coding model, designed for low-latency, agentic programming workflows. It is advertised as roughly four times faster than similarly capable models, with most interactions completing in under 30 seconds. Composer is trained with tools such as codebase-wide semantic search to better handle large repositories. Cursor 2.0 introduces a multi-agent interface that allows developers to run multiple agents in parallel, review and test their changes more easily, and even compare multiple models against the same problem before selecting the best result.

2. Anthropic Research on LLM Introspection

Anthropic’s new interpretability study on Claude models presents evidence that advanced language models can sometimes detect and report on their own internal states when probed with “concept injection.” In one test, a model noticed an injected representation of “all caps” before it obviously influenced the output; in another, injecting a concept retroactively changed whether the model treated a prefilled, out-of-context word as intentional. Introspective behavior remains unreliable (Claude Opus 4.1 shows this kind of awareness in only about 20% of trials), but the most capable models tested, Claude Opus 4 and 4.1, perform best. That suggests introspection could improve with scale and potentially become a tool for debugging, safety, and transparency, even as the authors caution that it does not imply human-like consciousness.

3. MiniMax Releases MiniMax M2

MiniMax has open-sourced and commercially launched MiniMax M2. This 230B-parameter MoE model activates only around 10B parameters per token, targeting end-to-end coding and agentic workflows with near-frontier quality at a fraction of the usual cost. The company positions M2 as a model “born for Agents and code,” highlighting strong tool use and deep search, competitive coding performance versus leading overseas models, and API pricing set at roughly 8% of Claude Sonnet 4.5’s while running about twice as fast. The model is available via API, as a free-for-now MiniMax Agent product, and as open-weight checkpoints on Hugging Face for local deployment with vLLM or SGLang.

4. Moonshot AI Releases Kimi Linear

Moonshot AI has unveiled Kimi Linear, a 48B-parameter hybrid linear-attention architecture with approximately 3B activated parameters per forward pass, aiming to replace traditional full attention in large language models. Built around Kimi Delta Attention (a refined Gated DeltaNet variant) and interleaving three linear-attention layers with one full-attention layer, Kimi Linear reportedly matches or outperforms strong full-attention baselines across short- and long-context tasks and RL-style post-training, while cutting KV-cache memory use by up to 75% and achieving up to 6× higher decoding throughput at 1M-token context. The team has also open-sourced kernels, vLLM support, and model checkpoints.
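The 75% figure follows fairly directly from the 3:1 layer ratio. The back-of-the-envelope sketch below assumes that only full-attention layers keep a KV cache that grows with context, and that the fixed-size state of the linear-attention layers is small enough to ignore. The layer count, head count, and head dimension are hypothetical values picked for illustration, not Kimi Linear’s actual configuration.

```python
def kv_cache_bytes(full_attn_layers, tokens, kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for the full-attention layers."""
    # Factor of 2 covers one key tensor and one value tensor per layer.
    return 2 * full_attn_layers * tokens * kv_heads * head_dim * bytes_per_value

# Hypothetical shape, chosen only to make the ratio visible.
LAYERS, TOKENS, KV_HEADS, HEAD_DIM = 32, 1_000_000, 8, 128

baseline = kv_cache_bytes(LAYERS, TOKENS, KV_HEADS, HEAD_DIM)
hybrid = kv_cache_bytes(LAYERS // 4, TOKENS, KV_HEADS, HEAD_DIM)  # 1 in 4 layers is full attention
saving = 1 - hybrid / baseline

print(f"baseline: {baseline / 1e9:.1f} GB, hybrid: {hybrid / 1e9:.1f} GB, saving: {saving:.0%}")
```

Whatever the real dimensions are, the saving depends only on the share of layers that still use full attention, which is why the reported reduction is “up to” 75%.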

5. OpenAI Completes Its For-Profit Recapitalization

OpenAI has completed a major recapitalization that simplifies its structure by placing a newly named OpenAI Foundation firmly in control of the for-profit business, now organized as OpenAI Group PBC. Under the new structure, the OpenAI Foundation will own 26% of the for-profit, with a warrant for additional shares to be granted if the company continues to grow. Microsoft, an early investor in OpenAI, will hold a roughly 27% stake, valued at about $135 billion, with the remaining 47% held by investors and employees.

6. GPT-5 Pro Suggests Novel Drug Repurposing

In a widely discussed medical case, immunologist Derya Unutmaz reported that OpenAI’s GPT-5 Pro independently suggested repurposing the IL-4Rα-blocking drug dupilumab to treat a patient with food protein–induced enterocolitis syndrome (FPIES), a rare food allergy considered effectively untreatable, with the same treatment later supported by a peer-reviewed study. While clinicians stress that such AI-generated hypotheses still require rigorous human-led validation and trials, the episode is being cited as an early example of LLMs acting as scientific collaborators capable of surfacing plausible therapeutic strategies in minutes.

7. IBM AI Team Releases Granite 4.0 Nano Series

IBM has launched Granite 4.0 Nano, a family of ultra-small language models (350M and ~1.5B parameters in both hybrid-SSM and transformer variants) designed for edge and on-device use. All models are released under Apache 2.0 and support runtimes such as vLLM, llama.cpp, and MLX. Trained on over 15T tokens with the same upgraded pipelines as the wider Granite 4.0 family, these models carry IBM’s ISO 42001 certification for responsible development and reportedly outperform similarly sized models such as Qwen and Gemma across knowledge, math, code, safety, and agentic benchmarks like IFEval and Berkeley’s function-calling leaderboard.

8. OpenAI Launches Aardvark Agent

OpenAI has introduced Aardvark, a GPT-5–powered “agentic security researcher” that continuously analyzes codebases to build threat models, scans new commits, validates suspected vulnerabilities in sandboxed environments, and proposes patches via integration with OpenAI Codex and GitHub workflows. In internal and partner testing, Aardvark reportedly identified 92% of known and synthetic vulnerabilities in benchmark repositories and has already uncovered multiple issues in open-source projects, including at least ten that received CVE identifiers. The system is now available in a private beta, focusing on improving detection accuracy and the developer experience.

Five 5-minute reads/videos to keep you learning

1. Training your reasoning models with GRPO: A practical guide for VLMs Post Training with TRL

To enhance the reasoning capabilities of Vision-Language Models (VLMs), this article details the application of Group Relative Policy Optimization (GRPO). This reinforcement learning method is used for post-training models, encouraging them to generate an explicit thought process before providing a final answer. It explains the GRPO algorithm, which relies on reward functions to evaluate both output format and accuracy. A practical code walkthrough shows how to implement this technique using Hugging Face’s TRL library and LoRA for efficient fine-tuning on a Qwen2 model, aiming to improve model transparency in complex reasoning tasks.
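For readers new to GRPO, the core idea can be sketched in a few lines: score several completions for the same prompt, then judge each one against its own group. The sketch below is not the article’s code and does not use TRL. The tag format, the 0.5 and 1.0 reward weights, and the use of population standard deviation are all assumptions made for illustration.

```python
import re
from statistics import fmean, pstdev

def reward(completion: str, answer: str) -> float:
    """Format reward (0.5) plus accuracy reward (1.0); the weights are assumptions."""
    match = re.fullmatch(r"<think>.+</think>\s*<answer>(.+)</answer>", completion, re.DOTALL)
    if match is None:
        return 0.0  # wrong format earns nothing, even if the answer is right
    return 0.5 + (1.0 if match.group(1).strip() == answer else 0.0)

def group_advantages(rewards, eps=1e-6):
    """Normalize each reward against its own group's mean and spread."""
    mean, std = fmean(rewards), pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Four sampled completions for one prompt whose correct answer is "4".
completions = [
    "<think>2 + 2 is 4</think><answer>4</answer>",
    "<think>maybe 5</think><answer>5</answer>",
    "4",
    "<think>count up</think> <answer>4</answer>",
]
rewards = [reward(c, "4") for c in completions]
advantages = group_advantages(rewards)
print(rewards, advantages)
```

Completions that beat their group’s average get a positive advantage and are reinforced, so no separate value model is needed.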

2. Agent Context Engineering: Teaching AI Systems to Build Their Own Playbooks

To address the limitations of traditional AI adaptation, a framework known as Agentic Context Engineering (ACE) enables systems to learn by evolving their context rather than retraining model weights. This approach treats context as a structured, expanding playbook, using a three-part architecture of a Generator, Reflector, and Curator to process tasks, analyze results, and integrate lessons. By applying incremental updates, ACE avoids the information decay common in other methods. The framework has demonstrated improved performance on complex benchmarks, presenting an efficient and interpretable alternative for continuous AI improvement.

3. MLOps Mastery: Seamless Multi-Cloud Pipeline with Amazon SageMaker and Azure DevOps

This article outlines an effective multi-cloud MLOps strategy for enterprises leveraging both AWS and Azure. It combines Azure DevOps for CI/CD orchestration and code governance with Amazon SageMaker for the complete machine learning lifecycle. The workflow begins in Azure DevOps, which triggers a SageMaker pipeline in a development AWS account for training and registration. A key feature is the secure promotion of the validated model across separate staging and production accounts for deployment. This approach emphasizes security through services like AWS IAM and KMS while enabling continuous monitoring for production stability and compliance.

4. How to Use Sparse Vectors to Power E-commerce Recommendations With Qdrant

Sparse vector retrieval offers a method for improving e-commerce search by combining the keyword precision of lexical search with the semantic understanding of dense models. The article details the implementation of this technique using the Qdrant vector database and highlights sparse neural models such as SPLADE. It compares retrieval methods, showing how sparse vectors deliver more relevant results. Furthermore, it proposes a hybrid approach that fuses sparse and dense vectors to build a robust, multilingual search system, resulting in more precise and context-aware product discovery for a global user base.
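A minimal sketch of the hybrid idea, written in plain Python without the Qdrant client: score documents with a sparse dot product, rank them again with dense similarity, and merge the two rankings. Reciprocal rank fusion is used here as one common way to fuse rankings, which may differ from the article’s exact approach. The token ids, weights, and dense scores are made up.

```python
def sparse_dot(query, doc):
    """Dot product of two sparse vectors stored as {token_id: weight}."""
    return sum(w * doc[t] for t, w in query.items() if t in doc)

def rank(scores):
    """Document ids sorted from best to worst score."""
    return sorted(scores, key=scores.get, reverse=True)

def rrf(rankings, k=60):
    """Reciprocal rank fusion: sum 1 / (k + rank) over each ranking."""
    fused = {}
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + position)
    return rank(fused)

query = {101: 1.2, 205: 0.8}  # hypothetical token ids for "red" and "shoes"
docs = {
    "red-shoes": {101: 0.9, 205: 0.7},
    "red-hat": {101: 0.9},
    "blue-shoes": {205: 0.7, 310: 0.4},
}
sparse_scores = {d: sparse_dot(query, v) for d, v in docs.items()}
dense_scores = {"blue-shoes": 0.85, "red-shoes": 0.81, "red-hat": 0.40}  # made-up similarities

sparse_ranking = rank(sparse_scores)
fused = rrf([sparse_ranking, rank(dense_scores)])
print(sparse_ranking, fused)
```

The document that does well in both rankings rises to the top, even though the dense model alone preferred a different product.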

5. Tree-GRPO Cuts AI Agent Training Costs by 50% While Boosting Performance

A new method, Tree-Group Relative Policy Optimization (Tree-GRPO), addresses the high cost and inefficiency of training AI agents for multi-step tasks. Instead of sampling independent action chains, it builds a tree structure where branches share common starting points. This allows the model to learn from step-by-step decisions by comparing sibling branches, effectively creating detailed process supervision from only final outcome rewards. Results show this approach reduces training costs by about half while simultaneously boosting performance, particularly on complex question-answering and web navigation tasks. The method also demonstrates significant benefits for smaller models.
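The sibling-comparison idea can be sketched with a toy tree in which only the leaves carry a final reward. This is a simplified illustration, not the paper’s algorithm: it assumes a branch’s value is the average of its children’s values and that each branch’s advantage is its value minus the average across its siblings. The node names and rewards are hypothetical.

```python
from statistics import fmean

def value(node):
    """Leaf: its final reward. Inner node: average of its children's values."""
    if "reward" in node:
        return node["reward"]
    return fmean([value(child) for child in node["children"]])

def sibling_advantages(node, out=None):
    """Score every branch against the average of its siblings."""
    out = {} if out is None else out
    children = node.get("children", [])
    if children:
        values = [value(c) for c in children]
        baseline = fmean(values)
        for child, v in zip(children, values):
            out[child["name"]] = v - baseline
            sibling_advantages(child, out)
    return out

# Two branches share the same starting question; only the leaves have outcome rewards.
tree = {"name": "question", "children": [
    {"name": "search-A", "children": [
        {"name": "A-answer-1", "reward": 1.0},
        {"name": "A-answer-2", "reward": 0.0},
    ]},
    {"name": "search-B", "children": [
        {"name": "B-answer-1", "reward": 0.0},
        {"name": "B-answer-2", "reward": 0.0},
    ]},
]}
advantages = sibling_advantages(tree)
print(advantages)
```

Even though rewards exist only at the leaves, the intermediate step `search-A` receives credit relative to `search-B`, which is the step-level signal the summary describes.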

Repositories & Tools

1. Glyph is a framework for scaling the context length through visual-text compression.

Top Papers of The Week

1. Do Generative Video Models Understand Physical Principles?

This paper presents Physics-IQ, a comprehensive benchmark dataset that can only be solved by acquiring a deep understanding of various physical principles, including fluid dynamics, optics, solid mechanics, magnetism, and thermodynamics. It finds that across a range of current models (Sora, Runway, Pika, Lumiere, Stable Video Diffusion, and VideoPoet), physical understanding is severely limited and unrelated to visual realism.

2. DRBench: A Realistic Benchmark for Enterprise Deep Research

This paper introduces DRBench, a benchmark and runnable environment for evaluating “deep research” agents on open-ended enterprise tasks that require synthesizing facts from both public web and private organizational data into properly cited reports. Unlike web-only testbeds, DRBench stages heterogeneous, enterprise-style workflows—files, emails, chat logs, and cloud storage—so agents must retrieve, filter, and attribute insights across multiple applications before writing a coherent research report.

3. Every Activation Boosted: Scaling General Reasoner to 1 Trillion Open Language Foundation

This paper introduces Ling 2.0, a reasoning-based language model family built on the principle that each activation should directly translate into stronger reasoning behavior. It is one of the latest approaches that demonstrates how to maintain activation efficiency while transitioning from 16B to 1T without rewriting the recipe. The series has three versions: Ling mini 2.0 at 16B total with 1.4B activated, Ling flash 2.0 in the 100B class with 6.1B activated, and Ling 1T with 1T total and about 50B active per token.

4. gpt-oss-safeguard Technical Report

OpenAI shares gpt-oss-safeguard, a pair of open-weight safety reasoning models that interpret developer-provided policies at inference time, classifying messages, completions, and full chats with a reviewable chain-of-thought to explain decisions. Released in research preview in 120B and 20B variants (Apache-2.0), they’re fine-tuned from GPT-OSS and evaluated in a companion technical report on baseline safety performance.

Quick Links

1. Google pulls Gemma from AI Studio after U.S. Senator Marsha Blackburn accused the AI model of fabricating accusations of sexual misconduct against her. In a Friday night post on X, Google did not reference the specifics of Blackburn’s letter, but the company said it’s “seen reports of non-developers trying to use Gemma in AI Studio and ask it factual questions.”

2. OpenAI reached a deal with Amazon to buy $38 billion in cloud computing services over the next seven years. OpenAI announced that it will immediately begin using AWS compute, with all capacity targeted for deployment by the end of 2026. The company also plans to expand further into 2027 and beyond.

Who’s Hiring in AI

Senior AI Applied Scientist @Microsoft Corporation (Redmond, WA, USA)

Junior AI Engineer (LLM Development and Technical Writing) @Towards AI Inc (Remote)

Automation Engineer (AI-Enabled Workflows) - Contract @Goodway Group (Remote)

Forward Deployed Engineer, AI @MongoDB (Remote/USA)

Summer 2026 Applied Gen AI/Data Scientist Graduate Intern @Highmark Health (Multiple US locations)

AI Automations Cloud Deployment Engineer @RTX Corporation (Remote/USA)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.