TAI #175: Anthropic's "Agent Skills" Offers a New Blueprint for Building with AI

Also, DeepMind’s Cell2Sentence-Scale discovery, Haiku 4.5, Veo 3.1, and more

What happened this week in AI by Louie

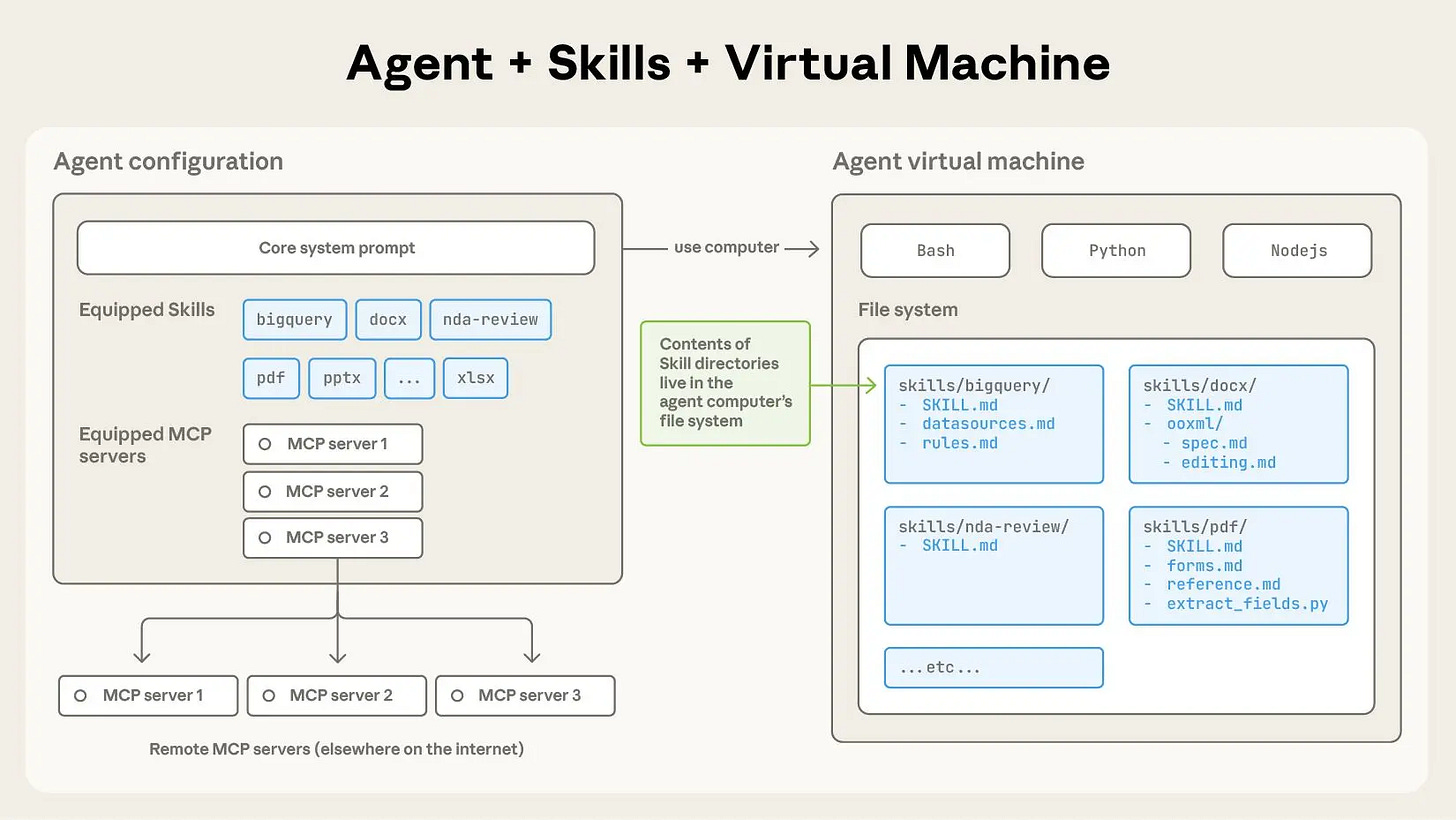

After a few weeks of platform-level announcements from OpenAI and Google, Anthropic entered the fray with a different, more granular approach to building capable agents. The company introduced Agent Skills, a new way to equip Claude with specialized, procedural knowledge using a simple system of files and folders. While OpenAI’s AgentKit focuses on visual orchestration, Anthropic’s Skills feel like a targeted, developer-first solution to one of the stickiest problems in agentic AI: how to give a model deep, reusable expertise without overwhelming its context window.

At its core, an Agent Skill is an organized folder containing instructions, scripts, and other resources that Claude can discover and load dynamically. It’s a bit like creating a detailed onboarding guide for a new hire. Instead of building fragmented, one-off agents for each task, developers can package domain knowledge into composable “expertise packages.” For example, Anthropic has pre-built skills for common document tasks like creating and editing PowerPoint, Excel, and PDF files.

Source: Anthropic

The design behind Skills is built on a principle called progressive disclosure. When an agent starts, it doesn’t load every skill in its entirety. Instead, it only loads a small piece of metadata—a name and a brief description—for each available skill. This lightweight “table of contents” allows Claude to know what skills exist and when they might be relevant. Only when a user’s request triggers a specific skill does the agent load the main instruction file. If that file references other resources, like executable scripts or detailed documentation, those are loaded on an as-needed basis. This multi-level loading mechanism means the amount of context that can be bundled into a skill is effectively unbounded, a clever workaround to the finite real estate of an LLM’s context window.

For instance, Anthropic’s PDF Skill includes a core overview, form-filling instructions in a separate file, and a Python script for extracting fields. The script is executed deterministically outside the context window, which improves efficiency and reliability. This hybrid approach, combining natural language instructions with code, tackles tasks where LLMs excel at reasoning but falter in precision, such as sorting lists or manipulating documents, and it costs less than having the model generate the result token by token.
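The “outside the context window” idea can be sketched in a few lines of Python. This is a hedged illustration, not how Claude actually runs skill scripts: the bundled script `sort_values.py` and the helper `run_skill_script` are hypothetical. The point is that the script’s source never needs to be read by the model; only its short output comes back.

```python
import json
import subprocess
import sys
import tempfile
from pathlib import Path

# Hypothetical bundled script: sorts a JSON list passed as its first argument.
SCRIPT_SOURCE = """\
import json, sys
values = json.loads(sys.argv[1])
print(json.dumps(sorted(values)))
"""


def run_skill_script(script_path: Path, payload: list) -> list:
    """Run the script in a separate process and return only its parsed stdout."""
    result = subprocess.run(
        [sys.executable, str(script_path), json.dumps(payload)],
        capture_output=True,
        text=True,
        check=True,  # raise if the script exits with an error
    )
    return json.loads(result.stdout)


# Write the demo script to a temporary folder, as if it shipped with a skill.
script_path = Path(tempfile.mkdtemp()) / "sort_values.py"
script_path.write_text(SCRIPT_SOURCE)

sorted_values = run_skill_script(script_path, [42, 7, 19, 3])
print(sorted_values)  # [3, 7, 19, 42]
```

The sort is done by ordinary code, so the result is the same on every run and does not depend on the model reproducing the list correctly token by token.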

Why should you care?

Anthropic’s Agent Skills represent a new and compelling paradigm for building with LLMs, one that blurs the line further between prompt engineering and traditional software development. A “skill” is not just a prompt; it is a version-controlled, reusable asset that can contain both natural language guidance and deterministic, executable code. This hybrid approach allows developers to use Claude for what it does best—reasoning and navigating ambiguity—while offloading precise, error-prone operations to reliable scripts.

This is a very big deal for enterprise adoption and the monetization of AI (including Anthropic’s own target to grow revenue another 5x to $26bn by the end of 2026!). The real value of AI in a business context will lie in its ability to execute specific, proprietary workflows. A company’s unique method for financial reporting, its customer support playbook, or its brand guidelines for marketing copy are all forms of procedural knowledge. Agent Skills provide a clear framework for capturing this knowledge and making it available to an AI agent. This makes the agent far more valuable and “sticky,” creating a defensible moat built on organizational expertise rather than just access to a powerful foundation model. It also opens a pathway for individuals and companies to package and potentially sell their own expertise as ready-to-use skills.

Of course, the approach is not without its challenges. The biggest is security: giving an AI agent the ability to execute code from a folder introduces significant risks if the skill comes from an untrusted source. Agent reliability also remains an issue on many (if not most) tasks. There is also a learning curve involved in designing skills that are both effective and efficient. However, the potential upside is enormous. By providing a structured, scalable way to augment a general-purpose AI with specialized knowledge, Anthropic is offering a practical blueprint for how the much-hyped “agent economy” might actually be built.

— Louie Peters — Towards AI Co-founder and CEO

Hottest News

Anthropic Introduces Agent Skills

Skills are folders of instructions, scripts, and resources that agents can discover and load dynamically to perform better at specific tasks. Claude loads them on demand, using progressive disclosure to manage context efficiently. They support code execution and bundled assets, and Anthropic recommends building them iteratively. Skills are available in Claude.ai, Claude Code, and the API.

Claude + your productivity platforms

Anthropic detailed new and expanded connectors that bring Claude into common productivity stacks (e.g., Workspace/365-style platforms) and emphasized using Agent Skills inside those environments. The idea is to reduce copy-paste, act on docs and tasks where they live, and keep enterprise controls in place.

Google’s AI Cracks a New Cancer Code.

Google DeepMind unveiled its 27-billion-parameter AI model, Cell2Sentence-Scale, which identified a drug combination enhancing cancer tumors’ immune visibility. Collaborating with Yale, DeepMind confirmed silmitasertib boosts antigen presentation in immune-active settings, marking a milestone in AI-driven biological discovery. This breakthrough paves the way for innovative cancer therapies, pending further experimentation and clinical validation.

Anthropic Introduces Claude Haiku 4.5.

Anthropic released Claude Haiku 4.5, which offers stronger coding performance at lower cost and higher speed, and surpasses Claude Sonnet 4 on specific tasks. Available via Claude Code and major platforms, it serves as a cost-efficient alternative for developers building AI applications such as chat assistants and coding agents.

Veo 3.1 and new creative capabilities in the Gemini API.

Google released Veo 3.1, offering improved video generation through the Gemini API. The model enhances audio quality, narrative control, and image-to-video capabilities. New features include reference image guidance, scene extension, and frame transition control.

DeepMind teams with CFS on fusion control AI

DeepMind announced a research partnership with Commonwealth Fusion Systems (CFS) to apply AI to plasma control in SPARC, with the ambition to help reach breakeven. The collaboration centers on using learning-based controllers to keep plasma stable at more than 100 million °C within the device’s limits.

Five 5-minute reads/videos to keep you learning

Just Talk To It - the no-bs Way of Agentic Engineering. The author emphasizes the efficiency of agentic engineering with gpt-5-codex, which automatically generates code, replacing traditional methods. Codex, preferred over Claude Code, excels in speed and context efficiency, offering advantages like message queuing and seamless processing. The author prioritizes a balance between AI and manual input, endorsing an intuitive interaction with AI to enhance software development without overcomplicating workflows.

More Articles Are Now Created by AI Than Humans. AI-generated articles on the web have surpassed human-written ones, but their share has plateaued since May 2024. Despite improvements, these articles rarely appear in search results. The researchers classified articles from CommonCrawl data using Surfer’s AI detector, for which they report a 4.2% false positive rate and a 0.6% false negative rate, and used the results to assess the prevalence of AI articles since November 2024.

The Multiplication Law of Wealth: From Compound Interest Mathematics to the Reinforcement Learning Essence of Human Behavior By Shenggang Li

This analysis presents wealth inequality as a natural outcome of mathematical laws rather than social chance. The author posits that wealth grows multiplicatively, where small, persistent advantages in returns compound into significant disparities over time. The discussion covers how factors like compounding frequency and volatility impact long-term growth. This process is compared to reinforcement learning, with financial outcomes acting as feedback that shapes behavior. The piece extends this compounding principle beyond finance to other life domains like knowledge and health, identifying it as a universal pattern for growth and decline.

How Anthropic Trained Claude Sonnet and Opus Models: A Deep Dive By M

An in-depth look at how Anthropic trained its Claude models reveals a multi-stage approach to AI alignment. The company initially used Reinforcement Learning from Human Feedback (RLHF) with Proximal Policy Optimization (PPO) to balance helpfulness and harmlessness. A significant development was “Constitutional AI,” where the model learns to self-critique based on a set of principles, reducing the need for direct human feedback. It also employed an “iterated online” training method for continuous improvements, demonstrating that this alignment training can enhance a model’s overall capabilities, not just its safety.

From Correlation to Causation: Making Marketing Mix Models Truly Useful By Torty Sivill

To make Marketing Mix Models (MMMs) more actionable, this analysis moves beyond correlation to demonstrate how causal inference can be applied. The author explains that traditional MMMs often struggle to provide clear guidance for spending decisions. The piece then details three specific methods to improve these models: using Instrumental Variables to correct for demand-reactive channels, applying counterfactual time series modeling to measure campaign lift accurately, and leveraging Directed Acyclic Graphs (DAGs) to encode business knowledge and adjust for confounders. These techniques help create more reliable models for strategic resource allocation.

Why and How We t-Test By Ayo Akinkugbe

When analyzing experimental data, it’s crucial to determine if observed differences are genuine effects or simply random noise. This guide to significance testing clarifies the role of the p-value in evaluating statistical evidence. It covers various methods, including the t-test for comparing two groups, ANOVA for multiple groups, and the Chi-square test for categorical data. The author also stresses looking beyond the p-value by using effect sizes to measure a difference’s magnitude and confidence intervals to gauge its reliability, offering a more complete approach to interpreting results.

Building End-to-End Machine Learning (ML) Lineage for Serverless ML Systems By Kuriko Iwai

To address the complexities of traceability in serverless machine learning, a method for building a complete ML lineage on AWS Lambda is detailed. The system utilizes DVC to version data, models, and predictions across a multi-stage pipeline that includes data processing, drift detection, model tuning, and fairness assessment. This entire workflow is automated for scheduled execution using Prefect, with all artifacts stored on AWS S3. The guide demonstrates how the final application can access these versioned components, ensuring reproducibility and robust tracking throughout the ML lifecycle.

Repositories & Tools

karpathy/nanochat. Andrej Karpathy’s Nanochat offers a full-stack LLM implementation similar to ChatGPT for $100, providing a customizable and hackable solution. Running on an 8XH100 node, it delivers a model with 32 layers and 1.9 billion parameters, surpassing GPT-2.

Top Papers of The Week

When Models Lie, We Learn: Multilingual Span-Level Hallucination Detection with PsiloQA. Researchers introduce PsiloQA, a large multilingual dataset identifying span-level hallucinations in language models, covering 14 languages. Created through a three-stage automated pipeline, PsiloQA evaluates hallucination detection methods and finds encoder-based models most effective.

Diffusion Transformers with Representation Autoencoders. Researchers introduced Representation Autoencoders (RAEs) for Diffusion Transformers, enhancing image generation quality. By employing pretrained representation encoders paired with trained decoders, RAEs create semantically rich latent spaces. This innovation enables faster convergence and effective operation in high-dimensional spaces.

QeRL: Beyond Efficiency - Quantization-enhanced Reinforcement Learning for LLMs. Researchers propose QeRL, a framework combining NVFP4 quantization with Low-Rank Adaptation for large language models, achieving over 1.5 times speedup in reinforcement learning rollout. QeRL enables the training of a 32B LLM on a single H100 GPU, delivering faster reward growth, higher accuracy, and effective exploration through adaptive quantization noise on benchmarks like GSM8K and MATH 500.

Spatial Forcing: Implicit Spatial Representation Alignment for Vision-language-action Model. Spatial Forcing (SF) enhances vision-language-action (VLA) models by aligning visual embeddings with geometric representations, eliminating the need for explicit 3D inputs. SF improves spatial awareness and action precision, outperforming both 2D and 3D VLAs. This technique accelerates training by up to 3.8 times and boosts data efficiency in diverse robotic tasks, achieving state-of-the-art results.

Quick News

Anthropic targets $26 billion in revenue by 2026, driven by strong demand for AI products.

Claude Code comes to the web (beta)

Anthropic introduced a browser-based Claude Code experience where you can dispatch multiple coding tasks to Anthropic-managed sandboxes and monitor progress. It integrates with GitHub, supports parallel task execution across repositories, and exposes network/file system restrictions plus allow-listing of domains.

OpenAI is relaxing mental-health-driven safeguards to restore ChatGPT’s enjoyable personality, enabling emoji use and friend-like behavior in a new version. Erotica for verified adults is planned for December, gated by age verification. This apparently balances safety with utility after the initial restrictions reduced engagement.

Karpathy explains AGI is a decade away due to LLMs’ lack of continual learning, multimodality, and memory, critiquing RL’s inefficiency and proposing human-like synthetic data generation. He discusses agent limitations and education’s future in AI. The interview also covers self-driving delays and ASI impacts.

Who’s Hiring in AI

Lead Group Product Manager, Generative AI, Google Cloud @Google (New York, USA)

Senior Human-AI Collaboration Research Engineer, Education Labs @Anthropic (San Francisco, CA, USA)

Software Engineer, Product @Meta (Multiple US Locations)

Intern - AI Enablement and Technology Transformation @Lumen (Remote/USA)

AI Research Engineer / Orlando, FL @Lockheed Martin (Orlando, FL, USA)

Senior Software Developer, AI Services @Range (Toronto, Canada)

Principal Enterprise AI Engineer @Riot Games (Los Angeles, CA, USA)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.