TAI #173: OpenAI's DevDay Deluge: Sora 2, AgentKit, and an App Store Reboot

Also, Thinking Machines Tinker for Fine-Tuning, DeepSeek V3.2, GLM-4.6, ChatGPT hits 800 million users & more

What happened this week in AI by Louie

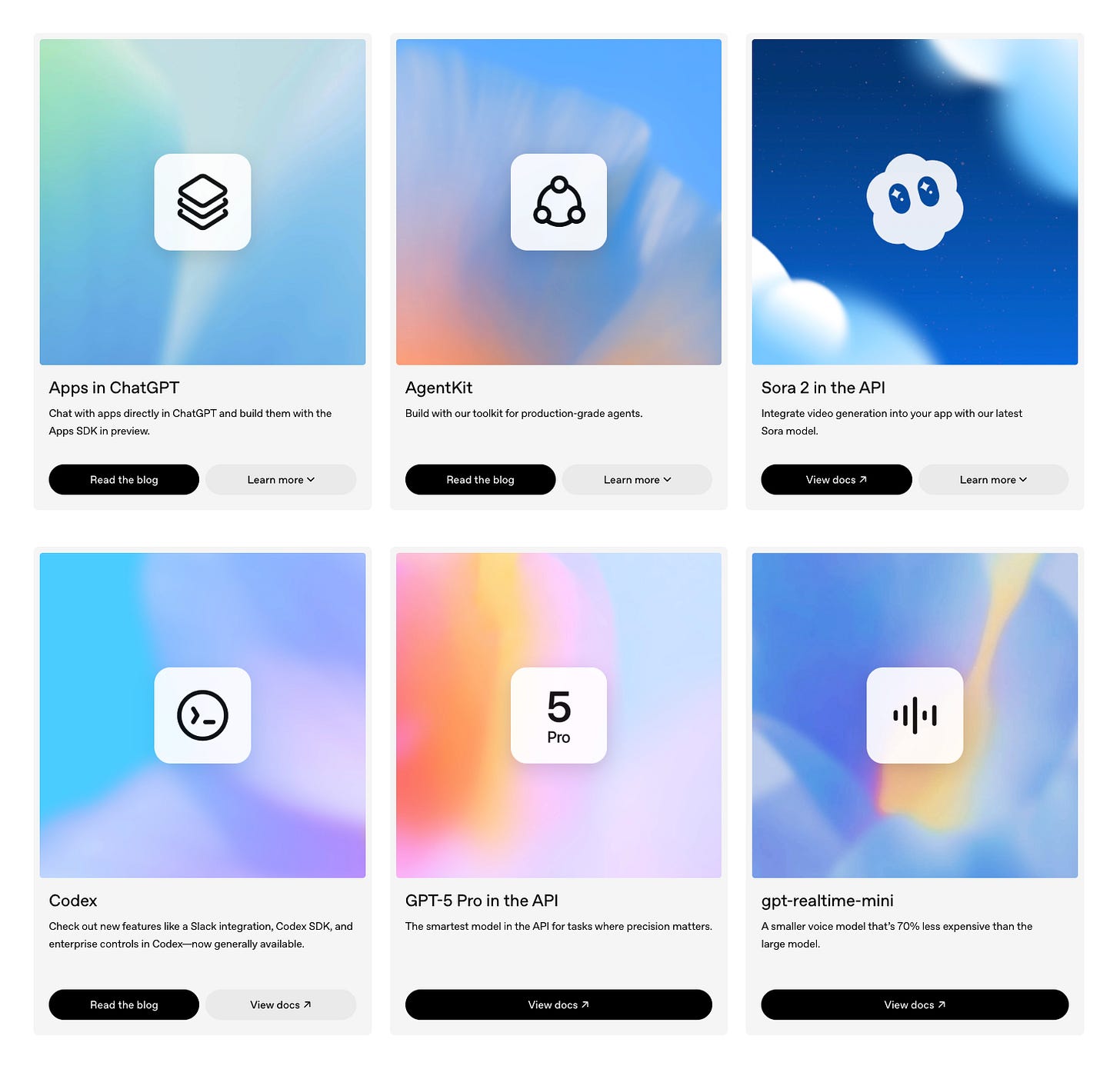

This week was dominated by a deluge of product releases from OpenAI, culminating in its 2025 DevDay. The first big splash came with the release of Sora 2, which quickly captured viral attention. While it has different strengths and weaknesses compared to Google’s Veo 3, OpenAI’s new video model is even more affordable and now also includes native audio generation. However, the main event was DevDay, where OpenAI unveiled a suite of new tools aimed at building a full-fledged ecosystem around its models, including a visual agent builder and a reboot of its platform strategy for ChatGPT.

OpenAI has a mixed record with product releases (the GPT Store, for instance, never quite gained the momentum many expected), so it’s hard to tell which of these new offerings will catch on. The scale of ambition is undeniable, however, and was underscored by new metrics revealed at DevDay: ChatGPT now has an incredible 800 million weekly active users, 4 million developers are building with the API, and the API platform is processing 6 billion tokens per minute.

The new Sora 2 model, which OpenAI is positioning as the “GPT-3.5 moment for video,” demonstrates impressive progress in creating realistic, physically plausible video with integrated sound. The API offers a faster Sora 2 model for quick iteration and a higher-quality Sora 2 Pro for production work. Pricing is aggressive: the standard 720p model costs just $0.10 per second, roughly four times cheaper than Google’s Veo 3 standard tier ($0.40/sec). This will undoubtedly accelerate the adoption of generative video in everything from memes to marketing to entertainment.

The bigger story of the week was OpenAI’s clear push to build the entire platform, not just the underlying models. DevDay introduced two pillars for this strategy. The first is AgentKit, a comprehensive toolkit featuring a visual, drag-and-drop “Agent Builder” that positions OpenAI as a direct competitor to no-code orchestration platforms like n8n. The second, and perhaps more telling, pillar is a reboot of its app strategy with “Apps in ChatGPT.” Powered by a new Apps SDK built on the open Model Context Protocol (MCP), it allows services like Spotify and Zillow to build interactive experiences directly into the chat interface: a fresh attempt at the conversational-OS vision where the GPT Store fell short.

Alongside these platform plays, OpenAI also rolled out a suite of new API models, giving developers more granular control over the cost-capability trade-off. This includes the high-end GPT-5 Pro, aimed at the most demanding reasoning tasks but at a very steep price ($15 per million input tokens, $120 per million output tokens). I am now personally using GPT-5 Pro in ChatGPT for a large proportion of my most complex AI tasks, but this price point is prohibitive for integrating it into most of our AI agent and workflow development projects.
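To see why the price matters for agent workloads, here is a small Python cost estimator using the GPT-5 Pro prices quoted above. The token counts and call volume are hypothetical, and the sketch assumes that hidden reasoning tokens are billed as output tokens.

```python
# GPT-5 Pro API list prices quoted above, in US dollars per million tokens.
INPUT_USD_PER_M = 15.0
OUTPUT_USD_PER_M = 120.0


def call_cost(input_tokens: int, output_tokens: int,
              input_price: float = INPUT_USD_PER_M,
              output_price: float = OUTPUT_USD_PER_M) -> float:
    """Dollar cost of one API call, given per-million-token prices."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical agent step: 20k tokens of context in, 5k tokens (incl. reasoning) out.
per_call = call_cost(20_000, 5_000)
print(f"${per_call:.2f} per call")                    # $0.90 per call
print(f"${per_call * 10_000:,.0f} per 10,000 calls")  # $9,000 per 10,000 calls
```

In this example, output tokens are only a fifth of the volume but two thirds of the cost, which is why long reasoning traces make the Pro tier expensive inside multi-step agent loops.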

At the other end of the spectrum, new, highly efficient models like gpt-realtime-mini promise to make production voice agents far more economical, with audio processing costs roughly 70% lower than the full gpt-realtime model.

Why should you care?

Sam Altman has played his hand. With these new products, a clear platform ambition, and an aggressive expansion plan for 23 GW of AI data centers (worth around $1.1 trillion in capital expenditure, including a new deal with AMD), the strategy is clear. His bet is that scale, both in compute access and in active users and platform developers, is the only durable moat in the AI race. He hopes scale will deliver the best models, the most inference capacity for advanced features, the most vibrant developer ecosystem, and the pull to attract top AI talent (by offering more GPUs per engineer). His broader internal target of 250 GW by 2033, which would require over $10 trillion in capital expenditure, is a gauntlet thrown down. Now it’s the turn of Google, Amazon, Elon Musk, Mark Zuckerberg, Microsoft, and China to decide whether they want to stay in the race.
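A rough back-of-the-envelope check shows how the two headline figures relate. It assumes, purely for illustration, that the roughly $1.1 trillion for the first 23 GW scales linearly; real per-gigawatt costs will certainly shift as the build-out grows.

$$
\frac{\$1.1 \times 10^{12}}{23\ \text{GW}} \approx \$4.8 \times 10^{10}\ \text{per GW},
\qquad
250\ \text{GW} \times \$4.8 \times 10^{10}\ \text{per GW} \approx \$1.2 \times 10^{13}
$$

That is roughly $12 trillion, in line with the “over $10 trillion” estimate.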

For developers and businesses, this is a double-edged sword. On one hand, the prospect of building for 800 million users via the new Apps SDK is a massive opportunity. Tools like AgentKit could also dramatically reduce the complexity and time required to build sophisticated, multi-step agentic workflows. On the other hand, by bundling these tools, OpenAI is moving into direct competition with a whole ecosystem of startups that have built businesses on top of its APIs. Developing directly on OpenAI’s new SDKs also makes it harder to integrate models from multiple AI labs into your system, and we still find that each lab’s models have different strengths and weaknesses for different tasks. The success of this platform play is far from guaranteed. Building a thriving developer ecosystem demands a sustained commitment to the product and stable monetization, areas where OpenAI has stumbled before. The use of an open standard like MCP for the new Apps SDK suggests they may have learned some lessons, but skepticism is warranted.

For now, the most immediate impact is that the tools for building complex AI systems are becoming more competitive and accessible. However, the real story is the sheer scale of the industrial mobilization that is just beginning. The contracts needed to deliver just the first 23 GW will require a vast network of partners for everything from electricity generation to cooling equipment and financing. As this build-out gets underway, we expect a response from the other AI labs, including Google DeepMind, where rumors of a new Gemini 3.0 are already circulating. The AI industrial revolution is just getting started.

— Louie Peters — Towards AI Co-founder and CEO

Hottest News

1. OpenAI Announces Sora 2

OpenAI announced Sora 2, a new video-and-audio generation model positioned as a step up in physical realism, synchronized audio, steerability, and stylistic range, alongside a system card that explains an invite-based rollout and safety constraints (e.g., tighter policies around likeness use and minors, restricted uploads, and iterative deployment). OpenAI says Sora 2 will be accessible on sora.com, via a standalone iOS app, and later through an API; the system card frames safeguards and provenance as central to the launch.

2. OpenAI Debuts Agent Builder and AgentKit

OpenAI’s AgentKit consolidates agent development into one product line: a visual Agent Builder for composing multi-agent workflows with guardrails and versioning; ChatKit for embedding customizable chat UIs; and expanded Evals with datasets, trace grading, automated prompt optimization, and support for third-party models. Reinforcement fine-tuning is generally available (GA) on o4-mini and in private beta for GPT-5, with new options for custom tool calls and graders. Agent Builder is launching in beta, while ChatKit and Evals are generally available. OpenAI notes that these features are included under standard API model pricing.

3. Thinking Machines Has Released Tinker

Thinking Machines has released Tinker, a Python API that lets researchers and engineers write training loops locally while the platform executes them on managed, distributed GPU clusters. Tinker exposes low-level primitives rather than high-level “train()” wrappers. Core calls include forward_backward, optim_step, save_state, and sample, giving users direct control over gradient computation, optimizer steps, checkpointing, and evaluation/inference within custom loops. Tinker is positioned as a managed post-training platform for open-weight models, ranging from small LLMs to large mixture-of-experts systems.
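To make “low-level primitives” concrete, here is a purely local toy in Python. The class ToyTrainingClient and its method signatures are hypothetical: they borrow the primitive names listed above, but this is not Tinker’s API, and nothing runs remotely. The point is the shape of the loop: the user decides when to compute gradients, when to step the optimizer, when to checkpoint, and when to sample.

```python
import random


class ToyTrainingClient:
    """Hypothetical local stand-in for a managed training service.

    Method names mirror the primitives described above, but the signatures
    are invented for this sketch; this is NOT Tinker's actual API.
    The "model" is a single linear function y = w * x + b.
    """

    def __init__(self, lr: float = 0.1):
        self.params = {"w": 0.0, "b": 0.0}
        self.grads = {"w": 0.0, "b": 0.0}
        self.lr = lr

    def forward_backward(self, batch):
        """Compute mean-squared error on the batch and accumulate gradients."""
        n = len(batch)
        loss = 0.0
        for x, y in batch:
            err = self.params["w"] * x + self.params["b"] - y
            loss += err * err / n
            self.grads["w"] += 2 * err * x / n
            self.grads["b"] += 2 * err / n
        return loss

    def optim_step(self):
        """Apply one plain SGD update and clear the accumulated gradients."""
        for name in self.params:
            self.params[name] -= self.lr * self.grads[name]
            self.grads[name] = 0.0

    def save_state(self):
        """Checkpoint by returning a copy of the current parameters."""
        return dict(self.params)

    def sample(self, x):
        """Run "inference" with the current parameters."""
        return self.params["w"] * x + self.params["b"]


# The user owns the loop: batching, update cadence, and checkpoint schedule.
random.seed(0)
data = [(i / 10, 3 * (i / 10) + 1) for i in range(-10, 11)]  # noiseless y = 3x + 1
client = ToyTrainingClient(lr=0.1)
checkpoints = []
for step in range(500):
    batch = random.sample(data, 8)
    loss = client.forward_backward(batch)
    client.optim_step()
    if (step + 1) % 100 == 0:
        checkpoints.append(client.save_state())

print(f"final loss {loss:.2e}, prediction at x=2: {client.sample(2.0):.3f}")
```

Separating forward_backward from optim_step is what enables custom recipes such as gradient accumulation (call forward_backward several times before one step) without the platform needing to know about them.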

4. DeepSeek Released DeepSeek-V3.2-Exp

DeepSeek has released DeepSeek-V3.2-Exp, an “intermediate” update to V3.1 that adds DeepSeek Sparse Attention (DSA), a trainable sparsification path designed for long-context efficiency. DeepSeek-V3.2-Exp keeps the V3/V3.1 stack (MoE + MLA) and inserts a two-stage attention path: (i) a lightweight “indexer” that scores context tokens, and (ii) sparse attention over the selected subset. DeepSeek also cut API prices by 50% or more, consistent with the stated efficiency gains.
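The two-stage idea can be sketched in plain Python for a single query. This is a simplified illustration, not DeepSeek’s implementation: the “indexer” here is just a dot product between small, separate index vectors (DSA’s indexer is a learned module), and the function name sparse_attention and the top_k parameter are hypothetical. The saving comes from running full attention over only top_k selected tokens instead of the whole context.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_attention(q, keys, values, q_index, key_index, top_k):
    """Two-stage attention for one query (hypothetical sketch).

    Stage 1: a cheap indexer scores every context token using small index
    vectors, and only the top_k highest-scoring positions are kept.
    Stage 2: ordinary scaled dot-product attention over that subset.
    """
    index_scores = [dot(q_index, k) for k in key_index]
    selected = sorted(range(len(keys)), key=lambda i: index_scores[i], reverse=True)[:top_k]

    scale = math.sqrt(len(q))
    weights = softmax([dot(q, keys[i]) / scale for i in selected])
    out = [0.0] * len(values[0])
    for w, i in zip(weights, selected):
        for j, v in enumerate(values[i]):
            out[j] += w * v
    return out, selected


# Toy context of six tokens; only two survive the indexer.
q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0], [0.5, 0.0], [0.0, -1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 2.0], [3.0, 3.0], [4.0, 4.0]]
q_index = [1.0]
key_index = [[1.0], [0.1], [0.9], [-1.0], [0.2], [0.0]]

out, selected = sparse_attention(q, keys, values, q_index, key_index, top_k=2)
print(selected, out)  # [0, 2] [1.0, 0.5]
```

Stage 1 still touches every token, but with much cheaper arithmetic than full attention; stage 2, where the expensive work happens, scales with top_k rather than the context length.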

5. Z.ai Launches GLM-4.6 Model

GLM-4.6 arrives with a 200K-token context window, an emphasis on agentic coding and long-context tasks, and open weights (multiple checkpoints) on Hugging Face. Zhipu highlights improved coding performance and broader tool use and agent routing, and international coverage has echoed these claims. For RAG and agent stacks that need wide context windows and local deployment, this is a notable open-weight option from a major Chinese lab.

6. Google DeepMind Shares CodeMender Agent

DeepMind unveiled CodeMender, an agent that combines Gemini’s “Deep Think” with static/dynamic analysis, fuzzing, and automated reasoning to propose and upstream real patches. Google reports that dozens of fixes have already been merged across open-source projects and describes a workflow aimed at continuous hardening rather than one-off bug suggestions.

Five 5-minute reads/videos to keep you learning

1. Beyond the Prompt: How Agentic AI Patterns Are Revolutionizing the Way We Work With LLMs

This article advocates for a shift from crafting perfect prompts to designing intelligent agentic frameworks to create more effective AI systems. It introduces five key patterns for LLMs: Reflection for self-correction, Tool Use for accessing external data, ReAct for iterative problem-solving, Planning for breaking down tasks, and Multi-Agent systems for collaborative work. Supported by code examples, the piece also addresses critical production considerations, such as security and monitoring.
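Of the five patterns, ReAct is the easiest to show in a few lines. The sketch below is a hypothetical, self-contained Python loop, not code from the article: scripted_llm stands in for a real model call and returns canned text in the common Thought / Action / Observation / Final Answer format, and the calculator tool is a toy.

```python
import re


def calculator(expression: str) -> str:
    """Toy tool. The character whitelist is a demo guard, not a security boundary."""
    if not re.fullmatch(r"[\d\s+\-*/().]+", expression):
        raise ValueError(f"unsupported expression: {expression!r}")
    return str(eval(expression))


TOOLS = {"calculator": calculator}


def scripted_llm(transcript: str) -> str:
    """Stand-in for a real model call that follows the ReAct text format."""
    observation = re.search(r"Observation: (.+)", transcript)
    if observation is None:
        return "Thought: I should compute this with a tool.\nAction: calculator[23 * 48]"
    return f"Thought: The tool returned the result.\nFinal Answer: {observation.group(1)}"


def react(question: str, llm, max_steps: int = 5) -> str:
    """Alternate model reasoning and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += reply + "\n"
        final = re.search(r"Final Answer:\s*(.+)", reply)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*)\]", reply)
        if action:
            tool_name, argument = action.groups()
            transcript += f"Observation: {TOOLS[tool_name](argument)}\n"
    return "no final answer within the step budget"


print(react("What is 23 * 48?", scripted_llm))  # 1104
```

The max_steps budget is the simplest production safeguard for this pattern: it bounds cost and latency if the model never emits a final answer.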

2. Petri: An Open-Source Auditing Tool To Accelerate AI Safety Research

In this article, Anthropic presents Petri (Parallel Exploration Tool for Risky Interactions), its new open-source tool that lets researchers explore hypotheses about model behavior. Petri deploys an automated agent to test a target AI system through diverse multi-turn conversations involving simulated users and tools, then scores and summarizes the target’s behavior. The article outlines the tool’s functionality, how it works, and the results of the pilot tests.

3. Activation Steering: The Zero-Training Revolution That’s Making AI Models Actually Listen

This article presents Activation Steering, a method for controlling AI model behavior in real time without the costly process of fine-tuning. The technique addresses issues like hallucination by creating a “steering vector” from the difference in neural activations between desired and undesired outputs. This vector is then applied during inference to guide the model toward better context adherence and truthfulness. The article outlines two specific methods, the Options and Contrastive approaches, demonstrating a practical, training-free way to improve the reliability and precision of AI responses.
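The core arithmetic of a difference-of-means steering vector fits in a few lines. This is a hedged sketch, not the article’s code: activations are plain Python lists standing in for hidden states captured at one layer of a model (in practice you would record them with a framework hook), and the scale alpha is a hypothetical knob you would tune.

```python
def mean_vector(vectors):
    """Element-wise mean of equally sized activation vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def steering_vector(desired_acts, undesired_acts):
    """Difference of mean activations: points from 'undesired' toward 'desired'."""
    pos = mean_vector(desired_acts)
    neg = mean_vector(undesired_acts)
    return [p - q for p, q in zip(pos, neg)]


def steer(hidden, vector, alpha):
    """At inference time, add the scaled steering vector to a hidden state."""
    return [h + alpha * v for h, v in zip(hidden, vector)]


# Toy 3-dimensional "activations" from contrasting prompt sets.
desired = [[1.0, 0.0, 2.0], [3.0, 0.0, 2.0]]
undesired = [[0.0, 1.0, 2.0], [0.0, 3.0, 2.0]]

v = steering_vector(desired, undesired)
print(v)                               # [2.0, -2.0, 0.0]
print(steer([0.5, 0.5, 0.5], v, 0.5))  # [1.5, -0.5, 0.5]
```

Dimensions that do not differ between the two sets (the third one here) get a zero component, so steering leaves them untouched; alpha trades off how strongly the behavior shifts against how much unrelated output quality degrades.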

4. Context Engineering for LLMs: Build Reliable, Production-Ready RAG Systems

This guide discusses “Context Engineering” as a technique to build reliable, production-ready RAG systems. It covers essential techniques such as effective chunking strategies, metadata enrichment, and improving retrieval quality through hybrid search and re-ranking. It also provides a template for production-safe prompts that emphasizes structured JSON outputs, strict evidence use, and clear refusal policies to mitigate common failures, such as hallucination and context overflows.
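For the hybrid-search step, one widely used way to merge keyword and embedding results is reciprocal rank fusion (RRF), which scores each document by summing 1/(k + rank) across the rankings it appears in. The guide may use a different fusion method; the sketch below is an illustrative Python version with made-up document IDs, using k = 60, the constant commonly used for RRF.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of doc IDs (best first) into one ranking."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


bm25_results = ["doc3", "doc1", "doc7"]   # hypothetical keyword-search ranking
dense_results = ["doc1", "doc5", "doc3"]  # hypothetical embedding-search ranking
print(reciprocal_rank_fusion([bm25_results, dense_results]))
# ['doc1', 'doc3', 'doc5', 'doc7']
```

RRF only uses ranks, so it sidesteps the problem that BM25 scores and cosine similarities live on incompatible scales; a re-ranker can then be applied to the fused top results.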

5. Autogen Conversable Agent: Complete Overview

This blog provides a comprehensive overview of AutoGen’s Conversable Agent, detailing its role in creating multi-agent systems. It outlines various agent types and demonstrates, through practical code examples, how multiple agents can collaborate sequentially on complex workflows, such as generating project ideas and summaries. It also covers integrating custom tools, showing how agents can call and execute specific Python functions to perform data operations.

Repositories & Tools

1. CoDa is a 1.7B-parameter diffusion coder trained on TPU with a fully open-source pipeline.

2. GLM-4.6 is an agentic and reasoning model with improvements across real-world coding, long-context processing (up to 200K tokens), reasoning, search, writing, and agentic applications.

Top Papers of The Week

1. Pre-Training Under Infinite Compute

Researchers studied pre-training in a setting where compute is effectively unlimited but data is fixed, using much larger weight decay and ensembles of models. Properly tuned regularization reduces overfitting and improves validation loss and downstream benchmarks, including a 9% improvement on pre-training evaluations. Combining regularization, parameter scaling, and distillation into smaller models yields significant data-efficiency gains, reaching better results with fewer tokens.

2. Apriel-1.5-15B-Thinker

The paper introduces Apriel-1.5-15B-Thinker, a 15-billion-parameter multimodal reasoning model that achieves frontier-level performance with modest computational resources. Starting from Pixtral-12B, it uses progressive training (depth upscaling, staged pre-training, and text-only fine-tuning) to achieve competitive benchmark results and make multimodal reasoning accessible to organizations with limited infrastructure.

3. Self-Forcing++: Towards Minute-Scale High-Quality Video Generation

Researchers have developed a method to reduce quality degradation in long-horizon video generation without needing long-video teacher models or extensive retraining. By leveraging teacher models’ knowledge and self-sampling, the method maintains temporal consistency and generates videos up to 4 minutes and 15 seconds. This approach significantly enhances performance in both fidelity and consistency compared to baseline methods.

4. The Illusion of Readiness: Stress Testing Large Frontier Models on Multimodal Medical Benchmarks

This research shows that benchmarks vary widely in what they actually measure yet are often treated as interchangeable, which masks failure modes. By stress-testing six flagship models on six widely used benchmarks, the study shows that leaderboard scores can hide brittleness and shortcut learning. It cautions in particular that medical benchmark scores do not directly reflect real-world readiness.

5. TUMIX: Multi-Agent Test-Time Scaling With Tool-Use Mixture

This paper introduces TUMIX (Tool-Use Mixture), a test-time framework that ensembles heterogeneous agent styles (text-only, code, search, and guided variants), lets them share intermediate answers over a few refinement rounds, and then stops early via an LLM-based judge. Across tasks, TUMIX averages +3.55% over the best prior tool-augmented test-time scaling baseline at similar cost, and +7.8% and +17.4% over no scaling for Gemini-2.5-Pro and Gemini-2.5-Flash, respectively.
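The round-based loop can be sketched in a few lines of Python. This is a simplified, hypothetical illustration rather than the paper’s method: the toy agents are plain functions, the names tumix_style and make_agent are invented, and the LLM-based judge is replaced by a simple agreement rule (stop once a fixed share of agents give the same answer).

```python
from collections import Counter


def tumix_style(agents, question, max_rounds=3, agree_threshold=0.75):
    """Multi-round ensembling with early stopping (hypothetical sketch).

    agents: callables (question, shared_answers) -> answer string.
    Early stopping uses an agreement rule in place of TUMIX's LLM judge.
    """
    shared = []
    for round_idx in range(1, max_rounds + 1):
        answers = [agent(question, shared) for agent in agents]
        top, count = Counter(answers).most_common(1)[0]
        if count / len(answers) >= agree_threshold:
            return top, round_idx
        shared = answers  # every agent sees all answers in the next round
    return top, max_rounds


def make_agent(first_guess):
    """Toy agent: answers independently, then refines toward the shared majority."""
    def agent(question, shared):
        if not shared:
            return first_guess
        return Counter(shared).most_common(1)[0][0]
    return agent


agents = [make_agent(a) for a in ["42", "42", "41", "40"]]
print(tumix_style(agents, "toy question"))  # ('42', 2)
```

Early stopping is where the cost savings come from: once answers converge, further rounds add tokens without changing the result.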

Quick Links

1. Microsoft released the Microsoft Agent Framework, an open-source SDK and runtime that unifies core ideas from AutoGen (agent runtime and multi-agent patterns) with Semantic Kernel (enterprise controls, state, plugins) to help teams build, deploy, and observe production-grade AI agents and multi-agent workflows. The framework is available for Python and .NET and integrates directly with Azure AI Foundry’s Agent Service for scaling and operations.

2. OpenAI partners with AMD on GPUs. OpenAI will deploy 6 gigawatts of AMD GPUs, supplementing its NVIDIA capacity to meet inference demand. The deal supports scaling compute-heavy features like GPT-5 Pro and diversifies OpenAI’s compute supply for reliability.

Who’s Hiring in AI

AI Deployment Strategist @Mistral AI (Luxembourg)

Manager — GenAI & Agentic AI Engineering @IBM (Kochi, India)

AI Creative Specialist @RYZ Labs (Remote)

Prompt Engineer and Data Analyst @Welocalize (Remote/USA)

AI Engineer @Remofirst (Remote)

Engineer — Artificial Intelligence @PEXA Group (Melbourne, Australia)

AI Agent Engineer @Mirakl — Labs (Remote/France)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.