TAI #166: The GenAI Paradox: Superhuman Models but Mixed Success with Enterprise AI Developments

Also, GPT-5's medical leap, Mistral Medium 3.1, Claude's million-token leap, NVIDIA's Granary dataset, and more.

What happened this week in AI by Louie

Now that the dust has settled on GPT-5’s release, we’re seeing specific tasks where the new model adds substantial value, and the first wave of custom benchmark tests is starting to land. This week, two new studies offer a sharp view of where AI is already biting into high-stakes professional work: one shows GPT-5’s capabilities in complex medical reasoning, and another shows an AI voice agent outperforming humans in real-world job interviews.

First, a new paper on the capabilities of GPT-5 in multimodal medical reasoning provides a controlled evaluation of the model across a suite of medical benchmarks. On the MedXpertQA multimodal benchmark, which requires reasoning over both text and images, GPT-5 beats GPT-4o by a wide margin (+29.26% in reasoning, +26.18% in understanding) and also surpasses pre-licensed human experts on the same tasks (+24.23% and +29.40% respectively). The paper’s case study shows GPT-5 integrating visual and textual cues into a coherent diagnostic chain, exactly the kind of synthesis clinicians do. As a useful nuance, on the smaller VQA-RAD dataset, GPT-5-mini slightly outscored the full model, evidence that right-sizing can beat brute-force scaling for niche tasks.

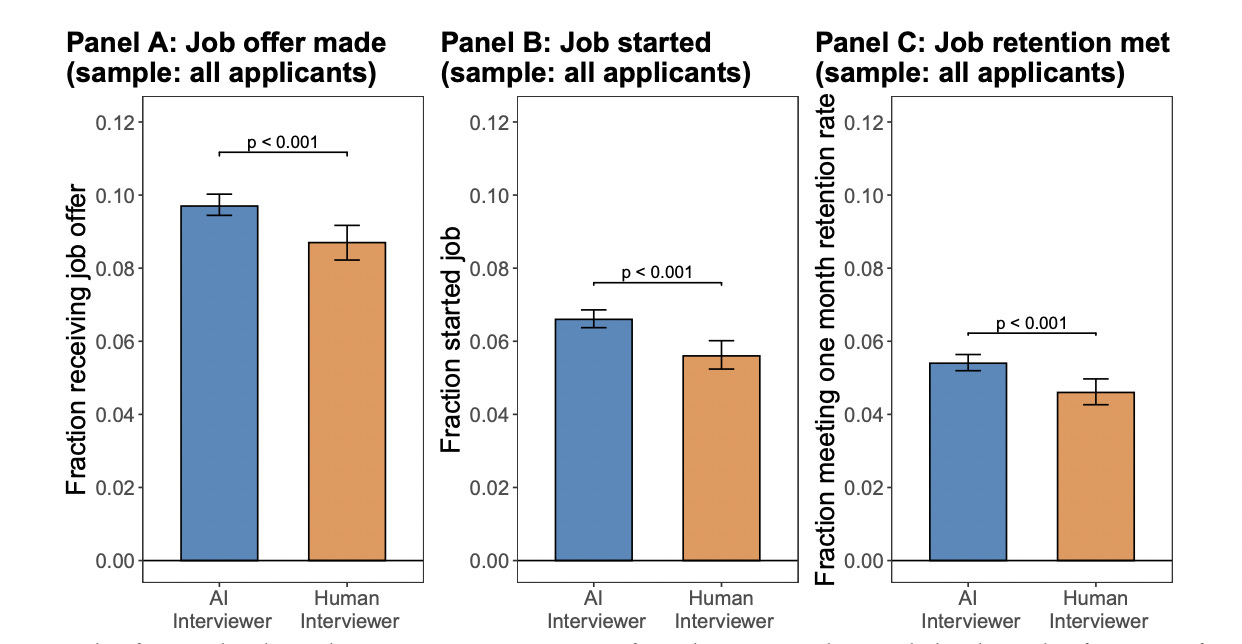

Second, a study on voice AI in firms reports on a large natural field experiment with over 70,000 applicants for customer service roles in the Philippines. It randomized interviews to a human recruiter, an AI voice agent, or a choice between the two, with humans always making the final hiring decision after reviewing the transcript. The headline results are striking: AI-led interviews produced 12% more job offers, 18% more job starts, and 17% higher 30-day retention. When offered the choice, 78% of applicants picked the AI interviewer. Transcript analysis suggests the mechanism is ‘consistency’, as the AI conducted more comprehensive interviews and elicited more hiring-relevant signals. Reported gender discrimination by the interviewer also nearly halved under AI.

Note: The figure displays the recruiting outcomes of applicants. Each panel displays the fraction of applicants who realize the specific outcome. Fractions are displayed separately for the Human Interviewer condition, in which applicants are interviewed by a human, and for the AI Interviewer condition, in which applicants are interviewed by an AI voice agent. Bars indicate 95% confidence intervals; p-values calculated from a two-sample proportion test.
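For readers who want to see what the caption's statistics involve, here is a minimal sketch of a two-sample proportion test and a 95% confidence interval for a single proportion. It uses the standard pooled z-test and the normal-approximation interval, which may differ in detail from what the study's authors ran. The function names and the counts in the usage lines are hypothetical and are not taken from the paper.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two independent proportions."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the null hypothesis that both groups share one proportion.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

def proportion_ci_95(successes, n):
    """Normal-approximation (Wald) 95% confidence interval for one proportion."""
    p = successes / n
    half_width = 1.96 * math.sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width

# Hypothetical counts for illustration only (NOT the study's data):
# offers out of applicants in a human-interviewer arm and an AI-interviewer arm.
z, p_value = two_proportion_z_test(870, 10_000, 975, 10_000)
low, high = proportion_ci_95(975, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}, AI-arm 95% CI = ({low:.4f}, {high:.4f})")
```

With samples in the tens of thousands, even a difference of about one percentage point can be statistically distinguishable, which is why the field experiment's scale matters.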

While these studies show clear capability and impact, a new report from MIT’s NANDA initiative, The GenAI Divide, paints a sobering enterprise picture. It finds that only about 5% of corporate AI pilots drive rapid revenue acceleration; most stall due to a learning and integration gap, not model quality. The research shows that purchasing specialized tools and partnering succeed roughly two-thirds of the time, while internal builds succeed only about one-third as often. Budgets are also misaligned, overweighting sales and marketing where ROI is weakest, while back-office automation delivers outsized returns.

Why should you care?

We’re living in a paradox: frontier models are demonstrating superhuman capabilities on targeted, controlled tasks, yet their impact in the enterprise lags because adoption is fundamentally a process problem, not a technology one. The MIT report confirms what many of us have suspected: most companies are mismanaging the AI transition. They favor costly and slow internal builds, which the report finds succeed only about one-third as often as purchased tools and partnerships. They spend far too long trying to build complex harnesses and fine-tuning pipelines around smaller models, attempting to coax out performance that leading proprietary models deliver off-the-shelf. This focus also means they are failing to design systems around the end-users themselves, struggling to make AI seamlessly fit into existing workflows or to bring humans into the loop where their judgment is most needed. Critically, they are not bringing industry experts into the development process, and they are not training their staff to use these powerful new tools effectively.

The interview study offers a direct blueprint for how to get this right. By separating the interaction from the adjudication, letting the AI gather structured signals and having humans make the final judgment, companies can de-risk adoption and unlock immediate gains. For high-value work, this means redesigning workflows around consistent, auditable signal capture, whether in sales discovery, insurance intake, or patient triage, with humans in the loop where it matters. For builders, two pragmatic lessons jump out: right-size the model to the data and task, and favor a buy-then-integrate strategy when speed and reliability are paramount.
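One way to picture the split between interaction and adjudication is the rough sketch below. It is an illustration of the pattern, not the system used in the study: the question keys, the `InterviewRecord` type, and both function names are hypothetical. The interaction stage only captures answers to a fixed question set, and the decision is always delegated to a reviewer callable that stands in for a human.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical fixed question set: every applicant gets the same prompts,
# which keeps the captured signals comparable and auditable.
QUESTIONS: List[str] = ["availability", "prior_experience", "language_skills"]

@dataclass(frozen=True)
class InterviewRecord:
    applicant_id: str
    answers: Dict[str, str]  # question key -> applicant's answer
    missing: List[str]       # question keys with an empty answer

def run_interview(applicant_id: str, ask: Callable[[str], str]) -> InterviewRecord:
    """Interaction stage: collect answers only, never a hiring decision.

    `ask` stands in for the AI voice agent; here it is any function that
    takes a question key and returns the applicant's answer as text.
    """
    answers = {q: ask(q).strip() for q in QUESTIONS}
    missing = [q for q, a in answers.items() if not a]
    return InterviewRecord(applicant_id, answers, missing)

def adjudicate(record: InterviewRecord,
               reviewer: Callable[[InterviewRecord], bool]) -> bool:
    """Adjudication stage: the reviewer decides from the stored record."""
    if record.missing:
        # Incomplete records are rejected here so a person can follow up.
        raise ValueError(f"incomplete record, missing: {record.missing}")
    return reviewer(record)

# Usage with canned answers and a stand-in reviewer (both hypothetical).
canned = {
    "availability": "Weekdays",
    "prior_experience": "2 years in support",
    "language_skills": "English, Tagalog",
}
record = run_interview("A-001", lambda q: canned.get(q, ""))
decision = adjudicate(record, reviewer=lambda r: "support" in r.answers["prior_experience"])
print(record.missing, decision)
```

Keeping the record immutable and the decision in a separate function means the same captured signals can be audited later, or re-reviewed by a different person, without re-running the interview.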

This all points to a massive and growing competency gap. It’s why we’ve split our academy into two clear pathways. Our AI Engineering Pathway is designed for those who want to build AI. Whether you’re a complete coding beginner needing to learn Python from scratch, or one of the millions of developers who have tinkered with APIs but now want to master the full engineering skillset — not just build surface-level demo projects — we have a starting point for you. For the much larger group of professionals who need to master using AI to excel in their existing roles, our AI for Work Pathway teaches the deep, practical skills to deploy AI across professional tasks such as writing, research, brainstorming, data analysis, and strategy with no coding required.

— Louie Peters — Towards AI Co-founder and CEO

Hottest News

1. NVIDIA AI Just Released the Largest Open-Source Speech AI Dataset and Models

NVIDIA has unveiled Granary, the largest open-source speech dataset for European languages, along with two new models: Canary-1b-v2 and Parakeet-tdt-0.6b-v3. Granary includes around one million hours of audio, 650,000 for speech recognition and 350,000 for translation. Canary-1b-v2, a billion-parameter encoder-decoder model, delivers high-quality transcription and translation across English and 24 European languages. Parakeet-tdt-0.6b-v3, with 600M parameters, is optimized for high-throughput transcription across all 25 supported languages.

2. Anthropic’s Claude AI Model Can Now Handle Longer Prompts

Anthropic has updated Claude Sonnet 4 to support prompts up to 1 million tokens, five times its previous 200,000 limit and more than double the 400,000-token window offered by OpenAI’s GPT-5. The expanded context support is now in public beta via the Anthropic API and Amazon Bedrock, with Google Vertex AI support coming soon.

3. Mistral AI Unveils Mistral Medium 3.1

Mistral AI has released Mistral Medium 3.1, bringing improvements in reasoning, coding, and multimodal performance. Benchmarks show the model performing at or above the level of leading systems, including Llama 4 Maverick, Claude Sonnet 3.7, and GPT-4o, particularly in long-context and multimodal tasks.

4. OpenAI Updates GPT-5 To Be “Warmer and Friendlier”

OpenAI has introduced subtle changes to GPT-5’s conversational style, aiming for a tone that feels “warmer and friendlier.” The model now adds light acknowledgments such as “Good question” or “Great start” in responses. OpenAI emphasized that internal testing shows no increase in sycophancy compared to the prior GPT-5 release.

5. OpenAI Priced GPT-5 So Low That It May Spark a Price War

Alongside the launch, OpenAI priced GPT-5 significantly lower than rivals like Anthropic’s Claude Opus 4.1 and even below its own GPT-4o model. Developers have called the model “cheaper and more capable,” raising expectations that the move could intensify competition and trigger a price war in the LLM market as Meta, Alphabet, and others continue ramping up infrastructure investments.

Five 5-minute reads/videos to keep you learning

1. LangGraph + MCP + GPT-5 = the Key to Powerful Agentic AI

This article demonstrates how to create a multi-tool AI agent by combining LangGraph with MCP (Model Context Protocol) and GPT-5. It explains how MCP servers manage external tools, such as YouTube transcript extraction or web search, and shows how LangGraph orchestrates them into a cohesive workflow. It also includes a detailed coding guide, demonstrating how these components can be integrated into a chatbot that handles complex, multi-step tasks.

Focusing on OpenAI’s latest model, this guide highlights how GPT-5 improves tool use, instruction-following, and agentic reasoning. It provides practical prompting strategies to encourage desired behaviors and shows how the Responses API can make multi-step tasks more efficient, particularly in coding and workflow automation.

3. The Hidden Potential of RAG Pipelines in Augmented Analytics for Non-Experts

This piece explores how Retrieval-Augmented Generation (RAG) can bring advanced analytics within reach of non-technical users. It explains how RAG pipelines retrieve information from large datasets, such as IoT data streams, before generating context-aware outputs with an LLM. It also provides a practical walkthrough covering semantic chunking, embedding, and indexing with FAISS to improve retrieval accuracy and answer quality.

Addressing the challenge of navigating complex legal documents, this article outlines how to build a legal search agent. The system uses Qdrant’s hybrid vector search, combining dense semantic vectors with sparse keyword matching, and integrates LlamaIndex with a Groq LLM to create a function-calling agent. The workflow covers document ingestion, vectorization, and query handling, showing how AI can streamline legal reasoning and discovery.

5. DSPy Pipelines & Compilers in Depth

This article examines DSPy, a framework for structuring AI reasoning as programmatic pipelines that can be automatically optimized by a compiler. It discusses how DSPy reduces reliance on manual prompt engineering, covers production deployment with FastAPI and monitoring with MLflow, and showcases real-world case studies.

Repositories & Tools

1. Open-lovable is an open-source app enabling users to clone and recreate any website as a modern React app quickly.

2. DINOv3 is a self-supervised computer vision model trained on 1.7 billion images with a 7 billion parameter architecture.

3. Parlant is a production-ready engine for AI chat agents that generate aligned responses and follow instructions.

4. Dots.ocr is a multilingual document parser that unifies layout detection and content recognition within a single vision-language model.

Top Papers of The Week

1. Training Long-Context, Multi-Turn Software Engineering Agents with Reinforcement Learning

This paper introduces a reinforcement learning framework for training long-context, multi-turn software engineering agents using a modified Decoupled Advantage Policy Optimization (DAPO) algorithm. It demonstrates a two-stage learning pipeline for training a Qwen2.5-72B-Instruct agent: rejection fine-tuning followed by reinforcement learning with the modified DAPO.

2. ReasonRank: Empowering Passage Ranking with Strong Reasoning Ability

This paper proposes ReasonRank, a novel passage ranking method that leverages LLMs to improve listwise ranking by synthesizing reasoning-intensive training data and employing a two-stage post-training approach. This approach includes supervised fine-tuning and reinforcement learning with a multi-view ranking reward. ReasonRank significantly outperforms existing baselines and achieves state-of-the-art performance on the BRIGHT leaderboard with low latency.

3. Grove MoE: Towards Efficient and Superior MoE LLMs with Adjugate Experts

This paper introduces Grove MoE, a novel Mixture of Experts architecture that groups heterogeneous experts with shared “adjugate” experts, enabling dynamic parameter activation based on token complexity. The resulting 33B-parameter GroveMoE models achieve state-of-the-art efficiency and performance, activating only ~3.14–3.28B parameters per token while matching or surpassing similarly sized open-source LLMs across reasoning, math, and coding benchmarks.

4. WebWatcher: Breaking New Frontier of Vision-Language Deep Research Agent

This paper introduces WebWatcher, a multi-modal agent for Deep Research. It utilizes synthetic multimodal trajectories for efficient training and tools for deep reasoning, improving generalization via reinforcement learning. In BrowseComp-VL benchmark tests, WebWatcher surpasses proprietary and open-source agents in complex information retrieval involving both visual and textual information.

5. NextStep-1: Toward Autoregressive Image Generation with Continuous Tokens at Scale

This paper introduces NextStep-1, a 14B autoregressive model paired with a 157M flow matching head. The model is trained on discrete text tokens and continuous image tokens, achieving state-of-the-art performance in high-fidelity image synthesis and image editing.

Quick Links

1. Salesforce AI Research has unveiled Moirai 2.0, the latest version of its time series foundation model. It is 44% faster in inference and 96% smaller than its predecessor, and it claimed the #1 spot on the GIFT-Eval benchmark.

2. Hugging Face has just released AI Sheets, a free, open-source, and local-first no-code tool designed to simplify dataset creation and enrichment with AI. It is a spreadsheet-style data tool purpose-built for working with datasets and leveraging AI models.

Who’s Hiring in AI

AI Engineer, Product, (Le Chat) @Mistral AI (Paris/ London)

Research Scientist — Trustworthy AI @NVIDIA (US/Remote)

Mid Back-end Engineer (NodeJS) — Spinnaker @SwingDev, a Hippo company (Warsaw/Remote)

Research Scientist — LLM Foundation Models @Binance (Multiple Countries/Remote)

Intermediate Software Engineer SRE — AI @PointClickCare (Ontario, Canada)

NLP & LLM Data Scientist — Healthcare & Life Sciences @Norstella (Multiple US Locations)

Sr. Software Engineer (GenAI-focused) @DoiT (Remote/Sweden)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.
