TAI #163: AI Unlocking History's Secrets; DeepMind's Aeneas Continues A Recent Trend

Also, Gemini's 980 trillion tokens, Qwen3‑Coder, Qwen‑mt‑turbo, and the White House AI Playbook.

What happened this week in AI by Louie

This week, Google put the scale of the recent LLM adoption takeoff into perspective, revealing that Gemini processed 980 trillion tokens in June 2025. That is roughly 2x the 480 trillion processed in April 2025 and about 100x the 9.7 trillion processed in April 2024. Direct comparisons are tricky, but The Information reported OpenAI’s API traffic at ~14 trillion tokens per week at the end of 2024, and DeepSeek processed over 5 trillion tokens per week in March 2025. Averaged over the month, Gemini handled roughly 229 trillion tokens per week in June, about 16x OpenAI’s reported late-2024 API rate, which underscores both the recent take-off of LLM usage and Google’s success in catching up to the frontier. Not all tokens are created equal: Flash-Lite is far less compute-heavy than 2.5 Pro, and many of these tokens may come from Google Search’s AI Overviews rather than direct user interaction. Still, the numbers indicate the huge scale at which LLMs are now being used. For context, Gemini’s June token usage is enough to process The Lord of the Rings series 1.5 billion times!

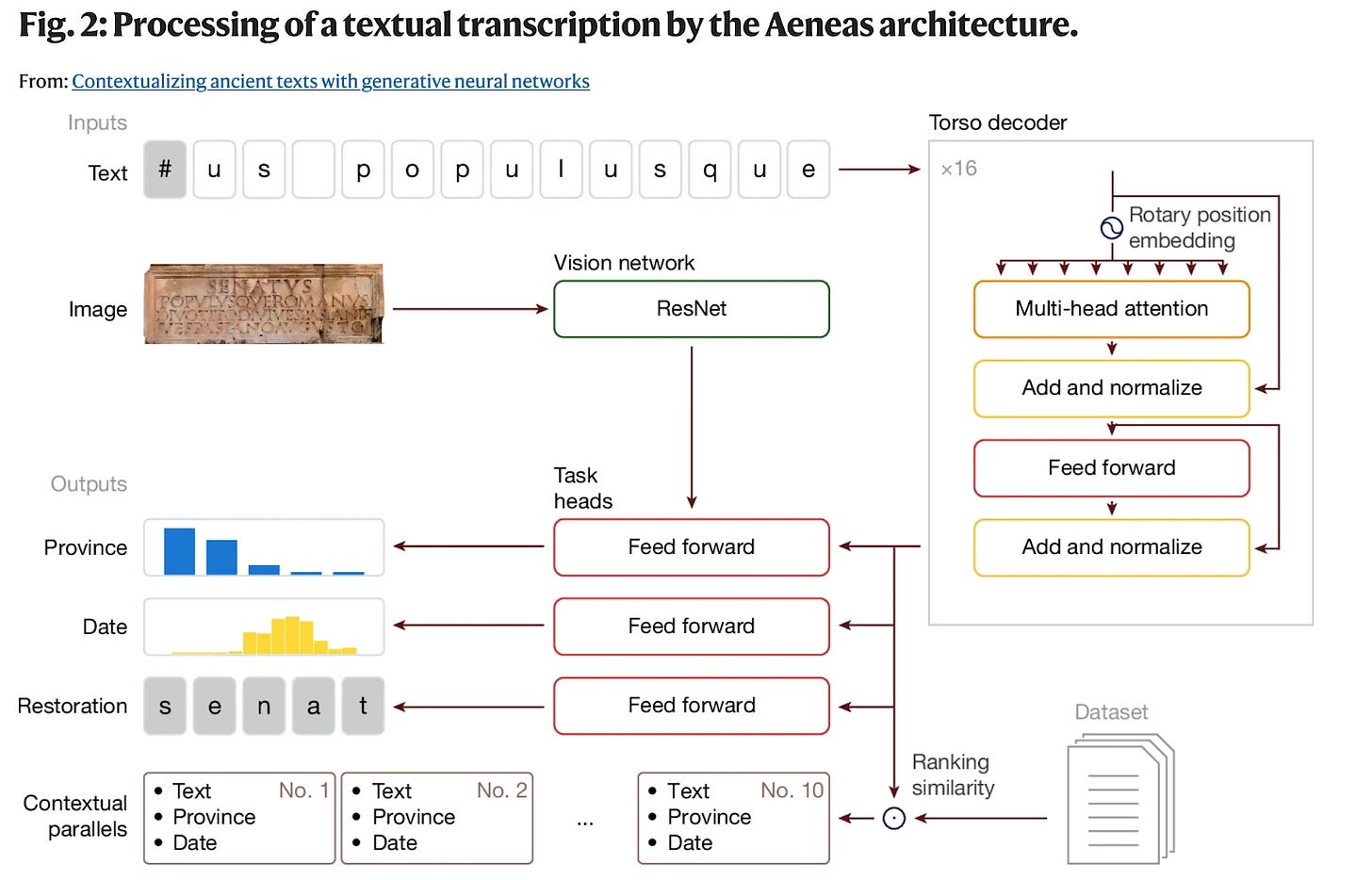
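The growth multiples and the OpenAI comparison above mix monthly and weekly figures, so it helps to normalize them before comparing. A quick back-of-the-envelope check, using only the figures quoted in this section and assuming June's total is spread evenly over its 30 days:

```python
# Figures quoted above, in tokens.
GEMINI_JUNE_2025 = 980e12
GEMINI_APRIL_2025 = 480e12
GEMINI_APRIL_2024 = 9.7e12
OPENAI_API_PER_WEEK_LATE_2024 = 14e12  # as reported by The Information
LOTR_PASSES = 1.5e9

DAYS_IN_JUNE = 30


def growth(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


# Assumption: spread June's monthly total evenly to get an average weekly rate.
gemini_per_week = GEMINI_JUNE_2025 / DAYS_IN_JUNE * 7

print(f"June vs April 2025: {growth(GEMINI_JUNE_2025, GEMINI_APRIL_2025):.2f}x")
print(f"June vs April 2024: {growth(GEMINI_JUNE_2025, GEMINI_APRIL_2024):.0f}x")
print(f"Gemini weekly average: {gemini_per_week / 1e12:.0f} trillion tokens")
print(f"vs OpenAI API, late 2024: {growth(gemini_per_week, OPENAI_API_PER_WEEK_LATE_2024):.1f}x")
print(f"Implied tokens per Lord of the Rings pass: {GEMINI_JUNE_2025 / LOTR_PASSES:,.0f}")
```

This prints roughly 2.04x, 101x, 229 trillion tokens per week, 16.3x, and about 653,000 tokens per pass. The weekly normalization matters: dividing the monthly total directly by a weekly figure would overstate the gap about fourfold.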

While LLMs are processing the present at an unprecedented rate, another fascinating application of AI is emerging: its ability to decode the past. This week, Google DeepMind published a paper in Nature on Aeneas, an AI model designed to help historians restore, date, and, crucially, contextualize ancient Roman inscriptions. It is a custom multimodal generative neural network, not a standard LLM, that processes both the text and images of inscriptions. Aeneas’s key feature is its “Parallels Search,” which encodes each of the roughly 176,000 inscriptions in its dataset as a rich numerical “embedding,” a kind of historical fingerprint. It can then retrieve texts with similar phrasing or formulas in seconds, a task that could take an expert weeks. The model also sets a new state of the art in restoring damaged texts and is the first that can fill gaps of unknown length. Using both linguistic cues and visual data from images of the stone, it can attribute an inscription to one of 62 Roman provinces with 72% accuracy and date it to within 13 years of its true date range.
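The retrieval idea behind Parallels Search (embed every inscription once, then rank the corpus by similarity to a query) can be sketched in a few lines. This is a toy illustration only: Aeneas learns its embeddings with a neural network, whereas this sketch swaps in character-trigram counts and cosine similarity, and the four Latin formulas in the corpus are illustrative examples, not records from Aeneas's dataset.

```python
import math
from collections import Counter


def trigram_embedding(text: str) -> Counter:
    """Toy stand-in for a learned embedding: counts of character trigrams."""
    padded = f"  {text.lower()}  "
    return Counter(padded[i:i + 3] for i in range(len(padded) - 2))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(corpus: list[str]) -> list[tuple[Counter, str]]:
    """Embed every text once so that queries only need to embed themselves."""
    return [(trigram_embedding(doc), doc) for doc in corpus]


def parallels_search(query: str, index: list[tuple[Counter, str]], k: int = 2) -> list[tuple[float, str]]:
    """Return the k indexed texts most similar to the query, best first."""
    q = trigram_embedding(query)
    scored = [(cosine(q, emb), doc) for emb, doc in index]
    return sorted(scored, reverse=True)[:k]


corpus = ["dis manibus sacrum", "hic situs est", "sit tibi terra levis", "imperatori caesari augusto"]
index = build_index(corpus)
for score, text in parallels_search("hic sita est", index):
    print(f"{score:.2f}  {text}")
```

A real system would store learned vectors and, at scale, use an approximate nearest-neighbour index, but the query path is the same: embed, score, sort.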

This is just the latest in a recent series of incredible AI-driven historical and archaeological discoveries. In February 2024, the Vesuvius Challenge used a custom computer vision pipeline to virtually unroll and read part of one of the 2,000-year-old Herculaneum papyrus scrolls, which were carbonized by the eruption of Mount Vesuvius, revealing over 2,000 characters of a lost Greek philosophical text. In June 2025, the Enoch model combined handwriting analysis with radiocarbon data to re-date the Dead Sea Scrolls, suggesting many fragments are decades older than previously thought. Textual reconstruction has also seen major progress. The cuneiBLAST project, with results reported through 2024, has been using a custom pattern-recognition algorithm to piece together the 4,000-year-old Epic of Gilgamesh from thousands of scattered cuneiform tablet fragments. Even hieroglyphs are being tackled: the Hieroglyphic Transformer, published at ACL in 2024, fine-tuned a standard translation model to translate Middle Egyptian with competitive accuracy.
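The cuneiBLAST name points to BLAST-style local sequence alignment, which suits fragment joining: a small fragment only needs to match some stretch of a longer known text. As a simplified stand-in (plain Smith-Waterman, not the project's actual algorithm or scoring), the sketch below scores how well a fragment's sign sequence aligns anywhere inside a reference text. The transliterated sign sequences and the match, mismatch, and gap scores are illustrative assumptions.

```python
def local_alignment_score(
    fragment: list[str], reference: list[str], match: int = 2, mismatch: int = -1, gap: int = -1
) -> int:
    """Smith-Waterman score of the best local alignment between two sign sequences."""
    rows, cols = len(fragment) + 1, len(reference) + 1
    # h[i][j]: best score of an alignment ending at fragment[i-1] and reference[j-1].
    h = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = h[i - 1][j - 1] + (match if fragment[i - 1] == reference[j - 1] else mismatch)
            # Flooring at zero lets an alignment start anywhere (the "local" part).
            h[i][j] = max(0, diag, h[i - 1][j] + gap, h[i][j - 1] + gap)
            best = max(best, h[i][j])
    return best


def signs(transliteration: str) -> list[str]:
    """Split a hyphen/space-separated transliteration into individual signs."""
    return transliteration.replace("-", " ").split()


reference = signs("ša nag-ba i-mu-ru iš-di ma-a-ti")
print(local_alignment_score(signs("i-mu-ru iš-di"), reference))  # 10: five consecutive matching signs
print(local_alignment_score(signs("a-na e-kal-li"), reference))  # 2: only a single shared sign
```

Exhaustive dynamic programming like this costs time proportional to both sequence lengths, which is why BLAST-style tools add seeding heuristics when searching thousands of fragments.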

Beyond texts, AI is being used to discover new archaeological sites. By applying custom deep learning models to aerial imagery, a project at Yamagata University in 2024 identified 303 new Nazca geoglyphs in Peru. Similarly, a March 2025 study used a segmentation model on LiDAR data to map over 1,000 hidden reservoirs around Angkor, cutting manual mapping time by 90%.

Why should you care?

These recent AI-driven historical and archaeological discoveries are the result of a powerful convergence: decades of painstaking work gathering clues, together with more recent digitization, have finally built up enough “data gravity” to combine with mature AI architectures and accessible compute. For centuries, history and archaeology have been slow, manual disciplines, often relying on chance discoveries or the encyclopedic memory of a single expert. AI can turn them into systematic, scalable, data-driven sciences.

Crucially, this is a story of augmented intelligence, not artificial replacement. In every successful case, AI serves as a powerful collaborator. Aeneas suggests restorations, but historians must use their judgment to verify them. The AI flags a potential Nazca line or Maya ruin, but archaeologists must confirm it on the ground. This human-in-the-loop model is proving to be incredibly effective, freeing up experts from tedious grunt work to focus on higher-level interpretation and analysis.

We most often discuss AI’s potential value in boosting work productivity and quality, or sometimes aiding health and medicine, but it has exciting applications emerging across many other fields. The potential for future discoveries is immense. The same computer vision techniques used on the Vesuvius scrolls could be applied to other unreadable documents, from charred texts in Byzantine churches to papyrus fragments in mummy cartonnage. The inscription models built for Latin and Greek could be adapted to decipher everything from Viking runes to Egyptian hieroglyphs. The remote sensing methods that uncovered a Maya megalopolis could be deployed over the Amazon or the Sahara to find other lost settlements.

Going forward, we will likely see proactive LLM agents that continuously scan newly digitized archives and satellite feeds, automatically flagging new connections or potential sites for researchers. For anyone building with AI, one takeaway is clear: investing first in creating, curating, or collecting unique and original datasets can lead to the most profound discoveries and defensible products!

— Louie Peters — Towards AI Co-founder and CEO

Hottest News

1. Google Is Testing a Vibe-Coding App Called Opal

Google is piloting Opal through Google Labs in the U.S. This tool lets users create mini web apps using natural language prompts or by remixing existing examples from a gallery. Once generated, apps can be refined in a visual editor, where each workflow step is visible and editable, and additional steps can be added via the toolbar.

2. Alibaba Released a New Agentic Coder Model

Alibaba has introduced Qwen3‑Coder, an open‑source AI model designed for software development. Its flagship version, Qwen3‑Coder‑480B‑A35B‑Instruct, uses a mixture‑of‑experts architecture with 480 billion total parameters, of which 35 billion are active per token, and supports a 256,000‑token context window, expandable to one million. Alongside the model, Alibaba released Qwen Code, a command‑line tool tailored for Qwen3‑Coder with updated prompts and function‑call support.
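With 35B of 480B parameters active, only about 7% of the model's weights take part in processing any single token. That sparsity comes from a router that sends each token to a few experts. The sketch below shows generic top-k softmax routing with toy scalar "experts"; the expert count, k, and router logits are hypothetical and are not Qwen3-Coder's actual configuration.

```python
import math
from typing import Callable


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: list[float], k: int) -> list[tuple[int, float]]:
    """Select the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in chosen)
    return [(i, probs[i] / total) for i in chosen]


def moe_forward(x: float, experts: list[Callable[[float], float]], router_logits: list[float], k: int) -> float:
    """Combine only the selected experts' outputs; unselected experts are never evaluated."""
    return sum(weight * experts[i](x) for i, weight in top_k_route(router_logits, k))


print(f"active fraction: {35 / 480:.1%}")
experts = [lambda x, s=s: s * x for s in range(8)]  # toy expert i multiplies its input by i
logits = [0.1, 2.0, -1.0, 3.0, 0.0, 0.5, -0.5, 1.0]
print(top_k_route(logits, k=2))
print(moe_forward(1.0, experts, logits, k=2))
```

In a real model each expert is a feed-forward block and routing happens per token per layer, but the cost saving is the same: compute scales with the k chosen experts, not the total.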

3. GitHub Introduces Vibe Coding With Spark

GitHub has opened a public preview of Spark, a platform for Copilot Pro+ users that can generate full‑stack web applications from plain English descriptions. Spark builds front‑end and back‑end logic, connects databases, and handles deployment. A visual editor allows users to adjust prompts, review workflows, and export completed apps or code repositories.

4. The U.S. White House Releases AI Playbook

The White House published “Winning the Race: America’s AI Action Plan,” a 28‑page roadmap outlining more than 90 actions to accelerate AI innovation, expand domestic infrastructure, and strengthen U.S. leadership in global AI. The plan calls for streamlined approvals for chip manufacturing and data centers, updated export policies, and initiatives to establish international standards. It follows Executive Order 14179, issued in January, which mandated the development of this national strategy.

5. Alibaba Qwen Introduces Qwen3-MT

Alibaba has also released Qwen3‑MT (qwen‑mt‑turbo) via its Qwen API. Trained on trillions of multilingual tokens, it supports 92 languages and dialects and ranks among the top performers on translation benchmarks for directions such as Chinese-English and English-German, surpassing models like GPT-4.1-mini and Gemini‑2.5‑Flash.

Five 5-minute reads/videos to keep you learning

This article presents a framework for building an adaptive portfolio strategy by analyzing the historical decisions of multiple fund managers. It uses the Analytic Hierarchy Process (AHP) to establish clear, governance-driven rules for balancing risk and return, alongside a preference model that identifies successful patterns from past trajectories. A Twin Delayed Deep Deterministic Policy Gradient (TD3) agent combines inputs from both AHP and the preference model as a reward signal to optimize daily weight adjustments. The approach delivers an explainable, automated strategy that merges human-defined priorities with AI-driven optimization.
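The AHP step turns pairwise judgments ("expected return matters three times as much as volatility") into numeric weights. A minimal sketch, assuming three hypothetical criteria and illustrative judgments (the article's actual criteria and matrix are not given here): the weights are the principal eigenvector of the pairwise-comparison matrix, found by power iteration, and Saaty's consistency ratio checks that the judgments are not self-contradictory, with values below 0.1 conventionally treated as acceptable.

```python
# Saaty's random consistency index, by matrix size.
RANDOM_INDEX = {3: 0.58, 4: 0.90, 5: 1.12}


def ahp_weights(matrix: list[list[float]], iterations: int = 100) -> list[float]:
    """Principal eigenvector of a pairwise-comparison matrix via power iteration, summing to 1."""
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(iterations):
        v = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(v)
        w = [x / total for x in v]
    return w


def consistency_ratio(matrix: list[list[float]], w: list[float]) -> float:
    """CR = CI / RI, where CI = (lambda_max - n) / (n - 1)."""
    n = len(matrix)
    if n not in RANDOM_INDEX:
        raise ValueError("this sketch only tabulates RI for 3 to 5 criteria")
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    return (lambda_max - n) / (n - 1) / RANDOM_INDEX[n]


# Hypothetical criteria: expected return, volatility, drawdown.
# Entry [i][j] says how much more important criterion i is than criterion j.
judgments = [
    [1.0, 3.0, 5.0],
    [1 / 3, 1.0, 3.0],
    [1 / 5, 1 / 3, 1.0],
]
weights = ahp_weights(judgments)
print([round(x, 3) for x in weights], round(consistency_ratio(judgments, weights), 3))
```

A consistency ratio above 0.1 would suggest revisiting the judgments before letting the weights shape a reward signal.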

2. OneRec: A Recommendation Model Based on Large Language Model

The article introduces OneRec, a recommendation framework built around a single encoder–decoder architecture inspired by large language models. It explains how OneRec consolidates traditional multi-stage recommendation systems into an end-to-end pipeline, where user behavior and item data are tokenized into semantic IDs and fed directly into the model to generate recommendation lists.
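Semantic IDs are what let a generative model "write out" recommendations token by token. A common way to build them is residual quantization: pick the nearest vector in a first codebook, subtract it, and quantize what remains with the next codebook, so each item becomes a short tuple of codes. The sketch below assumes tiny hand-written 2-D codebooks; in practice codebooks are learned from data (for example, with k-means), and OneRec's exact tokenization procedure is not reproduced here.

```python
Vector = list[float]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def semantic_id(embedding: Vector, codebooks: list[list[Vector]]) -> tuple[int, ...]:
    """Residual quantization: one code per level, each level encoding what the previous missed."""
    residual = list(embedding)
    codes = []
    for codebook in codebooks:
        idx = min(range(len(codebook)), key=lambda i: squared_distance(residual, codebook[i]))
        codes.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tuple(codes)


# Hypothetical two-level codebooks: coarse directions, then small corrections.
codebooks = [
    [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],
    [[0.1, 0.0], [0.0, 0.1], [-0.1, 0.0], [0.0, -0.1]],
]
print(semantic_id([0.9, 0.15], codebooks))
print(semantic_id([0.05, 1.12], codebooks))
```

Items that share a code prefix sit near each other in embedding space, which is what makes the IDs "semantic" and lets the decoder generate them one level at a time.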

3. Building a Supply Chain Agent with CrewAI

This article walks through building a multi-agent system for a supply chain task using CrewAI and OpenAI’s API. It uses a two-agent setup: one agent drafts a request for quotation (RFQ), and the other evaluates carriers using a custom-built Python tool that scores and ranks them. The step-by-step configuration shows how the agents work together to produce both a finalized RFQ document and a carrier recommendation report, demonstrating a practical application of multi-agent architectures.
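The carrier-evaluation tool is, at heart, a weighted scoring function. A minimal sketch of one, with hypothetical fields, weights, and carrier data (the article's actual tool is not reproduced here): each metric is min-max normalized so that 1.0 is always best, then the metrics are combined with fixed weights. A plain Python function like this could then be exposed to an agent as a custom tool.

```python
from dataclasses import dataclass


@dataclass
class Carrier:
    name: str
    cost_per_mile: float  # lower is better
    on_time_rate: float   # fraction 0..1, higher is better
    damage_rate: float    # fraction 0..1, lower is better


# Hypothetical weights; they sum to 1 so scores stay in [0, 1].
WEIGHTS = {"cost": 0.4, "on_time": 0.4, "damage": 0.2}


def _normalize(values: list[float], higher_is_better: bool) -> list[float]:
    """Min-max scale to [0, 1] so that 1.0 is always the best value."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    return [(v - lo) / (hi - lo) if higher_is_better else (hi - v) / (hi - lo) for v in values]


def rank_carriers(carriers: list[Carrier]) -> list[tuple[float, str]]:
    cost = _normalize([c.cost_per_mile for c in carriers], higher_is_better=False)
    on_time = _normalize([c.on_time_rate for c in carriers], higher_is_better=True)
    damage = _normalize([c.damage_rate for c in carriers], higher_is_better=False)
    scored = [
        (WEIGHTS["cost"] * cost[i] + WEIGHTS["on_time"] * on_time[i] + WEIGHTS["damage"] * damage[i], c.name)
        for i, c in enumerate(carriers)
    ]
    return sorted(scored, reverse=True)


carriers = [
    Carrier("Carrier A", 2.10, 0.95, 0.010),
    Carrier("Carrier B", 1.80, 0.88, 0.020),
    Carrier("Carrier C", 2.40, 0.97, 0.005),
]
for score, name in rank_carriers(carriers):
    print(f"{name}: {score:.3f}")
```

Min-max normalization keeps metrics with different units comparable, at the cost of making scores relative to the current candidate pool.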

4. Deep Research with OpenAI’s API Key

This piece demonstrates how to build a cost-effective deep-research agent using OpenAI’s API as an alternative to ChatGPT’s subscription-based deep research features. The guide explains how to orchestrate research workflows by combining OpenAI’s deep research models (o3-deep-research and o4-mini-deep-research), web search tools, and code interpretation in Python. It walks through setting up an iterative research loop with a primary “Research Agent,” a “Critique Agent,” and a “Report Agent” to validate and refine outputs while tracking costs.

5. AI Agents vs Agentic AI: The Mind-Blowing Difference That Will Change Everything

This article examines the transition from conventional, task-specific AI agents to more autonomous agentic AI systems. While standard agents execute predefined commands, agentic AI can set goals, learn from outcomes, and interpret context to act proactively. Through real-world business and consumer examples, the author shows how agentic systems can coordinate multiple agents to deliver more comprehensive solutions. The article also addresses challenges around trust, oversight, and accountability as AI systems become more independent.

Repositories & Tools

1. Llama-3.3-Nemotron-Super-49B-v1.5 is an NVIDIA reasoning model post-trained for reasoning, human chat preferences, and agentic tasks such as RAG and tool calling.

2. WrenAI is an open-source Generative Business Intelligence (GenBI) agent designed for natural-language interaction with structured data.

Top Papers of The Week

1. Inverse Scaling in Test-Time Compute

This study examines inverse scaling in Large Reasoning Models (LRMs), showing that extending reasoning length can reduce accuracy across tasks such as counting, regression, deduction, and AI risk evaluation. The authors identify failure modes, including distraction, overfitting, and loss of focus, that emerge at longer reasoning chains, emphasizing the need to assess and address performance degradation at varying reasoning lengths.

2. Beyond Context Limits: Subconscious Threads for Long-Horizon Reasoning

The paper introduces TIM, a transformer-based LLM trained to perform structured, recursive reasoning by decomposing problems into a tree of subtasks, and TIMRUN, its inference engine that prunes irrelevant memory to bypass context window limits. Together, TIM and TIMRUN enable efficient long-horizon reasoning and multi-hop tool use in a single inference pass, outperforming agent-based systems in both accuracy and throughput without requiring post-training or prompt engineering.

3. Group Sequence Policy Optimization

Researchers present GSPO, a reinforcement learning method that improves the efficiency and stability of LLM training. Unlike token-level approaches such as GRPO, GSPO defines the importance ratio on whole-sequence likelihood and performs clipping and optimization at the sequence level. It outperforms GRPO, stabilizes Mixture‑of‑Experts RL training, and simplifies RL infrastructure design, contributing to performance gains in the latest Qwen3 models.
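The difference from token-level methods is easiest to see in the importance ratio. A minimal sketch of a GSPO-style objective value, assuming per-token log-probabilities are already available as plain lists: the sequence ratio is the length-normalized likelihood ratio, exp of the mean token log-ratio; advantages are rewards normalized within the group sampled for one prompt; and clipping is applied once per sequence rather than once per token. The clipping range is an illustrative placeholder, not the paper's setting, and a real trainer would compute this on autodiff tensors to obtain gradients.

```python
import math
from statistics import mean, pstdev


def sequence_ratio(logp_new: list[float], logp_old: list[float]) -> float:
    """(pi_new(y|x) / pi_old(y|x)) ** (1/|y|), computed in log space for stability."""
    log_ratio = sum(n - o for n, o in zip(logp_new, logp_old))
    return math.exp(log_ratio / len(logp_new))


def group_advantages(rewards: list[float]) -> list[float]:
    """Normalize rewards within the group of responses sampled for one prompt."""
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + 1e-8) for r in rewards]


def gspo_objective(
    new_logps: list[list[float]], old_logps: list[list[float]], rewards: list[float], eps: float = 0.2
) -> float:
    """Clipped surrogate with one importance ratio and one clip per sequence."""
    terms = []
    for lp_new, lp_old, adv in zip(new_logps, old_logps, group_advantages(rewards)):
        s = sequence_ratio(lp_new, lp_old)
        s_clipped = min(max(s, 1 - eps), 1 + eps)
        terms.append(min(s * adv, s_clipped * adv))
    return sum(terms) / len(terms)


# Two sampled responses of different lengths for the same prompt (toy numbers).
old = [[-1.2, -0.8, -2.0], [-0.5, -1.5]]
new = [[-1.0, -0.7, -1.9], [-0.6, -1.6]]
print(gspo_objective(new, old, rewards=[1.0, 0.0]))
```

Because the ratio is a per-sequence geometric mean, a few volatile tokens cannot swing it the way individual token ratios can, which is the stability argument the paper makes for MoE training.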

4. GUI-G2: Gaussian Reward Modeling for GUI Grounding

GUI‑G2 introduces a Gaussian reward modeling framework for GUI grounding, improving performance by 24.7% on the ScreenSpot‑Pro benchmark. By representing interface elements as continuous Gaussian distributions, GUI‑G2 transforms GUI grounding into a continuous optimization problem, enhancing spatial reasoning, robustness to interface variations, and generalization to unseen layouts.
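The intuition behind Gaussian rewards is that a click near an element's centre should earn more than one near its edge, with a tolerance that scales with the element's size, instead of a binary hit-or-miss signal. A simplified point-reward sketch, assuming a box given as (x_min, y_min, x_max, y_max) and a hypothetical `sigma_scale`; the paper's full formulation includes additional components and is not reproduced exactly here.

```python
import math


def gaussian_point_reward(
    px: float, py: float, box: tuple[float, float, float, float], sigma_scale: float = 0.5
) -> float:
    """Reward in (0, 1] from an axis-aligned 2-D Gaussian centred on the target element.

    The standard deviation along each axis is proportional to the element's width or
    height, so small targets demand more precise clicks than large ones.
    """
    x_min, y_min, x_max, y_max = box
    cx, cy = (x_min + x_max) / 2, (y_min + y_max) / 2
    sx, sy = sigma_scale * (x_max - x_min), sigma_scale * (y_max - y_min)
    return math.exp(-0.5 * (((px - cx) / sx) ** 2 + ((py - cy) / sy) ** 2))


button = (0.0, 0.0, 100.0, 40.0)
for x in (50.0, 70.0, 95.0):
    print(x, round(gaussian_point_reward(x, 20.0, button), 3))
```

The smooth falloff gives the policy a useful gradient even for near misses, which a binary inside-the-box reward cannot provide.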

5. An emergent safety vulnerability of Diffusion LLMs

This study reveals safety vulnerabilities in diffusion-based LLMs (dLLMs) using DIJA, a framework that exploits masked-input adversarial prompts. DIJA surpasses prior jailbreak methods, exposing weaknesses in current alignment approaches. The authors report attack success rates as high as 100% against Dream‑Instruct, underscoring the urgency of reassessing safety protocols for dLLMs.

Quick Links

1. Google DeepMind developed Aeneas, a transformer-based generative neural network that performs restoration of damaged text segments, chronological dating, geographic attribution, and contextualization through the retrieval of relevant epigraphic parallels. Aeneas is trained on the Latin Epigraphic Dataset (LED), an integrated and harmonized corpus of 176,861 Latin inscriptions aggregating records from three major databases.

Who’s Hiring in AI

Cloud & AI Solution Engineer — AI Applications @Microsoft Corporation (Toronto, Canada)

Strategic Partner Development, Product Partnerships @Anthropic (New York/San Francisco)

Senior Generative AI Research Engineer @NVIDIA (Remote, USA)

AI Visual Content Producer @Newsweek (Remote, UK)

Lead AI Engineer @TEKsystems (Remote)

GenAI Engineering Manager @Turing (New York, NY, USA)

Senior Python Software Engineer @Backbase (Amsterdam, Netherlands)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.
