TAI #162: The Agentic Era of AI: From IMO Gold to Real-World Work with ChatGPT Agent

Also: xAI companions, Mistral Voxtral, Amazon AgentCore, Anthropic’s new transparency framework and more

What happened this week in AI by Louie

LLMs crossed the 100‑minute reasoning horizon this week. The week's AI news showcased the incredible potential of frontier models, the aggressive race to announce key capability milestones, and the messy reality of turning those capabilities into products. The central theme was the clear arrival of agentic and more “general” AI: a significant evolution towards models that can work with existing data formats and user interfaces, act autonomously, and solve complex, multi-step problems.

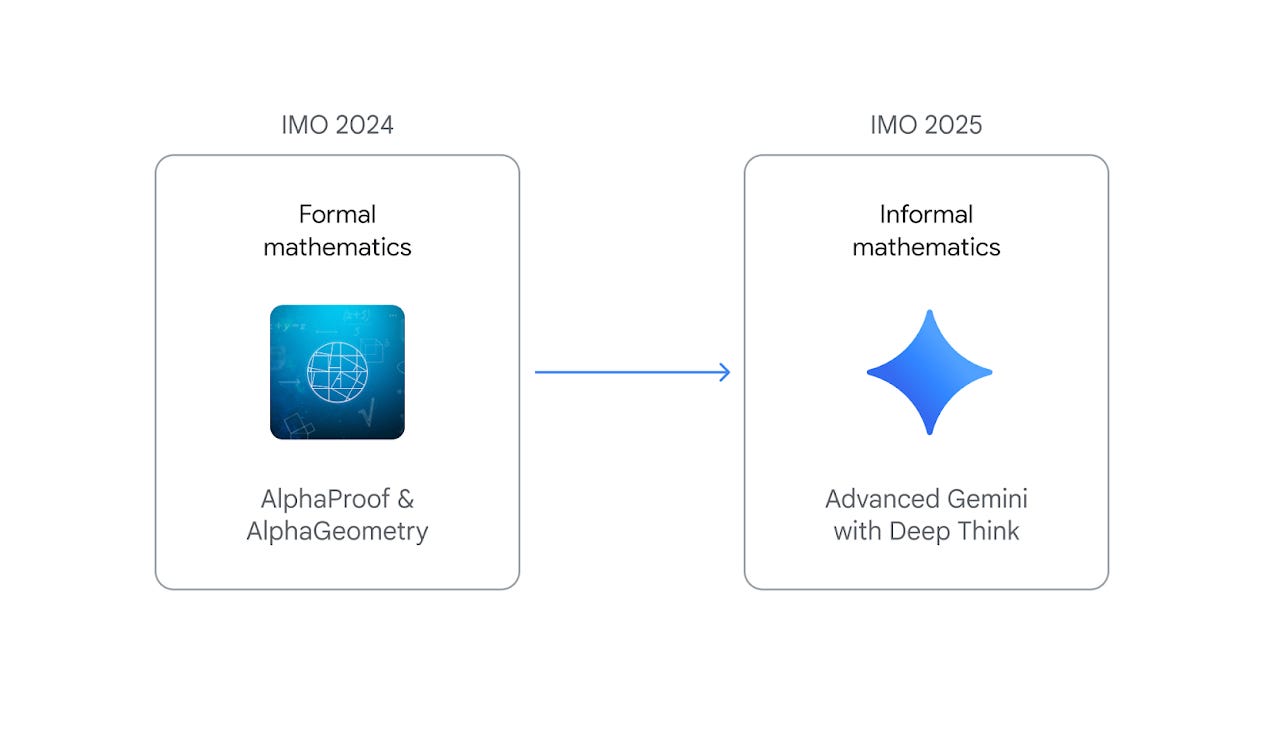

The headline achievement came from the International Mathematical Olympiad (IMO), where both OpenAI and Google DeepMind announced that their models had achieved gold-medal standard performance. This is a profound milestone. IMO problems are not about rote calculation; they demand sustained, creative reasoning over hours. As OpenAI researcher Alexander Wei framed it, the reasoning time horizon for AI has progressed from seconds (grade-school math) to minutes (the AIME benchmark) and now to over 100 minutes for IMO-level tasks. This year, both labs used LLMs to produce human-readable proofs in natural language within the 4.5-hour competition time limit. That is a huge leap from last year’s specialized system, which operated under different rules and data formats from the human competitors: its problems were manually translated into a formal language, and some took days to solve.

Google DeepMind credited their 35/42 score to an advanced version of Gemini with Deep Think, an enhanced reasoning mode that uses parallel thinking to explore multiple solution paths at once. OpenAI emphasized that their breakthrough came from a general-purpose model using novel reinforcement learning techniques designed to handle hard-to-verify, open-ended tasks, not a bespoke math solver. These techniques will not be available in a public model for several months, however, and are not included in the upcoming GPT-5.

The common technical thread is inference-time scaling and more agentic LLM systems. These are not single, linear chains of thought. Like xAI's Grok 4 Heavy, these models likely use parallel sampling and other inference-time scaling techniques: running many attempts at a problem, comparing notes, and constructing a final, robust answer. This approach delivers a step-change in capability for high-value tasks. For the past year, most of my own LLM usage has relied on a similar method, either through automated loops built into LLM workflows, where an LLM critiques each response and the model then revises it, or by running the same loop manually in ChatGPT or Gemini.
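Neither lab has published the details of its system, so the sketch below shows only the simplest form of parallel inference-time scaling: self-consistency, or majority voting over independent samples. `fake_llm_attempt` is a hypothetical stand-in for a sampled model call. Exact-match voting only works for short final answers; full proofs would instead need a grader or verifier model to compare and merge candidates.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
import random


def fake_llm_attempt(problem: str, seed: int) -> str:
    """Hypothetical stand-in for one sampled LLM attempt.

    A real system would call a model with temperature > 0. Here we simulate a
    solver that is right about 60% of the time and otherwise returns a
    scattered wrong answer.
    """
    rng = random.Random(seed)
    if rng.random() < 0.6:
        return "42"
    return str(rng.randint(0, 100))


def parallel_majority_vote(problem: str, n: int = 16) -> tuple[str, float]:
    """Run n independent attempts concurrently, then return the most common
    answer and the fraction of attempts that agreed with it."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        answers = list(pool.map(lambda s: fake_llm_attempt(problem, s), range(n)))
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n


answer, agreement = parallel_majority_vote("toy problem", n=64)
```

Because wrong answers tend to disagree with each other while correct ones coincide, the vote is far more reliable than any single attempt; the agreement fraction doubles as a rough confidence signal.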

The announcements also highlighted a growing rivalry. Google DeepMind collaborated directly with the IMO for official certification of their score and respectfully waited until after the human contestants were celebrated to share their results. OpenAI, in contrast, sparked controversy by announcing their 35/42 score immediately on Saturday, drawing criticism from the IMO community for overshadowing the human participants. Their model was not officially scored by the IMO; instead, its proofs were graded by three former IMO medalists.

While the IMO models represent the research frontier, OpenAI also took a major step toward bringing agentic capabilities, albeit less powerful ones, to the masses with the launch of the ChatGPT Agent. This new feature unifies the strengths of previous tools like Operator (web browsing) and Deep Research (analysis) into a single, proactive system. In particular, we are excited that the agent can interact with websites to refine results and access content requiring user authentication, then use what it finds to create in-depth, Deep Research-style analysis and reports. It operates within its own virtual computer, equipped with a browser, a terminal, and API connectors, allowing it to take on complex requests like “analyze three competitors and create a slide deck.” (Of course, far better results will still come from giving the AI much more detailed instructions and tips on how to perform the task!)

Having tested it, my impression is that it’s a significant milestone in potential but still very much a research preview in practice. On the positive side, its integration is seamless, and for the first time, it makes a true AI agent accessible to a mass market. When it works, it can successfully complete complex, multi-step tasks. The human-in-the-loop design, where you can interrupt or take over at any point, is also well implemented. However, the experience is often hampered by significant slowness, not just from the model thinking but from waiting on website load times and other infrastructure bottlenecks. The agent's ability to interact with web elements can be inaccurate, and the overall user experience can feel more like a tech demo than a polished tool. We think the agent can already provide a lot of value for many enterprise tasks, but it still requires a frustrating amount of oversight and correction. It's a clear turning point, but at this stage I expect only AI power users to get productivity-boosting results; widespread adoption will require more refinement.

Why should you care?

The ability of an AI to achieve a gold medal at the IMO signals that LLMs are beginning to master a new class of problem. The key breakthrough is generating intricate, watertight arguments in natural language, which requires a level of sustained, creative reasoning that was, until recently, considered years away. It validates the idea that progress in reinforcement learning is moving beyond tasks with simple, verifiable rewards (like winning a game) to the much harder domain of rewarding complex, open-ended intellectual work. This has profound repercussions. The deeper shift isn’t that an LLM can solve Olympiad problems; it’s that we can now treat thought itself as a parallelizable search space, analogous to AlphaGo and AlphaFold. These models could accelerate research not just in pure math but in any field that relies on it, from physics to cryptography and drug discovery. Beyond research, the same techniques will also be applied to many high-value work tasks across the economy.

However, this new power is computationally expensive. The agentic techniques used to crack IMO problems (parallel rollouts, multi-path reasoning) are what deliver the breakthrough reliability, but they come at a steep cost in GPU time and latency. This will almost certainly lead to even further tiering of AI capabilities, beyond today's $200-300 per month premium AI plans. We'll have fast, cheap models for everyday tasks and expensive, “heavy” agent modes deployed for high-stakes problems that justify the investment. The ChatGPT Agent, despite its current flaws, is a glimpse of another “heavy” compute mode (Plus and Team subscribers get only 40 agent messages per month) and of a new way of working. It demands a new skill from us as users: prompting becomes more like project management, where you guide, correct, and oversee an autonomous digital worker. Giving an agent a full browser and terminal adds power, but it also creates an attack surface; prompt-injection proof-of-concepts can already trick Operator into leaking information. So there are new lessons to learn on both the effective-usage and the safe-usage fronts.
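The cost side of parallel rollouts is simple arithmetic, sketched below with purely hypothetical numbers (20k reasoning tokens per attempt, $10 per million output tokens, 60 seconds per attempt); none of these are published figures for any lab's model.

```python
def rollout_budget(n_rollouts: int, tokens_per_rollout: int,
                   usd_per_million_tokens: float, seconds_per_rollout: float,
                   parallel: bool = True) -> tuple[float, float]:
    """Return (dollar cost, wall-clock seconds) for n independent rollouts.

    Token cost grows linearly with n however the rollouts are scheduled;
    wall-clock time only stays flat if every rollout runs concurrently.
    The extra pass needed to compare and merge candidates is ignored.
    """
    cost = n_rollouts * tokens_per_rollout * usd_per_million_tokens / 1_000_000
    latency = seconds_per_rollout if parallel else n_rollouts * seconds_per_rollout
    return cost, latency


# Hypothetical inputs for illustration only.
fast = rollout_budget(1, 20_000, 10.0, 60.0)
heavy = rollout_budget(32, 20_000, 10.0, 60.0)
heavy_sequential = rollout_budget(32, 20_000, 10.0, 60.0, parallel=False)
```

Under these assumptions a 32-way "heavy" mode costs 32 times as much per query ($6.40 versus $0.20) while keeping latency flat only if the provider can dedicate 32 times the GPUs at once, which is why such modes end up behind premium tiers and message caps.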

The journey from experimental models that can win gold medals to polished, reliable everyday agents will be long and arduous. The technical hurdles, particularly in creating a robust interface with the messy, unpredictable web, are significant. While these new agentic systems are more “general” out of the box, we still think building custom agents and custom LLM workflows will lead to the best results and products, and will also ease adoption by fitting more smoothly into existing workflows. These products will use the same foundation models and techniques but bring in specific end-user knowledge and expertise for each use case and industry. These new capabilities all create further opportunities for those most skilled and experienced at building and using LLMs!

— Louie Peters — Towards AI Co-founder and CEO

Hottest News

OpenAI has added new agentic capabilities to ChatGPT, enabling users to delegate complex tasks such as calendar management, competitor analysis, and spreadsheet updates. With access to a virtual computer and tools like a visual browser and terminal, ChatGPT can now carry out these tasks more efficiently.

Mistral has introduced advanced features, including a deep research mode, multilingual reasoning, and improved image editing, in its Le Chat app. The deep research mode is designed to act as an intelligent assistant, clarifying queries, planning research, searching the web, and synthesizing detailed answers for both individual and enterprise users.

Mistral has unveiled Voxtral, its first family of audio models aimed at business applications. Voxtral can transcribe up to 30 minutes of audio and understand up to 40 minutes, and thanks to its LLM backbone (Mistral Small 3.1 for the larger model), it lets users query audio content, generate summaries, or convert voice commands into real‑time actions such as calling APIs or running functions.

Elon Musk’s chatbot Grok now offers AI companions, such as Ani, a goth anime character, and Bad Rudy, a 3D fox, available to SuperGrok subscribers for $30 per month. The launch follows earlier controversies, including Grok generating antisemitic content, and raises questions about AI’s role in emotional support, echoing concerns seen with Character.AI.

Amazon has announced the preview of AgentCore, a suite of services to help developers deploy and manage AI agents at enterprise scale. Built on Amazon Bedrock and compatible with any model or framework, AgentCore addresses the growing demand for infrastructure that supports production-ready agents capable of reasoning, planning, acting, and learning with minimal human oversight.

Five 5-minute reads/videos to keep you learning

This three-part series explores fine-tuning open-source LLMs, specifically Llama 3.1 and Qwen 2.5, for complex text-to-SQL tasks. Using Group Relative Policy Optimization (GRPO) over 1,600 hours, the project achieved significant improvements on simple and medium queries; however, both models continued to struggle with advanced SQL constructs, such as CTEs and temporal functions.
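The core of GRPO is that each sampled completion is scored relative to the other completions for the same prompt, with no separate value model. The sketch below assumes an execution-match reward (run the predicted and gold SQL on a toy in-memory SQLite database and compare result multisets); the series' actual reward design may differ, and the table, queries, and function names are hypothetical. Only the advantage step is shown, not the clipped policy-gradient update.

```python
import sqlite3
import statistics
from collections import Counter


def execution_match_reward(conn: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> float:
    """1.0 if the predicted query returns the same rows as the gold query
    (order-insensitive), else 0.0. Queries that fail to execute score 0.0."""
    try:
        predicted = Counter(conn.execute(predicted_sql).fetchall())
    except sqlite3.Error:
        return 0.0
    gold = Counter(conn.execute(gold_sql).fetchall())
    return 1.0 if predicted == gold else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage: each reward minus the group mean, divided by the
    group's (population) standard deviation."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER);
INSERT INTO employees VALUES ('ana', 'eng', 120), ('bo', 'ops', 90), ('cy', 'eng', 150);
""")
gold = "SELECT name FROM employees WHERE salary > 100"
group = [  # four hypothetical completions sampled for the same prompt
    "SELECT name FROM employees WHERE salary > 100",
    "SELECT dept FROM employees WHERE salary > 100",  # wrong column
    "SELEC name FROM employees",  # syntax error
    "SELECT name FROM employees WHERE salary >= 101 ORDER BY name DESC",  # equivalent
]
rewards = [execution_match_reward(conn, q, gold) for q in group]
advantages = group_relative_advantages(rewards)
```

Here the rewards are `[1, 0, 0, 1]`, so the two correct queries get advantages near +1 and the two failures near -1. One general property worth noting: if every completion in a group fails (as can happen on hard queries), all advantages are zero and that prompt contributes no learning signal.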

The article walks through a practical GraphRAG implementation that links documents using shared metadata attributes—demonstrated with an animal dataset where documents are connected by habitat. Compared to standard vector retrieval, this graph-based approach delivers more relevant, interconnected results and improves the contextual quality of generated responses.
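A minimal sketch of the metadata-linking idea, assuming a tiny hypothetical animal dataset: documents that share a `habitat` value become neighbors, and retrieval expands from the best-matching seed documents to their neighbors. Simple keyword overlap stands in for vector similarity here to keep the example dependency-free; it is not the article's retriever.

```python
from collections import defaultdict

# Hypothetical documents; the article's dataset is not reproduced here.
DOCS = {
    "lion": {"text": "Lions hunt in prides on the open grassland.", "habitat": "savanna"},
    "zebra": {"text": "Zebras graze in herds and migrate with the rains.", "habitat": "savanna"},
    "clownfish": {"text": "Clownfish shelter among sea anemones.", "habitat": "reef"},
    "parrotfish": {"text": "Parrotfish scrape algae off coral.", "habitat": "reef"},
}


def build_metadata_graph(docs: dict, key: str) -> dict[str, set[str]]:
    """Connect every pair of documents that share the same value for `key`."""
    by_value = defaultdict(set)
    for doc_id, doc in docs.items():
        by_value[doc[key]].add(doc_id)
    graph = {doc_id: set() for doc_id in docs}
    for members in by_value.values():
        for doc_id in members:
            graph[doc_id] |= members - {doc_id}
    return graph


def keyword_score(query: str, text: str) -> int:
    """Stand-in for embedding similarity: count of shared lowercase words."""
    return len(set(query.lower().split()) & set(text.lower().replace(".", "").split()))


def graph_retrieve(query: str, docs: dict, graph: dict, k: int = 1) -> list[str]:
    """Take the top-k seed documents, then append their graph neighbors."""
    ranked = sorted(docs, key=lambda d: keyword_score(query, docs[d]["text"]), reverse=True)
    results = list(ranked[:k])
    for seed in ranked[:k]:
        for neighbor in sorted(graph[seed]):
            if neighbor not in results:
                results.append(neighbor)
    return results


graph = build_metadata_graph(DOCS, "habitat")
hits = graph_retrieve("what do lions hunt", DOCS, graph)
```

A plain top-1 retriever would return only the lion document; the graph hop also pulls in the zebra document because both share the savanna habitat, which is the kind of interconnected context the article credits for better answers.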

This article compares two statistical approaches: univariate modeling, which treats each feature independently, and multivariate modeling, which preserves relationships between features. It explains how Kernel Density Estimation (KDE) and Bayesian Networks (BNs) support the latter, with a customer service call center use case illustrating their practical applications in high-dimensional settings.

This piece examines how Continual Reinforcement Learning (CRL) enables agents to learn over time through memory persistence, echoing human-like intuition and reflection. It surveys architectures such as external memory banks, Transformer-based agents, and generative World Models. It also explores training strategies like Hindsight Experience Replay (HER) and Self-Imitation Learning (SIL), which help agents retain knowledge and learn from past outcomes, addressing the challenge of catastrophic forgetting in traditional RL.
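Hindsight Experience Replay is easy to illustrate on a toy problem. The sketch below assumes a hypothetical 1-D line world with a sparse reward (0 on reaching the goal, -1 otherwise) and the "final" relabeling strategy: a failed episode is stored a second time as if the state the agent actually reached had been the goal, so the buffer contains at least one success to learn from.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Transition:
    state: int
    action: int
    next_state: int
    goal: int
    reward: float


def sparse_reward(achieved: int, goal: int) -> float:
    """0 when the goal is reached, -1 otherwise (a common HER convention)."""
    return 0.0 if achieved == goal else -1.0


def her_relabel_final(episode: list[Transition]) -> list[Transition]:
    """'final' strategy: pretend the goal was the state reached at episode end,
    and recompute each transition's reward against that substitute goal."""
    achieved_goal = episode[-1].next_state
    return [replace(t, goal=achieved_goal, reward=sparse_reward(t.next_state, achieved_goal))
            for t in episode]


# Hypothetical episode: start at 0, goal is 5, agent only manages three +1 steps.
goal, state, episode = 5, 0, []
for action in (1, 1, 1):
    next_state = state + action
    episode.append(Transition(state, action, next_state, goal, sparse_reward(next_state, goal)))
    state = next_state

relabeled = her_relabel_final(episode)
replay_buffer = episode + relabeled
```

The original episode earns -1 on every step, so on its own it teaches nothing; the relabeled copy ends with a reward of 0 for reaching state 3, giving a goal-conditioned policy a useful signal from what would otherwise be a wasted attempt.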

This walkthrough details the use of a U-Net convolutional neural network to segment nuclei in Triple Negative Breast Cancer (TNBC) histology images. It covers the full pipeline, from preprocessing RLE-encoded masks in a public dataset to model training and loss function selection (a combination of BCE and Dice loss). The results, presented through visual comparisons, show the model’s accuracy in identifying nuclei and validate its suitability for biomedical segmentation tasks.
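A combined BCE and Dice loss can be sketched in plain Python over flattened pixel probabilities and a binary mask. The equal 0.5/0.5 weighting and the smoothing constant of 1.0 are assumptions for illustration, not the walkthrough's exact settings; a real training loop would use the framework's tensor ops instead.

```python
import math


def bce_loss(probs: list[float], targets: list[int], eps: float = 1e-7) -> float:
    """Mean binary cross-entropy over pixels; probabilities are clamped to avoid log(0)."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)


def dice_loss(probs: list[float], targets: list[int], smooth: float = 1.0) -> float:
    """1 - soft Dice coefficient: measures overlap of the predicted and true foreground."""
    intersection = sum(p * y for p, y in zip(probs, targets))
    denominator = sum(probs) + sum(targets)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def bce_dice_loss(probs, targets, bce_weight: float = 0.5, dice_weight: float = 0.5) -> float:
    """Weighted sum of the two losses (weights are a hypothetical choice)."""
    return bce_weight * bce_loss(probs, targets) + dice_weight * dice_loss(probs, targets)


# Imbalanced toy mask: 2 nucleus pixels out of 100, and a model that predicts
# a uniform 0.01 everywhere, i.e. it misses both nuclei.
mask = [1, 1] + [0] * 98
probs = [0.01] * 100
```

On this toy mask the per-pixel BCE is only about 0.10, because the 98 easy background pixels dominate the average, while the Dice loss is about 0.74 and clearly flags the missed nuclei. That imbalance is the usual motivation for pairing the two losses when the objects of interest occupy a small fraction of each image.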

Repositories & Tools

Open Deep Research is a simple, configurable, and fully open-source deep research agent that works across multiple model providers, search tools, and MCP servers.

ART optimizes multi-step agent training with GRPO by integrating RULER, which eliminates manual reward engineering.

WebAgent is an LLM-driven agent that learns from self-experience to complete tasks on real websites.

AutoDS is a method for open-ended autonomous scientific discovery (ASD) that uses Bayesian surprise to drive exploration.

Top Papers of The Week

This survey defines Context Engineering as a formal discipline focused on optimizing contextual information for LLMs. It examines foundational components and their integration into systems such as RAG and multi‑agent frameworks. Despite progress, the authors note a persistent research gap in enabling models to produce sophisticated long‑form outputs, highlighted as a priority for future work.

This survey investigates how Retrieval‑Augmented Generation (RAG) strengthens LLMs by incorporating external knowledge. It identifies current limitations in multi‑step inference and analyzes both Reasoning‑Enhanced RAG and RAG‑Enhanced Reasoning approaches. The authors map emerging frameworks, highlight key challenges, and outline paths toward more effective, multimodal, and trustworthy RAG‑Reasoning systems.

This survey systematically deconstructs LLM agent systems through a methodology-centered taxonomy, linking architectural foundations, collaboration mechanisms, and evolutionary pathways. It brings together previously fragmented research, revealing fundamental links between design principles and the complex behaviors that emerge in agentic environments.

This paper introduces Master‑RM, a reward model trained on a dataset augmented with 20,000 adversarial responses, ranging from generic reasoning openers to meaningless statements, all labeled as invalid. Fine‑tuning with this dataset significantly reduced false positives across benchmarks like GSM8K, MATH, and NaturalReasoning.

This paper urges leading AI developers to examine what makes chains of thought (CoTs) “monitorable,” or transparent enough to understand how models derive their answers. The authors suggest CoT monitoring could become a key method for probing reasoning in LLMs, but warn that it is fragile and could be undermined by interventions that reduce transparency or reliability.

Quick Links

1. Anthropic has introduced a targeted transparency framework explicitly aimed at frontier AI models — those with the highest potential impact and risk — while deliberately excluding smaller developers and startups to avoid stifling innovation across the broader AI ecosystem. The proposal focuses on a narrow class of developers: companies that build models surpassing specific thresholds for computational power, evaluation performance, R&D expenditure, and annual revenue.

2. Reflection AI has introduced a new AI agent called Asimov. Unlike other coding assistants, Asimov is designed to analyze not just code, but also emails, Slack messages, project status reports, and other technical documentation to map out exactly how software is built. Reflection’s founding team includes CEO Misha Laskin and CTO Ioannis Antonoglou, both of whom previously worked at Google DeepMind.

3. AWS has launched Kiro, a specification-driven integrated development environment targeting the growing market for agentic coding tools. Kiro’s technical foundation is built on Code OSS, the open-source base of Visual Studio Code, ensuring compatibility with existing developer workflows.

Who’s Hiring in AI

AI Engineer @Informa Group Plc. (London, UK)

Senior Software Engineer, AI-Driven Performance Engineering @NVIDIA (Yokneam, Israel)

Staff AI Researcher- Generative AI @Aledade (Remote/ United States)

LMTS Backend Software Engineer @Salesforce (San Francisco, CA, USA)

ML Engineer @SmartAsset (Remote/ United States)

Generative AI Engineer @Sia (Mumbai, India)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.