TAI #160: More Leaps in AI for Health; Drug Discovery and Diagnosis With Chai-2, AlphaGenome and MAI-DxO

Also, Veo-3 update, Amazon Robotics, Context Engineering, and more!

What happened this week in AI by Louie

While the discourse around AI (including our own!) is often dominated by language models and agents, this week brought a powerful reminder of another, perhaps more profound, revolution happening in parallel: the application of AI to the fundamental code of biology. LLMs themselves are making rapid strides in health, and Microsoft's new MAI-DxO is a prime example of their power in complex reasoning. But this is part of a much larger trend. The new, custom foundation models for biology share many of the same core breakthroughs as LLMs, including transformer and diffusion architectures, and rely on the same infrastructure of GPUs, machine learning expertise, and software libraries. This unified technological wave is now being applied to digitize biology and medicine, shifting it from an empirical art to an engineering discipline.

A striking example of this shift came from Chai Discovery's paper on Chai-2, a multimodal generative model for de novo antibody design. Historically, finding a new antibody has been a stochastic process of screening millions of candidates. Chai-2 flips this paradigm. Designing fewer than 20 antibodies per target, it achieved a 16% hit rate, over 100 times higher than previous computational methods. It found at least one successful binder for 50% of 52 novel disease targets, many chosen specifically because they had no known binders. This ability to reliably generate functional antibodies from scratch, compressing discovery timelines from years to weeks, marks a significant step toward a new era of rational, atomic-level molecular engineering.

At the same time, Google DeepMind unveiled AlphaGenome, a model designed to decipher the complex regulatory instructions within the 98% of the human genome that doesn't code for proteins. While its predecessor, AlphaFold, illuminated protein structures, AlphaGenome tackles the "how" and "when" of gene expression. By processing vast DNA sequences of up to a million base pairs at high resolution, it can predict how a single genetic mutation impacts thousands of molecular properties and processes like RNA splicing. This is a crucial tool for understanding the root causes of genetic diseases and identifying new therapeutic targets, moving us closer to a complete, functional map of our genetic instruction manual.

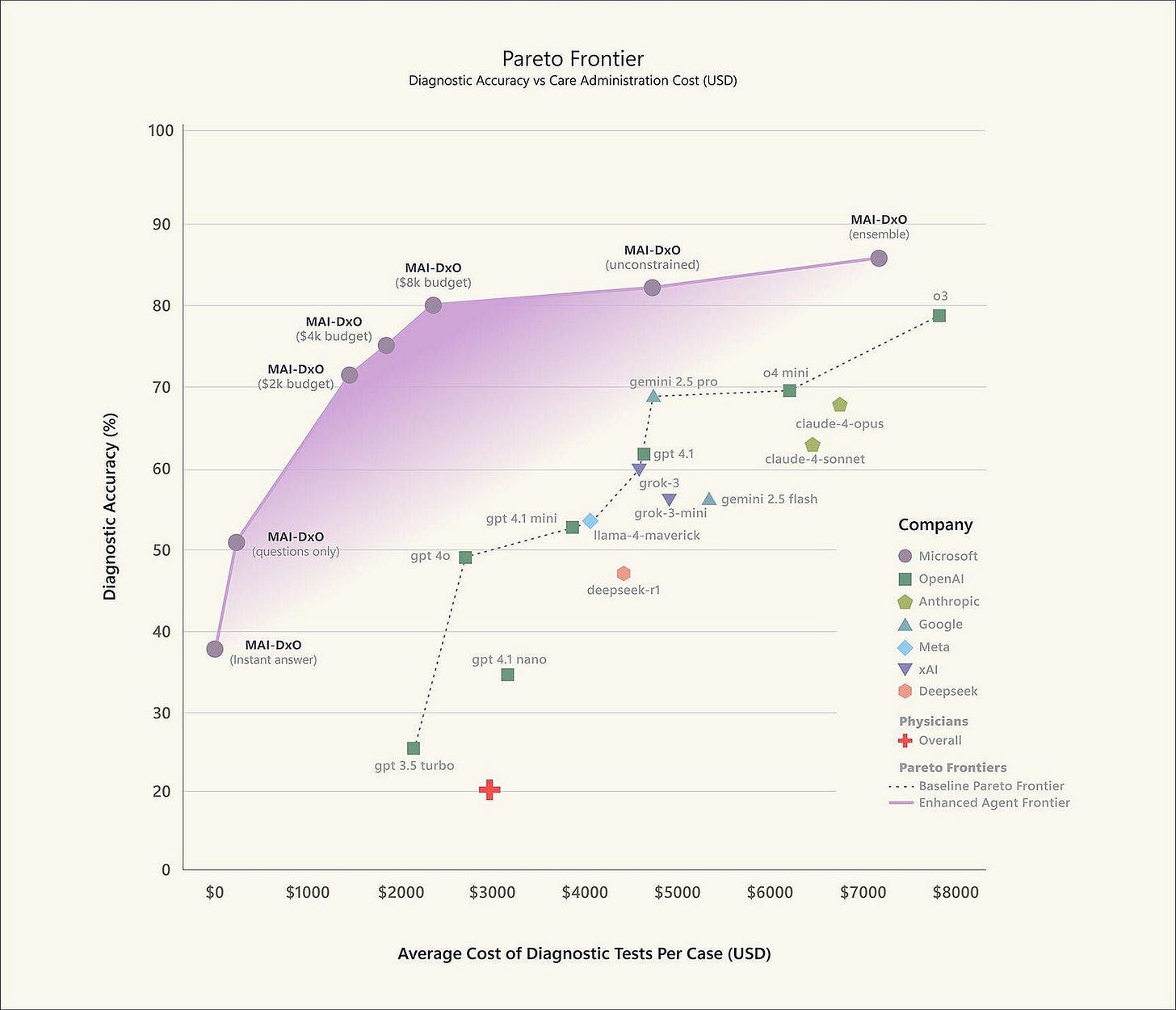

This progress in foundational science was matched by a leap in clinical application from Microsoft. To move beyond static benchmarks like the USMLE exam, they introduced the Sequential Diagnosis Benchmark (SDBench). This new benchmark transforms 304 complex cases from the New England Journal of Medicine into interactive diagnostic simulations where an AI must ask questions, order tests, and weigh costs, mirroring the complexity of real clinical decision-making. On this challenging benchmark, Microsoft tested its AI Diagnostic Orchestrator (MAI-DxO), a model-agnostic system that simulates a panel of virtual physicians. The results were formidable: using OpenAI’s o3, MAI-DxO achieved 85.5% diagnostic accuracy, more than four times the accuracy of experienced human doctors, all while cutting diagnostic costs.

Grounding this new wave of AI for medicine research in commercial reality, Isomorphic Labs, Alphabet's drug discovery arm, announced it is staffing up and getting "very close" to putting its first AI-designed drugs into human clinical trials. Born from the AlphaFold breakthrough and bolstered by a $600 million external funding round in April and major partnerships with Novartis and Eli Lilly, Isomorphic's progress signals that this new generation of AI-driven science is rapidly moving from the lab to the clinic. While the degree of AI involvement in any given program can still be a blurred line (albeit a rapidly increasing one), Isomorphic's candidates will join the roughly 80 drug candidates in Phase I-III human trials worldwide whose core molecular design was generated with AI. Together, these advances offer a blueprint for how AI can help deliver precision and efficiency in healthcare.

Why should you care?

This week’s announcements are not isolated events; they represent the emergence of a cohesive, AI-native stack for medicine. Together, these tools form a powerful pipeline: AlphaGenome can help understand the genomic basis of a disease, Chai-2 and similar competing models can design a novel protein-based drug to treat it, and a system like MAI-DxO demonstrates how AI can apply this knowledge to diagnose patients with unprecedented accuracy. This is the digitization of biology in action. While these models alone won’t find and verify a new drug (more data needs to be collected for many areas of biology, and many lab experiments are still needed to verify and iterate on predictions), we think the emergence of these biology-based foundation models could be the catalyst for far more efficient drug design.

While these specialized models are at the cutting edge, LLMs are proving to be powerful tools for medical reasoning themselves. I’ve seen numerous credible reports of people using web-research-powered models like o3 to get a life-saving "second opinion," particularly for rare or complex diseases where a clear diagnosis is elusive. There have been stories of people correctly identifying time-critical conditions like meningitis after being dismissed by hospitals. Of course, one shouldn't take an LLM's output as medical truth, but as a source of well-reasoned questions and possibilities to discuss with a human doctor, it can be an invaluable tool for patient advocacy.

The bigger picture here is a fundamental paradigm shift. Traditional biology and medicine have been largely empirical sciences, reliant on observation, screening, and trial-and-error. The progress we're seeing now points toward a future where biology becomes a more deterministic engineering discipline. Instead of sifting through millions of random possibilities to find a drug, we can design it from first principles. Instead of a diagnosis being limited by a single physician's experience, it can be informed by the collective knowledge embedded in an AI. This transition, while less flashy than a new chatbot, holds the potential for some of the most profound impacts AI will have on society—tackling the core challenges of human health and longevity.

— Louie Peters — Towards AI Co-founder and CEO

This issue is brought to you thanks to Snyk:

Join Snyk and OWASP Leader Vandana Verma Sehgal on Tuesday, July 15, at 11:00 AM ET for a live session covering:

✓ The top LLM vulnerabilities

✓ Proven best practices for securing AI-generated code

✓ How Snyk’s AI-powered tools automate and scale secure development

See a live demo, plus earn 1 CPE credit!

Hottest News

1. Ilya Sutskever Becomes CEO of Safe Superintelligence

Ilya Sutskever is now CEO of Safe Superintelligence, stepping in after Meta hired former CEO Daniel Gross as part of its AI talent push. Sutskever turned down acquisition offers to keep the company independent, with a focus on building safe AI systems. Co-founder Daniel Levy will assume the role of president, leading the technical team, while Sutskever will double down on the company’s original mission.

2. Perplexity Launches a $200 Monthly Subscription Plan

Perplexity is launching a new $200-per-month plan called Perplexity Max. The subscription includes unlimited access to its Labs tool for generating spreadsheets and reports, plus early access to upcoming products, most notably Comet, its AI-powered browser. Max users also get priority access to any services using top-tier models like OpenAI o3-pro and Claude Opus 4.

3. Google Rolls Out Its New Veo 3 Video-Generation Model Globally

Google is rolling out its Veo 3 video generation model to Gemini users in over 159 countries. The feature, available only through the AI Pro plan, allows subscribers to create up to three videos per day with text prompts. First introduced in May, Veo 3 can produce videos up to eight seconds long.

4. Anthropic Introduces Hooks for Claude Code

Anthropic has introduced Claude Code hooks, allowing users to customize behavior through user-defined shell commands integrated at key lifecycle points. These hooks ensure consistent actions for notifications, automatic formatting, logging, feedback, and permissions, enhancing control and automation. Users can receive input alerts, format files after edits, log command executions, and apply usage restrictions, ensuring compliance and adherence to conventions.

5. Amazon Deploys Its One Millionth Robot and Launches DeepFleet

Amazon has announced the deployment of its one millionth robot, alongside the launch of DeepFleet, a new foundation model built to coordinate its warehouse robots more efficiently. Amazon says DeepFleet has already improved fleet speed by 10%.

6. TNG Technology Consulting Releases R1T2 Chimera

TNG Technology Consulting has released DeepSeek-TNG R1T2 Chimera, a new Assembly-of-Experts model designed to combine speed and performance. Built by merging three high-performing models, R1-0528, R1, and V3-0324, R1T2 shows how expert-layer blending can enhance LLM efficiency. In benchmarks, it runs over 20% faster than R1 and more than twice as fast as R1-0528.

Five 5-minute reads/videos to keep you learning

1. Context Engineering for Agents

This article explores context engineering, the practice of carefully managing the information that fills the context window as an AI agent navigates a task. It introduces four core strategies: writing new content, selecting relevant information, compressing details, and isolating critical data. Through examples from widely used agents and research papers, the post explains how these techniques shape agent behavior and performance.

This guide walks through building multi-agent systems using LangGraph to handle complex, multi-step tasks. It introduces three key design patterns: the Network pattern for direct agent-to-agent collaboration; the Supervisor pattern, where a central agent delegates tasks; and the Hierarchical pattern, which scales the supervisor model for larger systems. The article also provides hands-on Python examples for the Network and Supervisor patterns, showing how specialized agents like researchers and coders can collaborate through “handoffs” that transfer tasks and data smoothly between agents.

3. Implementing Tensor Contractions in Modern C++

This technical deep dive explains how to implement tensor contractions, a fundamental operation in many AI models, using modern C++. Starting with matrix multiplication, it expands to higher-dimensional tensors, showing how to define output dimensions and compute coefficients based on intersecting indices. The post includes a full C++ implementation from scratch, covering data structures, index mapping, and computational logic, along with optimization tips like multi-threading for better performance.
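To make the idea of contracting over intersecting indices concrete, here is a minimal sketch in Python rather than the article's C++. It is not the article's implementation: the function name `contract`, the nested-list tensor representation, and the einsum-style index labels are all assumptions chosen for illustration, and it makes no attempt at the optimizations the post discusses.

```python
from itertools import product


def contract(a, a_idx, b, b_idx):
    """Contract nested-list tensors a and b over the index labels they share.

    a_idx / b_idx are strings with one label per axis, e.g. "ij" and "jk".
    Labels present in both are summed over; the rest form the output axes.
    (Hypothetical helper for illustration; repeated labels within a single
    tensor are not supported.)
    """
    def shape(t):
        dims = []
        while isinstance(t, list):
            dims.append(len(t))
            t = t[0]
        return dims

    def get(t, pos):
        for p in pos:
            t = t[p]
        return t

    # Record the extent of every label and check that shared labels agree.
    size = {}
    for labels, t in ((a_idx, a), (b_idx, b)):
        for label, dim in zip(labels, shape(t)):
            if size.setdefault(label, dim) != dim:
                raise ValueError(f"dimension mismatch on index {label!r}")

    shared = [l for l in a_idx if l in b_idx]             # summed indices
    free = [l for l in a_idx + b_idx if l not in shared]  # output indices

    def build(labels, fixed):
        if not labels:
            # All output indices are fixed: sum over the shared ones.
            total = 0
            for vals in product(*(range(size[l]) for l in shared)):
                env = {**fixed, **dict(zip(shared, vals))}
                total += (get(a, [env[l] for l in a_idx])
                          * get(b, [env[l] for l in b_idx]))
            return total
        head, rest = labels[0], labels[1:]
        return [build(rest, {**fixed, head: i}) for i in range(size[head])]

    return build(free, {})


# Matrix multiplication is the special case "ij" x "jk" -> "ik".
print(contract([[1, 2], [3, 4]], "ij", [[5, 6], [7, 8]], "jk"))
```

With labels `"ij"` and `"jk"` this reduces to ordinary matrix multiplication; using `"ij"` for both operands sums over every index and returns a scalar.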

4. The Silent Threats: How LLMs Are Leaking Your Sensitive Data

This article highlights the hidden data leakage risks associated with LLMs. It covers Timing Side-Channel Attacks (which use response delays to infer inputs), Backdoor Attacks (where models are manipulated to release sensitive data), and Prompt Injection (which hijacks model instructions to extract information). The post also discusses defenses, such as cache isolation, stricter rate limits, and stronger safety alignment, emphasizing the need for more comprehensive security practices in LLM development.

5. Human-in-the-Loop (HITL) with LangGraph: A Practical Guide to Interactive Agentic Workflows

This piece demonstrates how to build Human-in-the-Loop (HITL) workflows with LangGraph, allowing humans to guide and intervene in agent tasks. It shows how HITL lets users pause execution, review or edit an agent’s actions, and even rewind to earlier points for debugging. With clear examples and code snippets, it makes the case for integrating HITL as a way to improve the safety, efficiency, and reliability of autonomous systems through well-placed human oversight.

Repositories & Tools

1. Trae Agent is an LLM-based agent for general-purpose software engineering tasks.

2. OmniGen 2 is a multimodal model that features two distinct decoding pathways for text and image modalities.

3. ERNIE is a new family of large-scale multimodal models comprising 10 distinct variants.

4. CausalVLR is a Python open-source framework for causal relation discovery and causal inference.

5. Symbolic AI is a neuro-symbolic framework that combines classical Python programming with the differentiable, programmable nature of LLMs.

Top Papers of The Week

1. Does Math Reasoning Improve General LLM Capabilities? Understanding Transferability of LLM Reasoning

This study finds that fine-tuning large language models on math reasoning tasks using reinforcement learning (RL) improves generalization to both reasoning and non-reasoning tasks, whereas supervised fine-tuning (SFT) often reduces overall capabilities. Through analyses of latent-space principal components and token distributions, the authors trace this difference to SFT-induced representation drift. They propose RL fine-tuning as a more reliable approach for developing transferable reasoning skills in LLMs.

2. Wider or Deeper? Scaling LLM Inference-Time Compute with Adaptive Branching Tree Search

This paper presents AB-MCTS, an inference-time algorithm based on Monte Carlo Tree Search, traditionally used in board games like Go. The method adapts this approach to language tasks by applying Thompson Sampling to decide whether to continue refining a current answer or explore an alternative response path. Unlike fixed-temperature sampling or deterministic prompting, this adaptive branching technique lets the model allocate computation to the most promising parts of the solution space in real time, enabling more flexible and efficient reasoning during inference.
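The wider-or-deeper decision can be illustrated with a toy Thompson Sampling step. This is a simplified sketch, not the paper's algorithm: it assumes each option has a Beta posterior over a success/failure score, and the names `choose_action`, `children`, and `gen_stats` are hypothetical. The special `"GEN"` arm stands for generating a fresh answer (going wider), while picking an existing child means refining that answer (going deeper).

```python
import random


def choose_action(children, gen_stats, rng):
    """Thompson-sample one Beta draw per arm and return the winning arm.

    children:  {child_id: (successes, failures)} for existing answers
    gen_stats: (successes, failures) for the "generate a new answer" arm
    rng:       a random.Random instance, passed in for reproducibility
    """
    # Beta(1 + s, 1 + f) is the posterior under a uniform prior.
    draws = {"GEN": rng.betavariate(1 + gen_stats[0], 1 + gen_stats[1])}
    for child_id, (s, f) in children.items():
        draws[child_id] = rng.betavariate(1 + s, 1 + f)
    return max(draws, key=draws.get)


rng = random.Random(0)
# One answer with a strong track record and an unproven "generate" arm.
children = {"answer_a": (8, 2)}
picks = [choose_action(children, (1, 3), rng) for _ in range(1000)]
print({arm: picks.count(arm) for arm in set(picks)})
```

Because each arm is chosen in proportion to how plausible it is that the arm is best, compute drifts toward refining promising answers while still occasionally sampling a new one.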

3. GLM-4.1V-Thinking: Towards Versatile Multimodal Reasoning with Scalable Reinforcement Learning

GLM-4.1V-Thinking improves multimodal reasoning by using Reinforcement Learning with Curriculum Sampling, boosting performance across a wide range of tasks, including STEM problem-solving and video analysis. The model outperforms Qwen2.5-VL-7B on nearly all benchmarks and matches or exceeds the performance of much larger models, such as Qwen2.5-VL-72B and GPT-4o. Offered as an open-source model, it provides competitive results and establishes a new performance baseline for versatile multimodal reasoning.

4. Do Large Language Models Need a Content Delivery Network?

This paper explores whether LLMs could benefit from a dedicated content delivery layer. The authors argue that, beyond fine-tuning and in-context learning, managing knowledge through KV caches could enable both more modular control over knowledge updates and more efficient model serving at lower cost and higher speed. They introduce the concept of a Knowledge Delivery Network (KDN), a system component that optimizes how KV cache data is stored, transferred, and composed across LLM instances and related compute or storage resources.

Quick Links

1. DeepMind subsidiary preps first human trials of AI-designed drugs. Isomorphic Labs, spun out of Google DeepMind, is on the verge of beginning human clinical trials for cancer treatments designed using its AlphaFold-based AI. President Colin Murdoch stated that the company is “getting very close” to this milestone, marking a significant step in leveraging AI to accelerate drug discovery and potentially revolutionize healthcare.

2. Chai Discovery Team introduces Chai-2, a multimodal AI model that enables zero-shot de novo antibody design. Achieving a 16% hit rate across 52 novel targets using ≤20 candidates per target, Chai-2 outperforms prior methods by over 100x and delivers validated binders in under two weeks, eliminating the need for large-scale screening.

3. OpenAI is distancing itself from Robinhood’s latest crypto push after the trading platform began offering tokenized shares of OpenAI and SpaceX to users in Europe. “These ‘OpenAI tokens’ are not OpenAI equity,” OpenAI wrote on X. Users from the U.S. cannot access these tokens due to regulatory restrictions.

Who’s Hiring in AI

Research & Community Manager — Gaming AI @Microsoft Corporation (Redmond, WA, USA)

Conversational AI Designer @General Motors (Multiple US Locations)

Engineering Manager — Gen AI @HighLevel (Remote)

BRAIN AI Researcher @WorldQuant (Yerevan, Armenia)

Senior Machine Learning Scientist (Generative AI) — Viator @Tripadvisor (Remote/Hybrid)

Junior Software Engineer, GenAI @Algolia (Remote)

Generative AI Engineer @Radian Guaranty, Inc. (Remote)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.

Think a friend would enjoy this too? Share the newsletter and let them join the conversation.