TAI #111: What Does DeepSeek's 10x Cheaper Reused LLM Input Tokens Unlock?

Also: inference costs outpacing Moore's law, Gemini 1.5 Pro tops LMSYS, Character AI acqui-hire, Gemma 2, FLUX.1, Zamba2.

What happened this week in AI by Louie

This week, our eyes were again on the rapid progress in LLM inference, in particular the possibility of significantly reducing the cost of reused input tokens with context caching. We might be laboring this point, but the fall in LLM inference prices is truly unprecedented. Reused input tokens can now be processed with DeepSeek-V2 for 4,300x less than GPT-3 (text-davinci-002) cost just 24 months ago. Over the same period, MMLU scores have risen from 60% to 79%, and the maximum context window has grown ~60x. At the peak of Moore’s law, the cost per transistor fell roughly 4,000x over the 14 years up to 1982, and transistors were not becoming fundamentally more capable at the same time! At this pace, it is hard to imagine this progress not soon having a global impact. This week's context caching innovations also tie into a great new paper on how LLM performance can benefit from repeated inference attempts, or “inference-time scaling laws.” Together, we think these open a very powerful new avenue for unlocking economically useful LLM capabilities.
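To compare the two rates on the same footing, the sketch below annualizes them, assuming a constant compound rate of decline (a simplification). It also reproduces the ~4,300x ratio under one assumption not stated above: that text-davinci-002 input cost $0.06 per 1K tokens ($60 per million) in mid-2022.

```python
def annual_factor(total_reduction: float, years: float) -> float:
    """Constant yearly reduction factor that compounds to `total_reduction` over `years`."""
    return total_reduction ** (1.0 / years)

# Assumption (price not stated in the text): text-davinci-002 input at $0.06 per 1K tokens,
# i.e. $60 per million, versus $0.014 per million for DeepSeek cached input.
davinci_per_m = 60.0
deepseek_cached_per_m = 0.014
print(f"Price ratio: ~{davinci_per_m / deepseek_cached_per_m:,.0f}x")

llm_per_year = annual_factor(4300, 2)     # reused input tokens, ~24 months
moore_per_year = annual_factor(4000, 14)  # $ per transistor, 14 years to 1982
print(f"LLM reused input tokens: ~{llm_per_year:.0f}x cheaper per year")
print(f"Transistors at Moore's-law peak: ~{moore_per_year:.2f}x cheaper per year")
```

This prints a ratio of ~4,286x (consistent with the ~4,300x figure under the assumed price), ~66x per year for reused LLM input tokens, and ~1.81x per year for transistors.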

Google DeepMind followed Meta’s week in the spotlight with a flurry of activity. It released a new experimental version of Gemini 1.5 Pro, which, for the first time, put DeepMind at the top of the LMSYS arena, suggesting it has finally caught up in the LLM capability race on some measures (though it is still behind on the LiveBench and ZeroEval benchmarks). DeepMind also announced that Gemini 1.5 Flash will drop 5x in price next week (to half the cost of GPT-4o-mini), a move we think partly reflects progress in distillation but is also likely a response to competitive pressure from Llama 3.1. Finally, it released Gemma 2 2B, a small model that is impressive for its size and benefits from model distillation (a technique we expect to join the LLM builder toolkit after Llama 3.1, as we discussed last week).

Less than 24 hours after the Gemini Flash price announcement, inference pricing dropped another level when China-based DeepSeek announced Context Caching on Disk for its API. This automatically reduces the cost of reused input tokens by 90%, down to $0.014 per million tokens, making them 10x cheaper than GPT-4o-mini input tokens. The mechanism stores input content that is expected to be reused in a distributed disk array. When the same input is detected again, it is retrieved from the cache instead of being recomputed. This not only slashes API costs but also cuts latency (from 13 seconds to just 500 milliseconds for large 128K-token prompts). The cost reduction opens up new uses for LLMs in scenarios where the same input tokens are queried repeatedly, such as multi-step analysis of a large dataset, repeated questioning of a full code base, and multi-turn conversations. Google’s Gemini is the only other model family that offers context caching so far, but its price reduction is not nearly as large, and caching is not applied automatically. However, the imminent 5x reduction in Gemini Flash pricing will help here, too!
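As a rough illustration of the idea (not DeepSeek’s implementation), the toy cache below keys chunk-aligned prompt prefixes by a content hash, so a new request only has to compute the tokens after its longest cached prefix. A Python dict stands in for the distributed disk array, the 4-token chunk size is an arbitrary demo choice, and the stored string is a placeholder for whatever intermediate model state (typically the attention key/value cache) a real system would save; all of these are assumptions.

```python
import hashlib
from typing import Dict, List, Tuple

CHUNK = 4  # hypothetical block size in tokens, chosen only for the demo

def prefix_key(tokens: List[int]) -> str:
    """Content hash of a token prefix, used as the cache key."""
    return hashlib.sha256(repr(tokens).encode()).hexdigest()

class PrefixCache:
    """Toy stand-in for a disk-backed context cache (a dict instead of a disk array)."""

    def __init__(self) -> None:
        self.store: Dict[str, str] = {}

    def process(self, tokens: List[int]) -> Tuple[int, int]:
        """Return (cached_tokens, computed_tokens) for one request."""
        # Find the longest chunk-aligned prefix that is already cached.
        hit = 0
        for end in range(CHUNK, len(tokens) + 1, CHUNK):
            if prefix_key(tokens[:end]) in self.store:
                hit = end
            else:
                break
        # "Compute" the remaining tokens and store each new chunk-aligned prefix.
        for end in range(hit + CHUNK, len(tokens) + 1, CHUNK):
            self.store[prefix_key(tokens[:end])] = f"placeholder-state-{end}-tokens"
        return hit, len(tokens) - hit

cache = PrefixCache()
shared = list(range(16))                   # e.g. a 16-token system prompt
print(cache.process(shared + [100, 101]))  # nothing cached yet
print(cache.process(shared + [200, 201]))  # shared prefix is reused
```

The first request computes all 18 tokens, `(0, 18)`; the second reuses the 16-token shared prefix and computes only its 2 new tokens, `(16, 2)`. This is also why, in a prefix-based cache, placing stable content (system prompts, documents, examples) at the start of a prompt tends to maximize cache hits.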

In parallel with these developments on inference price, new research on inference-time scaling laws suggests that we can significantly improve LLM performance by increasing the number of attempts a model makes at each problem. This approach, known as repeated sampling, allows weaker models to outperform stronger ones on certain tasks. For example, DeepSeek-Coder-V2-Instruct, given 250 attempts, solves 56% of SWE-bench Lite, surpassing the 43% achieved with a single attempt by more capable models like GPT-4o. The effectiveness of this method depends on two factors: coverage (the share of problems solved by at least one of the attempts) and precision (the ability to identify the correct solution among many candidates).
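Coverage is commonly estimated with the standard unbiased pass@k estimator: if n samples are drawn for a problem and c of them are correct, pass@k = 1 - C(n-c, k) / C(n, k), the probability that a random subset of k samples contains at least one correct one. The sketch below averages this across problems and adds majority voting as one simple, verifier-free way to address precision; the per-problem correct counts in the example are invented for illustration.

```python
from collections import Counter
from math import comb
from typing import List, Sequence

def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k samples (out of n, with c correct) is correct."""
    if n - c < k:
        return 1.0  # every subset of size k must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def coverage(correct_counts: Sequence[int], n: int, k: int) -> float:
    """Expected fraction of problems solved by at least one of k attempts."""
    return sum(pass_at_k(n, c, k) for c in correct_counts) / len(correct_counts)

def majority_vote(answers: List[str]) -> str:
    """Pick the most frequent final answer (a simple precision strategy without a verifier)."""
    return Counter(answers).most_common(1)[0][0]

counts = [0, 1, 5]  # hypothetical: correct samples out of n=10 for three problems
print(f"coverage@1  = {coverage(counts, n=10, k=1):.2f}")
print(f"coverage@10 = {coverage(counts, n=10, k=10):.2f}")
print(majority_vote(["42", "41", "42"]))
```

With these counts, coverage rises from 0.20 at k=1 to 0.67 at k=10: the problem with a single correct sample is only solved reliably once enough attempts are made, while the problem with no correct sample never is.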

Why should you care?

These advancements are synergistic. The cost savings on reused input tokens make it economically feasible to use repeated sampling extensively, even with large and complex datasets. We expect this to make some agentic LLM systems far more feasible in terms of both cost and latency. Far cheaper reused input context tokens also allow for affordable multi-shot learning, packing large numbers of examples into the input prompt as a potential alternative to fine-tuning. This can sometimes be more efficient at inference time, too, since one model can serve many different input prompts with large batch sizes on the same GPU cluster, rather than running multiple fine-tuned models. It can also make long context windows with cached context a more viable alternative to RAG in some scenarios, for example, chatting with an entire set of documentation held in cached context. The reduced latency from context caching can also improve the user experience for chat applications with very long multi-turn conversations, or for repeatedly questioning a code base or dataset.
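A back-of-the-envelope calculation shows why this matters for repeated sampling. The sketch assumes 250 attempts (the SWE-bench Lite figure above) that each resend the same 100K-token context, a cache-miss price of $0.14 per million tokens (implied by the 90% reduction to $0.014), that the first call misses and every later call hits, and it ignores output tokens and any non-shared part of the prompt.

```python
MISS_PER_M = 0.14   # $ per million uncached input tokens (implied by the 90% figure)
HIT_PER_M = 0.014   # $ per million cached input tokens

def input_cost(prefix_tokens: int, calls: int, cached: bool) -> float:
    """Input cost in $ of `calls` requests that share the same prefix.

    Simplifying assumptions: only the shared prefix is counted, the first call
    always misses, and (when cached=True) every later call hits the cache.
    """
    first = prefix_tokens * MISS_PER_M / 1e6
    rest_rate = HIT_PER_M if cached else MISS_PER_M
    rest = (calls - 1) * prefix_tokens * rest_rate / 1e6
    return first + rest

print(f"No cache:   ${input_cost(100_000, 250, cached=False):.2f}")
print(f"With cache: ${input_cost(100_000, 250, cached=True):.2f}")
```

Under these assumptions, the shared context costs $3.50 without caching and about $0.36 with it, close to the 10x headline saving.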

We think context caching is in its early days, and DeepSeek’s API still struggles with access to compute (likely due to US restrictions on exporting AI chips to China); however, we expect leading AI labs to focus on replicating these capabilities. Before long, we think LLM builders can expect 5–10x price reductions for reused input context and should begin considering what this could unlock for their use cases. What would you do differently if system prompts were 10x cheaper than other tokens and up to 20x faster to process?

— Louie Peters — Towards AI Co-founder and CEO

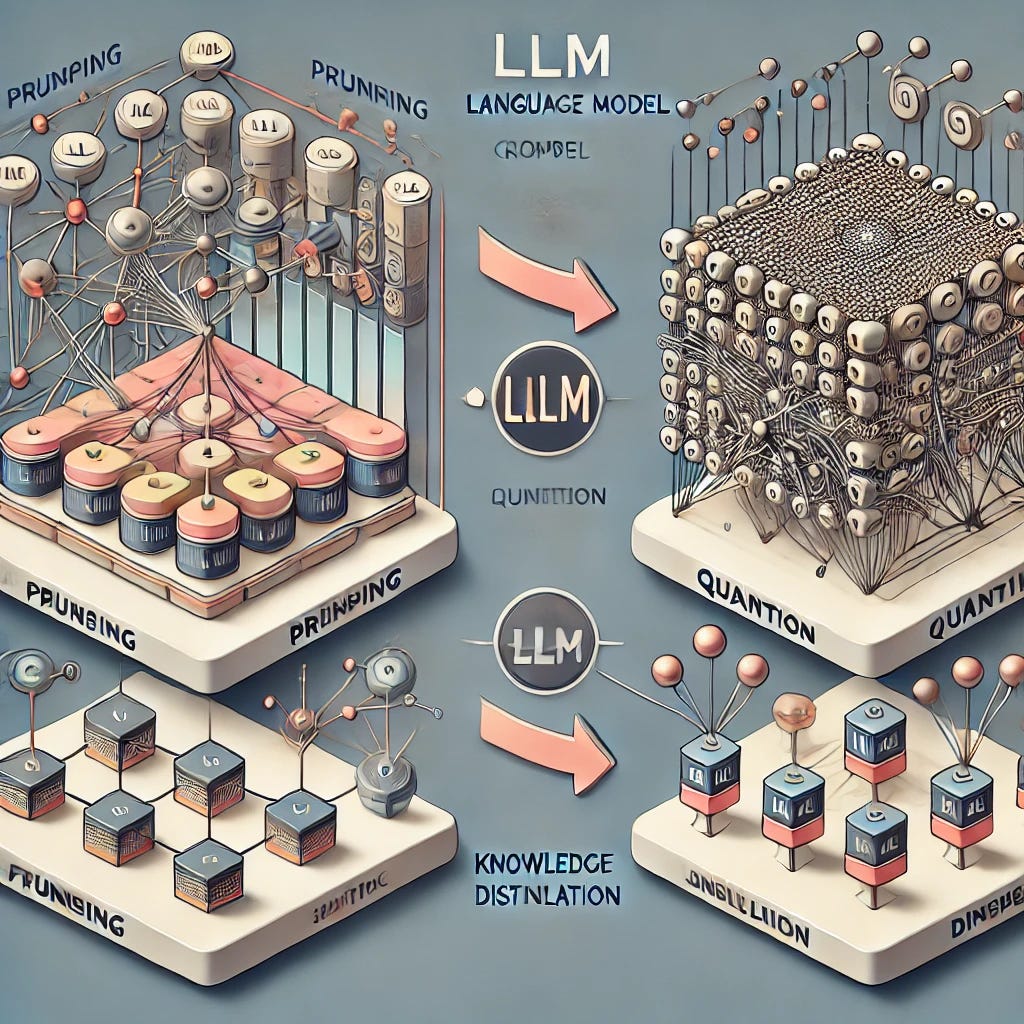

The evolution of LLM compression methods: from QuIP to AQLM with PV-Tuning

While large language models open up a world of possibilities, they can also be incredibly expensive and complex to deploy. That’s why researchers are racing to find ways to compress these models without sacrificing performance. Learn about the evolution of extreme LLM compression methods in our latest article. Spoiler alert: a brief “rivalry” between two research teams led to scientific breakthroughs.

Read the complete article on Extreme LLM Compression here!

Hottest News

1. Musical Chairs at the Leading AI Labs?

OpenAI’s recent streak of setbacks on key talent continued, with co-founder John Schulman announcing that he is leaving to join Anthropic, where he will return to hands-on technical work and focus on AI alignment. Greg Brockman also announced he is on sabbatical from OpenAI until the end of the year. Meanwhile, Google DeepMind acquired the 30-person model training team from Character AI, bringing transformer co-inventor Noam Shazeer back to Google. To us, this looks like a $2.5bn acqui-hire structured to reward the remaining Character employees and investors as Character pivots to building on external foundation models. We think this is more evidence of how hard it is to raise enough capital to train competitive foundation models, even when focusing on niche applications. But it also shows the value of key AI talent!

2. DeepSeek API Launches Context Caching on Disk

DeepSeek has implemented Context Caching on Disk. This approach automatically caches frequently referenced contexts on distributed storage, reducing API costs for reused input by up to 90%. For a heavily reused 128K-token prompt, first-token latency drops from 13s to just 500ms.

DeepMind released Gemma 2 2B, a model small enough to fit on a smartphone that still offers GPT-3.5-level performance. It beats both GPT-3.5 and Mixtral on the Chatbot Arena and scores 51.3 on 5-shot MMLU. Gemma achieves this performance at such a small size via distillation from a larger model.
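Distillation here means training the small model on the larger model’s full next-token probability distribution rather than only on the single observed next token. Below is a minimal per-token sketch of such a loss, written from the general technique rather than Google’s recipe; the KL form, the temperature parameter, and the example logits are our illustrative choices.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities (max subtracted for numerical stability)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: List[float], student_logits: List[float],
                      temperature: float = 1.0) -> float:
    """KL(teacher || student) over the vocabulary at a single token position."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical 3-token vocabulary: the loss is zero only when the student matches the teacher.
print(distillation_loss([2.0, 1.0, 0.1], [2.0, 1.0, 0.1]))
print(distillation_loss([2.0, 1.0, 0.1], [0.1, 1.0, 2.0]))
```

Compared with a one-hot next-token target, the teacher’s distribution also tells the student how plausible the alternative tokens are, which is one intuition for why distillation helps small models.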

4. European Artificial Intelligence Act Comes Into Force

The European Artificial Intelligence Act (AI Act), the world’s first comprehensive regulation on artificial intelligence, has entered into force. The AI Act is designed to ensure that AI developed and used in the EU is trustworthy, with safeguards to protect people’s fundamental rights. Most of its rules will start applying on 2 August 2026, while the rules for general-purpose AI models will apply 12 months after entry into force, from 2 August 2025.

5. Stable Diffusion Creators Launch Black Forest Labs, Secure $31M for FLUX.1 AI Image Generator

Black Forest Labs, a startup founded by the original creators of Stable Diffusion, unveiled its brand new FLUX.1 text-to-image model suite. FLUX.1 has 12 billion parameters and utilizes a hybrid architecture of multimodal and parallel diffusion transformer blocks. It comes in three variants: the closed-source FLUX.1 [pro] available via API, the open-weight FLUX.1 [dev] for non-commercial use, and FLUX.1 [schnell], a faster version released under an Apache 2.0 license for personal and local development.

6. Zyphra Released Zamba2-2.7B: An Efficient and Faster Small Language Model

Zyphra released Zamba2-2.7B, a hybrid Mamba2 (state space model) and transformer model that represents a significant advance in efficiency and performance. The model is trained on Zyphra’s proprietary dataset of approximately 3 trillion tokens, which allows it to match the performance of larger models like Zamba1-7B and other leading 7B models. It follows several other models, such as Samba, that have recently shown hybrid Mamba models can be competitive with transformers at large training compute budgets, at the ~2–4B parameter and ~3T token scale. We are excited to see if these can be scaled up to hundreds of billions of parameters!

7. Cohere Released Cohere Prompt Tuner For Prompt Optimization

Cohere introduced Prompt Tuner, a customizable optimization and evaluation loop to refine prompts for generative language use cases. The evaluation criteria are customizable, and the results help guide an instruction generation model to generate a new proposed prompt. It is available to everyone in beta on the Cohere Dashboard.

8. AI Investment Driving Capex Growth at US Cloud Leaders

The companies behind the major US cloud computing platforms all accelerated capex in Q2, driven in part by GPU purchases and data center land, leases, and construction. Amazon’s overall capex increased to $17.6B (+54% year on year), Microsoft to $13.9B (+55%), Google to $13.2B (+91%), and Meta to $8.5B (+36%). We expect this is driven by both inference data centers and training clusters for LLMs, but the companies also have other AI use cases for GPUs, such as recommender systems.
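Working backwards from the quoted growth rates gives a sense of the absolute step-up. This sketch simply divides each Q2 figure by one plus its year-on-year growth; because the growth rates are rounded, the implied year-earlier figures are approximate.

```python
def implied_prior(current: float, growth: float) -> float:
    """Capex a year earlier implied by the current value and its year-on-year growth."""
    return current / (1.0 + growth)

capex_q2 = {  # (Q2 capex in $B, year-on-year growth) as quoted above
    "Amazon": (17.6, 0.54),
    "Microsoft": (13.9, 0.55),
    "Google": (13.2, 0.91),
    "Meta": (8.5, 0.36),
}

total_now = sum(current for current, _ in capex_q2.values())
total_prior = sum(implied_prior(c, g) for c, g in capex_q2.values())
for company, (current, growth) in capex_q2.items():
    print(f"{company}: ~${implied_prior(current, growth):.1f}B -> ${current:.1f}B")
print(f"Combined: ~${total_prior:.1f}B -> ${total_now:.1f}B")
```

Combined, the four went from roughly $33.6B to $53.2B in quarterly capex, an increase of about $20B in a year.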

Five 5-minute reads/videos to keep you learning

1. Why Is Llama 3.1 Such a Big Deal?

This post addresses 11 critical questions about Llama 3.1 for managers and leaders, such as why the open-source nature of Llama 3.1 is beneficial compared to closed-source models, the available integrations with public cloud providers, deployment infrastructure, the advantages of Llama 3.1 in terms of performance, cost, and potential cost savings, and more.

2. Built-In AI Web APIs Will Enable a New Generation of AI Startups

The latest and highest-scoring frontier models are almost indistinguishable from the general user’s point of view. At the same time, the cost of training frontier AI models keeps rising while improvements are marginal. This blog surveys various frontier AI models to support the view that some customers may only need small models, some will need big models, and many will want to combine both in various ways.

In AI, we have newer and improved models, plenty of investment, improving infrastructure, and falling costs, but we haven’t seen proportionate revenue and productivity gains. This essay highlights the major AI developments between March and June to show how AI engineers can bridge the gap from capabilities to product.

The article highlights how present-day models can help you learn and automate boring tasks. The author provides a list of 50 conversations that he (a programmer and research scientist studying ML) has had with different large language models to improve his ability to perform research and work on coding side projects.

5. Mastering ‘Advanced’ Prompting: Because Overcomplicating Things Is an Art Form

This article focuses on the current problem with prompting. It covers the basics, some “advanced” techniques, and, most importantly, debunks the myths around prompting. As the authors put it, “Despite all the hype around ‘advanced’ prompting techniques, it’s really just about telling the model what you want in plain language.”

Repositories & Tools

1. ZeroEval is a simple unified framework for evaluating LLMs on various tasks.

2. ScrapetoAI helps you extract data from websites for your custom GPTs.

3. Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback.

4. Every Programmer Should Know is a collection of (mostly) technical things every software developer should know about.

5. AgentScope helps you build LLM-powered multi-agent applications more easily.

Top Papers of The Week

1. Gemma 2: Improving Open Language Models at a Practical Size

This official paper introduces Gemma 2, a new addition to the Gemma family of lightweight open models, ranging in scale from 2 billion to 27 billion parameters. This version makes several technical modifications to the Transformer architecture, such as interleaving local and global attention and using grouped-query attention.
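To make the two attention tweaks concrete, here is a toy sketch in Python, not Gemma 2’s code. Local attention restricts each token to a sliding window of recent tokens, global attention lets it see the whole causal prefix, and grouped-query attention lets several query heads share one key/value head. The window size, the even/odd layer order, and the head counts in the example are illustrative assumptions.

```python
from typing import List, Optional

def attention_mask(seq_len: int, window: Optional[int]) -> List[List[bool]]:
    """Causal mask: mask[i][j] is True if token i may attend to token j.

    With `window` set, token i only sees the most recent `window` tokens
    (sliding-window, i.e. local, attention); with None it sees all earlier tokens.
    """
    return [
        [j <= i and (window is None or i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]

def layer_window(layer: int, local_window: int) -> Optional[int]:
    """Interleaving (illustrative order): even layers local, odd layers global."""
    return local_window if layer % 2 == 0 else None

def kv_head_for(query_head: int, n_query_heads: int, n_kv_heads: int) -> int:
    """Grouped-query attention: each group of consecutive query heads shares a KV head."""
    assert n_query_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_query_heads // n_kv_heads
    return query_head // group_size

for row in attention_mask(5, window=layer_window(0, local_window=2)):
    print("".join("x" if allowed else "." for allowed in row))
print(kv_head_for(5, n_query_heads=8, n_kv_heads=2))
```

With a window of 2, the printed mask shows each token attending only to itself and the previous token (`x....`, `xx...`, `.xx..`, `..xx.`, `...xx`), and query head 5 of 8 maps to KV head 1 of 2. Sharing KV heads shrinks the key/value cache, and local layers bound attention cost for long inputs.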

2. MoMa: Efficient Early-Fusion Pre-Training With Mixture of Modality-Aware Experts

This paper introduces MoMa, a modality-aware mixture-of-experts (MoE) architecture for pre-training mixed-modal, early-fusion language models. Under a 1-trillion-token training budget, the MoMa 1.4B model, featuring 4 text experts and 4 image experts, achieves impressive FLOPs savings: 3.7x overall, with 2.6x for text and 5.2x for image processing.
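The core idea of modality-aware experts is that text tokens and image tokens are routed only among experts reserved for their own modality. The sketch below shows just that partitioning with 4 text and 4 image experts, matching the counts above; the expert names and scores are made up, and top-1 selection over hand-written scores is our simplification (the real model learns its router and may route differently).

```python
from typing import Dict, List, Sequence, Tuple

def route(tokens: Sequence[Tuple[str, Sequence[float]]],
          experts: Dict[str, List[str]]) -> List[str]:
    """Assign each token to one expert from its own modality's group.

    tokens: (modality, router_scores) pairs, with one score per expert
    in that modality's group.
    """
    assignments = []
    for modality, scores in tokens:
        group = experts[modality]  # only this modality's experts are eligible
        best = max(range(len(group)), key=lambda i: scores[i])  # top-1 within the group
        assignments.append(group[best])
    return assignments

experts = {  # hypothetical expert names: 4 per modality
    "text": [f"text_expert_{i}" for i in range(4)],
    "image": [f"image_expert_{i}" for i in range(4)],
}
batch = [("text", [0.1, 0.7, 0.1, 0.1]), ("image", [0.2, 0.1, 0.6, 0.1])]
print(route(batch, experts))
```

The text token goes to `text_expert_1` and the image token to `image_expert_2`; because each token only competes for experts in its own group, compute per token stays that of a single expert while each group can specialize.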

3. MindSearch: Mimicking Human Minds Elicits Deep AI Searcher

This paper introduces MindSearch, which mimics how humans seek and integrate information on the web and can be instantiated with a simple LLM-based multi-agent framework. MindSearch significantly improves response quality, in terms of both depth and breadth, on closed-set and open-set QA problems.

This research evaluated LLMs’ performance on two medical question types, numeric (correlating findings) and semantic (differentiating entities), examining differences within and between LLMs and comparing their performance to humans. It tested Claude 3 and GPT-4 against six medical experts and found that both LLMs performed better on semantic than on numeric QAs, with Claude 3 surpassing GPT-4 on numeric QAs.

5. Reconciling Reality Through Simulation: A Real-to-Sim-to-Real Approach for Robust Manipulation

This paper proposes RialTo, a system for robustifying real-world imitation learning policies using reinforcement learning in “digital twin” simulation environments constructed from small amounts of real-world data. RialTo quickly scans and constructs digital twins of real-world environments and implements an “inverse distillation” procedure for bringing real-world demonstrations into simulated environments, with minimal human intervention and engineering.

Quick Links

1. Unexpected design flaws have forced Nvidia to push deliveries of Blackwell GPUs back to early next year. The production issue was discovered by manufacturer TSMC and involves the processor die that connects two Blackwell GPUs on a GB200.

2. OpenAI introduced ChatGPT’s new advanced voice mode to a small group of ChatGPT Plus subscribers. Various clips of the feature in action have appeared online, demonstrating its ability to sing, imitate accents, correct language pronunciation, and perform narrative storytelling.

3. GitHub launched an integrated Model Playground for AI developers. It lets developers explore, test, and compare various models within the GitHub web interface without leaving their current environment.

Who’s Hiring in AI

AI Engineer @IBM (Bengaluru, India)

Research Scientist, Generative AI @Google DeepMind (Mountain View, CA, USA)

Staff Generative AI / ML Engineer @MasterClass (San Francisco, CA, USA)

Sr. Generative AI & ML Specialist Solutions Architect @Amazon (Irvine, CA, USA)

API Technical Lead (Java) @Capco (Poland/Remote)

Junior Data Scientist @Amaze Software Inc. (USA/Remote)

AI Intern @Finz Limited (London, UK/Hybrid)

Interested in sharing a job opportunity here? Contact sponsors@towardsai.net.
