Cheat Sheet: What Most Teams Miss When Building with LLMs

Lesson 2 now free: RAG, Structured Outputs, Fine-Tuning

Everyone starts with prompts.

But if you've ever built beyond a toy project, you've probably hit this wall:

The model sounds fluent but the answers are off.

The output looks good until it breaks your parser.

The demo works but doesn’t scale.

The fix? It’s not always fine-tuning. In fact, it’s almost never the first step.

That’s exactly what we walk through in Session 2 of our 10-Hour LLM Video Primer, now free to watch.

Too busy to sit through two hours? Here's the distilled cheat sheet:

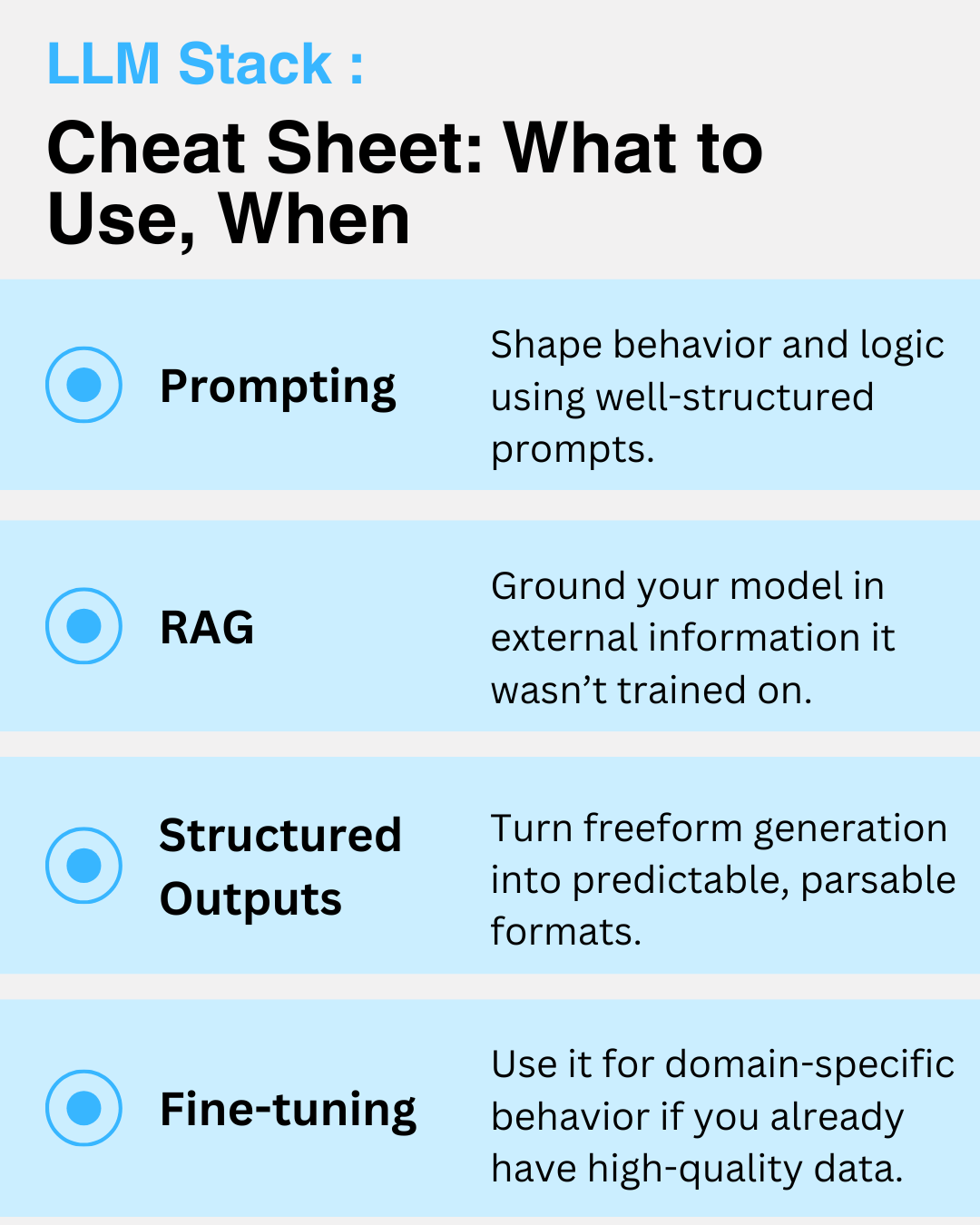

LLM Stack Cheat Sheet: What to Use, When

1. Prompting: Your starting point

Shape behavior and logic using well-structured prompts.

Start with: Zero-shot, few-shot, instruction formatting

Use when: General tasks, exploration, lightweight workflows

Next step if it fails: Move to RAG, not fine-tuning
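Here is a minimal Python sketch of zero-shot versus few-shot prompting with a formatted instruction. It assumes a chat-style API that accepts a list of role/content messages. No model is actually called, and `build_messages`, the sentiment task, and the example pairs are all hypothetical.

```python
def build_messages(instruction, query, examples=None):
    """Build a chat-style message list.

    With examples=None this is a zero-shot prompt; passing (input, output)
    pairs turns it into a few-shot prompt.
    """
    messages = [{"role": "system", "content": instruction}]
    for example_input, example_output in examples or []:
        # Each demonstration is a user turn followed by the ideal assistant answer.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": query})
    return messages


# Instruction formatting: state the task and the exact output format up front.
INSTRUCTION = (
    "Classify the sentiment of the customer message.\n"
    "Answer with exactly one word: positive, negative, or neutral."
)

zero_shot = build_messages(INSTRUCTION, "The update broke my login.")
few_shot = build_messages(
    INSTRUCTION,
    "The update broke my login.",
    examples=[
        ("Love the new dashboard!", "positive"),
        ("Delivery took three weeks.", "negative"),
    ],
)

for message in few_shot:
    print(f"{message['role']:>9}: {message['content']!r}")
```

The two prompts differ only in the demonstration pairs. If the zero-shot version misbehaves, adding a few well-chosen examples is cheap to try before reaching for anything further down this list.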

2. RAG: Inject real, dynamic knowledge

Ground your model in external information it wasn’t trained on.

Tools: LangChain, LlamaIndex, vector DBs (FAISS, Pinecone, Chroma)

Core strategies:

Smart chunking

Metadata indexing

Query rewriting

Verification loops

Multi-step reranking

Summarization + enrichment

Why it matters: Reduces hallucinations and brings domain context
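The following dependency-free Python sketch shows the basic RAG loop. It chunks documents with overlap, keeps the source file as metadata, retrieves the best-matching chunks, and builds a grounded prompt. Word-overlap scoring is a deliberate stand-in for the embedding similarity that a vector DB would compute. The helper names, chunk sizes, and documents are hypothetical.

```python
import re
from collections import Counter


def chunk_words(text, size=8, overlap=2):
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    words = text.split()
    step = size - overlap  # assumes overlap < size
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs, size=8, overlap=2):
    """Chunk each (source, text) pair, keeping the source file as metadata."""
    index = []
    for source, text in docs:
        for chunk in chunk_words(text, size, overlap):
            index.append({"source": source, "text": chunk, "terms": Counter(tokenize(chunk))})
    return index


def retrieve(index, query, k=2):
    """Return up to k chunks sharing the most words with the query (stand-in for vector search)."""
    query_terms = Counter(tokenize(query))
    scored = [(sum((query_terms & entry["terms"]).values()), entry) for entry in index]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [entry for _, entry in scored[:k]]


def grounded_prompt(query, chunks):
    """Put retrieved chunks, tagged with their source, in front of the question."""
    context = "\n".join(f"[{c['source']}] {c['text']}" for c in chunks)
    return (
        "Answer using only the context below and cite the [source]. "
        "If the context is not enough, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    ("refund-policy.md", "Unused items can be returned for a full refund within 14 days of delivery."),
    ("shipping.md", "Standard shipping takes 3 to 5 business days within the EU."),
]
index = build_index(docs)
query = "Can unused items get a refund?"
print(grounded_prompt(query, retrieve(index, query)))
```

You could replace `retrieve` with a real embedding search and add query rewriting or reranking ahead of `grounded_prompt`. That would change the quality of what gets retrieved without changing the shape of the loop.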

3. Structured Outputs: Make answers reliable

Turn freeform generation into predictable, parsable formats.

Use when: Your system depends on clean integration or automation

Techniques:

Schema-constrained prompting

Grammar-based decoding (e.g., masking out tokens that would violate a context-free grammar)

Tools: Outlines, Pydantic (Python), Zod (JS/TS)
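This stdlib-only Python sketch combines schema-constrained prompting with validation. The prompt spells out the schema, and the code rejects any reply unless it parses and matches that schema. In practice, Pydantic or Zod would normally handle the validation step. The `Ticket` schema, the category set, and the sample replies here are hypothetical.

```python
import json
from dataclasses import dataclass, fields


@dataclass
class Ticket:
    category: str
    priority: int
    summary: str


ALLOWED_CATEGORIES = {"billing", "bug", "feature"}

# Schema-constrained prompting: spell out the exact shape the reply must have.
SCHEMA_PROMPT = (
    "Return only a JSON object with keys "
    + ", ".join(f"{f.name} ({f.type.__name__})" for f in fields(Ticket))
    + ". category must be one of: "
    + ", ".join(sorted(ALLOWED_CATEGORIES))
    + "."
)


def parse_ticket(raw):
    """Parse a model reply into a Ticket, raising ValueError on any schema violation."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    expected = {f.name: f.type for f in fields(Ticket)}
    if set(data) != set(expected):
        raise ValueError(f"keys must be exactly {sorted(expected)}")
    for name, typ in expected.items():
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(data[name], typ) or isinstance(data[name], bool):
            raise ValueError(f"{name} must be {typ.__name__}")
    if data["category"] not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category {data['category']!r}")
    return Ticket(**data)


def is_valid(raw):
    """True if the reply passes parse_ticket, False otherwise."""
    try:
        parse_ticket(raw)
    except ValueError:
        return False
    return True


print(SCHEMA_PROMPT)
print(parse_ticket('{"category": "bug", "priority": 2, "summary": "Login fails after update"}'))
for reply in ["Sure! Here is the JSON you asked for.", '{"category": "bug", "priority": "high", "summary": "x"}']:
    print(reply[:30], "->", is_valid(reply))
```

When validation fails, a common pattern is to send the error message back to the model and ask for a corrected reply. Grammar-based decoding, as in Outlines, goes a step further and prevents invalid output from being generated in the first place.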

4. Fine-Tuning: Only when everything else falls short

Use it for narrow tasks, tone control, or domain-specific behavior, but only if you already have high-quality data.

Approaches: SFT, LoRA, QLoRA, RLHF, DPO, GRPO

Use cases:

Replacing domain-specific rules

Teaching behavior the base model hasn't learned

Personalization at scale

Consider: Time, compute, eval pipeline; ROI must be clear
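A quick back-of-the-envelope calculation shows why LoRA appeals when compute is the constraint. LoRA keeps a pretrained d × k weight matrix W frozen and trains a low-rank update, W + BA, where B is d × r and A is r × k. Each adapted matrix therefore adds only r(d + k) trainable parameters instead of d·k. The 4096 × 4096 shape and the ranks below are illustrative and not tied to any particular model.

```python
def lora_trainable_params(d, k, r):
    """Parameters in the low-rank update B @ A for one d x k matrix (B: d x r, A: r x k)."""
    return d * r + r * k


def full_params(d, k):
    """Parameters updated when fully fine-tuning the same d x k matrix."""
    return d * k


d, k = 4096, 4096  # illustrative projection-matrix shape
for r in (8, 16, 64):
    lora = lora_trainable_params(d, k, r)
    full = full_params(d, k)
    print(f"rank {r:>2}: {lora:>9,} trainable vs {full:,} full ({lora / full:.2%})")
```

At rank 8, the adapter is 1/256 the size of the full matrix. QLoRA applies the same idea to a base model whose frozen weights are quantized to 4-bit, which cuts memory further.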

Bonus: Real-World Infrastructure

These aren't extras — they're essentials once you ship:

Evaluation: Use BLEU, ROUGE, perplexity, plus human-in-the-loop tests. Measure continuously.

Cost & Latency: Use context caching (CAG) so repeated prompt prefixes aren't reprocessed and billed at full price on every call; both OpenAI and Gemini support it.

Tool Orchestration: Chain LLMs with APIs, agents, and conditional logic

Model Selection:

Use Gemini for long-context tasks

o3 or GPT-4-turbo for multi-step reasoning

GPT-4.1 mini or Gemini Flash for lightweight use cases
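To make "measure continuously" concrete, here is a minimal Python sketch of two of the automated metrics named above: perplexity computed from per-token log-probabilities, and a simplified unigram ROUGE-1 F1. It assumes your model or API can return natural-log probabilities for each token. The tokenization is deliberately naive, so the scores won't exactly match a maintained library. The regression cases are hypothetical.

```python
import math
from collections import Counter


def perplexity(token_logprobs):
    """exp(-mean log p): lower means the model found the text less surprising."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def rouge1_f1(candidate, reference):
    """Simplified ROUGE-1: F1 over overlapping unigrams (lowercased, whitespace-split)."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped counts of shared words
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


# Hypothetical regression set: (model output, reference answer) pairs.
cases = [
    ("the cat sat on the mat", "the cat is on the mat"),
    ("refunds take 14 days", "refunds are issued within 14 days"),
]
scores = [rouge1_f1(output, reference) for output, reference in cases]
print([round(s, 3) for s in scores])
print(perplexity([math.log(0.5)] * 4))  # four tokens, each with probability 0.5
```

A cheap first step before human-in-the-loop review is to run a fixed reference set through functions like these on every prompt or model change and alert when the average drops.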

We’ve expanded the entire production pipeline into a full 10-hour course built for developers and builders working on real-world LLM applications. In the next sessions, you’ll walk through:

Evaluating LLMs with automated metrics (BLEU, ROUGE, perplexity) and human-in-the-loop testing

Understanding agent workflows, tool use, orchestration, and how to manage cost/latency trade-offs

Applying core optimization and safety practices like quantization, distillation, RLHF, and prompt-injection mitigation

By the end, you’ll know how to build, evaluate, automate, and maintain LLM systems that hold up in production, not just in a notebook.

“Outstanding resource to master LLM development.”

“Helped me debug and design with confidence.”

“Gave me the mental model I didn’t know I was missing.”

The full course is available now at launch pricing ($199).

P.S. If you missed it, Lesson 1 is also still free.
